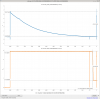

To check whether the OM2/Beckhoff coupling is a direct electronics coupling, we did an A-B-A test while the fast shutter was closed (no meaningful light on the DCPDs).

State A (should be quiet): 2023 Oct/10 15:18:30 UTC - 16:48:00 UTC. The same as the last observing mode. No electrical connection from any pin of the Beckhoff cable to the OM2 heater driver chassis. Heater drive voltage is supplied by the portable voltage reference.

State B (might be noisy): 16:50:00 UTC - 18:21:00 UTC. The cable is directly connected to the OM2 heater driver chassis.

State A (should be quiet): 18:23:00 - 19:19:30 UTC or so.

DetChar, please directly look at H1:OMC-DCPD_SUM_OUT_DQ to find combs.
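For anyone pulling data for these segments, here is a minimal sketch that converts the UTC times above to GPS seconds using only the Python standard library. The names (`utc_to_gps`, `SEGMENTS`, `gps_segments`) are made up for illustration, and the 18 s GPS-UTC offset is hardcoded, which is only valid for dates after the 2017-01-01 leap second. The end of the second State A is approximate ("or so"), so treat that edge with some margin.

```python
from datetime import datetime, timezone

# GPS time starts at 1980-01-06 00:00:00 UTC.
GPS_EPOCH = datetime(1980, 1, 6, tzinfo=timezone.utc)
# Assumption: hardcoded GPS-UTC offset, valid for dates after 2017-01-01.
GPS_UTC_OFFSET_S = 18


def utc_to_gps(date, hms):
    """Convert a UTC date ('YYYY-MM-DD') and time ('HH:MM:SS') to GPS seconds."""
    t = datetime.strptime(f"{date} {hms}", "%Y-%m-%d %H:%M:%S")
    t = t.replace(tzinfo=timezone.utc)
    return int((t - GPS_EPOCH).total_seconds()) + GPS_UTC_OFFSET_S


DATE = "2023-10-10"
# (label, start UTC, end UTC) as listed in this entry.
SEGMENTS = [
    ("A1 (should be quiet)", "15:18:30", "16:48:00"),
    ("B (might be noisy)", "16:50:00", "18:21:00"),
    ("A2 (should be quiet)", "18:23:00", "19:19:30"),  # end time is approximate
]

gps_segments = [
    (label, utc_to_gps(DATE, start), utc_to_gps(DATE, end))
    for label, start, end in SEGMENTS
]

for label, start, end in gps_segments:
    print(f"{label}: GPS {start} - {end} ({end - start} s)")
```

The resulting GPS spans can be passed to whatever tool is used to fetch H1:OMC-DCPD_SUM_OUT_DQ; the roughly two-minute gaps between states are the transition times and are deliberately left out.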

It seems that even with the shutter closed, a very small amount of light reaches the DCPDs once in a while (green and red arrows in the first attachment). One of these events (red arrow) lasted a long time, and we don't know what was going on there. One of the short glitches was caused by the BS being momentarily kicked (cyan arrow), with scattered light in HAM6 somehow reaching the DCPDs, but I couldn't find other glitches that exactly coincided with optics motion or with the IMC locking/unlocking.

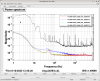

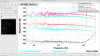

To give you a sense of how bad (or not) these glitches are, the 2nd attachment shows the DCPD spectrum for a quiet time in the first State A period (green), the strange glitchy period indicated by the red arrow in the first attachment (blue), a quiet time in State B (red), and the observing time (black, not corrected for the loop).

FYI, right now we're back to State A (should be quiet). Next Tuesday I'll inject something into the thermistors in the chamber. BTW, the 785 was moved in front of the HAM6 rack, though it's powered off and not connected to anything.

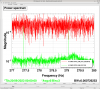

Here is a plot showing the effect of the cleaning that is currently ongoing. Since Robert was able to significantly mitigate the 120 Hz peak yesterday, there is not much difference between the strain channel and the cleaned channel there anymore. But, our LSC FF needs tuning (measurements to be taken later this commissioning period), so there's lots of effect down there.

Both the Jitter and LSC noises were retrained on data from our most recent lock. The high-frequency laser noise I haven't retrained in several weeks, and it's still doing quite nicely.

This quiet period ended at 20:22 UTC (for calibration measurements).

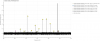

Attached is the range plot from the control room wall, where we can see the improvement due to the cleaning being engaged.

Since we have lost lock, I re-accepted in the h1oaf Observe.snap file the correct value of 0 gain for H1:OAF-NOISE_WHITENING_GAIN, so NonSENS will be off (without any SDF diffs) when we get relocked.