TITLE: 10/05 Eve Shift: 23:00-07:00 UTC (16:00-00:00 PDT), all times posted in UTC

STATE of H1: Observing at 151Mpc

INCOMING OPERATOR: Ryan C

SHIFT SUMMARY:

- Arrived with H1 locked, waiting for ADS to converge

- EX saturation @ 0:35

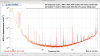

- Dust monitor (DM) 102 alert for PSL102, 500 nm; trend attached. Counts peaked around 100, will monitor

- Also had a 300 nm alert on DM 6 in the LVEA (HAM 6 area); counts peaked at 140 and calmed back down shortly after

- 1:43 - EQ mode activated, no alert from verbal for any EQ, peakmon counts are hovering around 600-700

- Looking at USGS, there did seem to be a small EQ from Alaska ~1:39 UTC, so I'm assuming this was the cause of the ground motion

- Another EQ alert @ 1:50, this was a 6.1 from Japan according to USGS; we'll see if we can make it through this one

- Looks like we made it, back to CALM @ 2:46

- 3:51 - another EQ, this one was a 5.4 from Panama

- 4:03 - EQ mode activated, back to CALM @ 4:13

- 6:05 - another EQ, this one was a 5.6 from Japan

LOG:

No log for this shift.