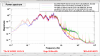

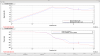

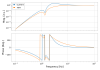

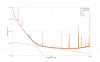

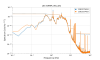

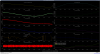

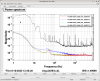

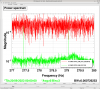

Vicky and I noticed an almost shelf-like structure, with an upper frequency of about 55 Hz, that was visible just after we acquired lock. I had a quick look at the coherence between DARM (Cal-deltal_external) and various channels (right side of attachment), and there is clearly a significant amount of coherence with the LSC channels (top-left frame of the right side of the attachment).

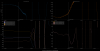
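For anyone who wants to repeat this kind of coherence check offline, here is a minimal sketch using `scipy.signal.coherence`. It runs on synthetic data only: the sample rate, the band edges, the low-pass coupling shape, and the helper name `band_coherence` are all assumptions made for illustration, not the real channels or the tool used for the attachment.

```python
import numpy as np
from scipy.signal import butter, coherence, sosfilt


def band_coherence(target, witness, fs, f_lo, f_hi, seg_seconds=4.0):
    """Mean magnitude-squared coherence between two series in [f_lo, f_hi] Hz."""
    nperseg = int(seg_seconds * fs)  # 4 s segments give 0.25 Hz resolution
    f, cxy = coherence(target, witness, fs=fs, nperseg=nperseg)
    band = (f >= f_lo) & (f <= f_hi)
    return float(cxy[band].mean())


# Synthetic stand-ins (assumed): a witness that leaks into the target only
# below roughly 55 Hz, on top of independent white noise.
rng = np.random.default_rng(0)
fs = 512.0
n = int(120 * fs)
witness = rng.standard_normal(n)
other_noise = rng.standard_normal(n)

sos = butter(4, 55.0, btype="low", fs=fs, output="sos")
target = other_noise + 2.0 * sosfilt(sos, witness)

low_band = band_coherence(target, witness, fs, 20.0, 50.0)
high_band = band_coherence(target, witness, fs, 100.0, 200.0)
print(f"coherence 20-50 Hz:   {low_band:.2f}")
print(f"coherence 100-200 Hz: {high_band:.2f}")
```

In this toy case the coherence is high inside the coupled band and close to the estimator's noise floor above it, which is the pattern to look for when deciding whether a shelf like this is coming from a particular witness.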

Mostly to see if I could, I ran a quick training of the LSC NonSENS subtraction and turned it on for a minute or so just before we went to Observing. The effect of the NonSENS cleaning (with freshly trained LSC MICH and SRCL subtraction) at the beginning of the lock is shown on the left side of the attachment, and the subtraction looks like it is roughly flattening out that shelf-like structure.
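As a rough illustration of what "train, then subtract" means, here is a minimal sketch of witness-based noise subtraction using a time-domain least-squares FIR fit. This is not the NonSENS implementation; the function names, tap count, and coupling filters are hypothetical, and the data are synthetic stand-ins for MICH, SRCL, and DARM.

```python
import numpy as np


def lagged_matrix(witnesses, n_taps):
    """Stack delayed copies of each witness as columns (causal FIR design matrix)."""
    cols = []
    for w in witnesses:
        for k in range(n_taps):
            col = np.zeros_like(w)
            col[k:] = w[: len(w) - k]  # witness delayed by k samples
            cols.append(col)
    return np.column_stack(cols)


def train_subtraction(target, witnesses, n_taps):
    """Fit FIR coefficients that best predict the target from the witnesses."""
    X = lagged_matrix(witnesses, n_taps)
    coeffs, *_ = np.linalg.lstsq(X, target, rcond=None)
    return coeffs


def apply_subtraction(target, witnesses, coeffs, n_taps):
    """Subtract the witness-predicted contribution from the target."""
    return target - lagged_matrix(witnesses, n_taps) @ coeffs


# Synthetic data (assumed): two witnesses coupling through short FIR filters.
rng = np.random.default_rng(1)
n, n_taps = 20000, 8
mich = rng.standard_normal(n)
srcl = rng.standard_normal(n)
other_noise = rng.standard_normal(n)
darm = (other_noise
        + np.convolve(mich, [0.8, -0.3, 0.1])[:n]
        + np.convolve(srcl, [0.5, 0.2])[:n])

# Train on the first half, then apply to the held-out second half.
half = n // 2
coeffs = train_subtraction(darm[:half], [mich[:half], srcl[:half]], n_taps)
raw = darm[half:]
cleaned = apply_subtraction(raw, [mich[half:], srcl[half:]], coeffs, n_taps)
print(f"rms before: {raw.std():.2f}, rms after: {cleaned.std():.2f}")
```

Training on one stretch and applying to another mirrors the situation here: a subtraction trained early in the lock only helps for as long as the coupling stays close to what it was during training.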

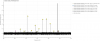

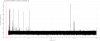

An hour or so into the lock, all of that coherence is completely gone (as Gabriele pointed out earlier today). My takeaway is that our LSC FF is not well tuned for the first part of the thermalization of the lock. That may be something that we just live with, but I thought it was interesting to note that part of the reason our range increases over the first hour or so of a lock is that we're thermalizing into the coupling function that the FF is tuned for.
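One way to write that picture down is the simplified single-witness model below. All symbols are illustrative assumptions rather than measured quantities: $C(f,t)$ is the witness-to-DARM coupling, which drifts during thermalization, $F(f)$ is the fixed feedforward estimate of it, $w$ is the witness, and $n$ is everything else in DARM, taken to be uncorrelated with $w$.

$$
d = n + \left[C(f,t) - F(f)\right] w,
\qquad
\gamma^2_{dw}(f,t) = \frac{\left|C(f,t) - F(f)\right|^2 S_{ww}(f)}{S_{nn}(f) + \left|C(f,t) - F(f)\right|^2 S_{ww}(f)}
$$

In this model the coherence $\gamma^2_{dw}$ falls to zero as $C(f,t) \to F(f)$, which would match the coherence disappearing once the lock has thermalized into the coupling the FF was tuned for.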