TITLE: 10/03 Eve Shift: 23:00-07:00 UTC (16:00-00:00 PDT), all times posted in UTC

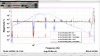

STATE of H1: Observing at 154Mpc

INCOMING OPERATOR: Ryan S

SHIFT SUMMARY:

- Arrived to a relocking IFO, back to NLN @ 0:12, OBSERVE @ 0:33 UTC

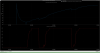

- PI 31 ringups @ 0:14 - successfully damped by guardian

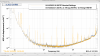

- 0:33 - inc 5.5 EQ from Japan - back to CALM @ 1:08

- EX saturation @ 0:51

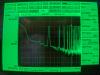

- 1:30 - Saturations on PR2/SR2/MC2 - I have never seen these before

- PR2 comes from PRM, SR2 comes from SR3, and MC2 comes from MC1, so unless there is some resonance between these suspensions that I don't know about, I'm inclined to think this was a glitch

- Lockloss @ 3:09

- Relocking was smooth (had a slight issue with DRMI going to CHECK MICH, but otherwise ok), back to NLN @ 4:13, OBSERVE @ 4:28

- 3:54 - inc 5.7 EQ from Philippines

- 5:13 - inc 5.7 EQ, this one from Japan again - EQ mode activated @ 5:34, back to CALM @ 5:44

- EX saturations @ 4:31/5:49

- The H1EDC is reading red with 12 channels - all pertaining to nuc26 - Tagging CDS

LOG:

No log for this shift.