Summary:

We aligned everything such that none of the 8 PDs was excellent but all were OK (we could also have set it up so that 4 PDs were excellent but a few were terrible, but decided against it). While we were preparing to put the array in storage until the installation, we found that something is wrong with the design of the asymmetric QPD clamp D1300963-V2. It's unusable as is.

The QPD clamp doesn't constrain the position of the QPD laterally, and there's a gross mismatch between the position of a properly aligned QPD and that of the center hole of the QPD clamp. Because of that, when the QPD is properly positioned, one of the QPD pins touches the QPD clamp and gets grounded unless the QPD connector is fixed in such a way that it pulls the QPD pins sideways. Fortunately, but sadly, the old non-tilt QPD clamp D1300963-V1 works better, so we'll use that.

Another, minor issue is that there seems to be some confusion about the direction of the QPD tilt in terms of the words "pitch" and "yaw". The way the QPD is tilted in D1101059-v5 (this is how things are set up in the lab as of now) doesn't seem to follow the design intent of ECR E1400231, though it follows its wording. After confirming with systems whether this is the case, we'll change the QPD tilt direction (or not). This means that we're not quite ready to put everything in storage yet.

None of this affects the PD array alignment we've done; this is just a problem with the QPD.

Pin grounding issue due to the QPD clamp design.

I loosened the screws for the QPD connector clamps (circled in blue in the first attachment), and the output of the QPD preamp went crazy, with super large 60Hz noise and a large DC SUM even though there was no laser light.

I disconnected the QPD connector, removed the connector clamps too, and found that one pin of the QPD was shorted to ground via the QPD clamp (not to be confused with the QPD connector clamps, see the 2nd attachment).

It turns out that the offending pin had stayed isolated throughout our adjustments only because the QPD connector clamps were pushing sideways as well as down, enough to bend the pins slightly away from the offending side. I was able to reattach the connector, push it laterally while tightening the clamp screws, and confirm that the QPD functioned fine. But this is not really where we wanted to be.

I rotated the QPD clamp by 180 degrees (which turns out to make more sense judging from the drawings in the first attachment), which moved the QPD. Since the beam radius is about 0.2mm, a QPD that has moved by 0.2mm is no longer useful as a reference for the in-lab beam position. I turned the laser on and repositioned the QPD back to where it should be, but then the pin on the opposite side started touching. (Sorry, no picture.)
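To see why a 0.2mm move matters, here is a minimal sketch of the normalized single-axis QPD signal for a Gaussian beam. It assumes an ideal QPD (infinite quadrants, no gap between them) and that "beam radius" means the 1/e^2 intensity radius w; under those assumptions the normalized difference is erf(sqrt(2) x / w). The function name is hypothetical.

```python
import math

def qpd_normalized_signal(x_mm: float, w_mm: float) -> float:
    """Normalized difference (A - B) / (A + B) along one axis.

    Assumes a Gaussian beam with 1/e^2 intensity radius w_mm, displaced by
    x_mm from the QPD center, and an ideal QPD (infinite area, no gap).
    """
    return math.erf(math.sqrt(2.0) * x_mm / w_mm)

if __name__ == "__main__":
    w = 0.2  # assumed 1/e^2 beam radius in mm ("about 0.2mm" in the log)
    for x in (0.0, 0.05, 0.1, 0.2, 0.3):
        print(f"offset {x:.2f} mm -> normalized signal {qpd_normalized_signal(x, w):.3f}")
```

With w = 0.2mm, an offset of 0.2mm already gives about 0.95 of full scale, so the QPD is close to saturation and no longer a sensitive position reference.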

I installed the old non-tilt version of the clamp and it was much, much better (attachment 3). It's annoying because the screw holes don't have an angled recess: the screw head is tilted relative to the mating surface on the clamp, contacting it at a single point, so tightening/loosening the screw tends to move the QPD. But it's possible to carefully tighten one screw a bit, then the other one a bit, and repeat that a dozen times or so until nothing moves even when pushed firmly by finger. After that, you can still move the QPD by tiny amounts by tapping the QPD assy with a bigger Allen key, then tighten again.

What's going on here?

In the 4th attachment, you can see that the "center" hole of the QPD clamp is offset by 0.055" (1.4mm) in the direction orthogonal to A-A, and by about 0.07" or 1.8mm in the A-A direction (even though this number is not specified anywhere in the drawing). So the total lateral offset is sqrt(1.4^2+1.8^2)~2.3mm. OTOH, the QPD assy is only 0.5" thick, so the lateral shift arising from the 1.41deg tilt at the back of the QPD assy is just 1.41/180*pi*0.5=0.0123" or 0.3mm.
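The arithmetic above can be checked with a few lines of Python. This is a sketch using the numbers quoted in the text; the 0.07" along A-A is the inferred value, and the 1.41deg is assumed to be the combined 1 deg pitch + 1 deg yaw from ECR E1400231 (sqrt(2)~1.41).

```python
import math

INCH_TO_MM = 25.4

# Offsets of the clamp's "center" hole, read from the drawing (4th attachment).
offset_perp_a_a_in = 0.055  # orthogonal to A-A (1.4mm)
offset_along_a_a_in = 0.07  # along A-A (1.8mm); not dimensioned on the drawing

# Assumption: 1.41deg is the combination of 1 deg pitch and 1 deg yaw.
tilt_deg = math.hypot(1.0, 1.0)
assy_thickness_in = 0.5  # QPD assy thickness

# Total lateral offset of the clamp hole, added in quadrature.
total_offset_mm = math.hypot(offset_perp_a_a_in, offset_along_a_a_in) * INCH_TO_MM

# Lateral shift at the back of the assy caused by the tilt.
# tan is used here; the small-angle form (tilt_rad * thickness) agrees to 3 digits.
tilt_shift_in = math.tan(math.radians(tilt_deg)) * assy_thickness_in
tilt_shift_mm = tilt_shift_in * INCH_TO_MM

if __name__ == "__main__":
    print(f"clamp hole offset : {total_offset_mm:.2f} mm")
    print(f"tilt-induced shift: {tilt_shift_in:.4f} in = {tilt_shift_mm:.2f} mm")
    print(f"ratio             : {total_offset_mm / tilt_shift_mm:.1f}x")
```

Using the rounded mm values from the text (1.4 and 1.8) gives ~2.28mm instead of ~2.26mm; either way the clamp hole offset is roughly 7 times the shift the tilt alone can explain.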

Given that the beam position relative to the array structure is determined by the array itself and not by how the QPD is mounted, a 2.3mm lateral shift is impossibly large; something must be wrong in the design. The 5th attachment is a visual aid.

Anyway, we'll use the old clamp; it's not worth designing and manufacturing new ones at this point.

QPD tilt direction.

If you go back to the first attachment, the QPD in the lab is tilted in the direction indicated by the red "tilt" arrow, as we simply followed the drawing.

The ECR E1400231 says "We have to tilt the QPD 1 deg in tip (pitch) and 1 deg in tilt (yaw)", which sounds as if it corroborates the drawing.

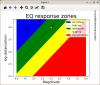

However, I suspect that "pitch" and "yaw" in the above sentence might have been misused. In the right figure of the 6th attachment (unedited screenshot of the ECR), it seems that the QPD reflection hits the elevator (the red 45 degree thing in the figure) at around the 6 o'clock position around the elliptical exit hole, which means that the QPD is tilted in its optical PIT. If it were really tilted 1 degree in optical PIT and 1 degree in optical YAW, the reflection would hit something like the 7:30 position instead of 6:00.

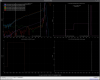
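The clock-position argument can be sketched as follows, assuming small angles, so that the reflection is deflected by twice the QPD tilt in each axis and the two axes add independently. The sign convention is an assumption for illustration only: positive PIT moves the reflection up and positive YAW moves it right as seen facing the elevator, with 12 o'clock up. Whether pitch plus yaw lands at 7:30 or 4:30 depends on the sign of the yaw. The function names are hypothetical.

```python
import math

def reflection_clock_position(pit_deg: float, yaw_deg: float) -> tuple[float, float]:
    """Return (clock hour, total deflection in deg) of the QPD reflection.

    Assumed convention: +PIT moves the reflection up, +YAW moves it right,
    12 o'clock is up and 3 o'clock is right. Small-angle approximation:
    the reflected beam turns by twice the mirror tilt in each axis.
    """
    dx = 2.0 * yaw_deg  # horizontal deflection of the reflected beam
    dy = 2.0 * pit_deg  # vertical deflection of the reflected beam
    # Angle measured clockwise from 12 o'clock, folded into [0, 360).
    angle_cw_deg = math.degrees(math.atan2(dx, dy)) % 360.0
    return angle_cw_deg / 30.0, math.hypot(dx, dy)

def as_clock_string(hour: float) -> str:
    """Format a fractional clock hour such as 7.5 as '7:30'."""
    total_minutes = round(hour * 60) % 720
    h, m = divmod(total_minutes, 60)
    return f"{h or 12}:{m:02d}"

if __name__ == "__main__":
    for label, pit, yaw in [
        ("PIT down only", -1.0, 0.0),
        ("PIT down + YAW", -1.0, -1.0),
    ]:
        hour, defl = reflection_clock_position(pit, yaw)
        print(f"{label:15s}: {as_clock_string(hour)} o'clock, deflection {defl:.2f} deg")
```

Only the direction (clock position) matters for this argument; where the spot actually lands on the elevator also depends on the distance and geometry, which are not modeled here.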

That makes sense, as the design intent of the ECR is to make sure that the QPD reflection will not go back into the exit hole. The 7th attachment is a side view I made: red lines represent the IR beams, yellow lines the internal hole(s) in the elevator, and green lines the aperture of the two elliptical exit holes. Nothing is to scale, but hopefully you agree that, in order to steer the QPD reflection outside of the exit hole aperture, PIT UP requires the largest tilt and PIT DOWN requires the least. Since the QPD tilt is fixed, it's best to PIT DOWN; that's what I read from the ECR. If you don't know which angle is bigger or smaller, see attachment 8.

Anyway, I'll ask Callum whether my interpretation is correct and will act accordingly.