Alan Knee, Beverly Berger

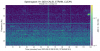

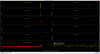

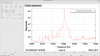

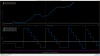

A newly regular ground motion has appeared in the corner station. It was first noticed in the 10-30 Hz SEI/BLRMS (figure 1) and in some of the CS ACC floor sensors (figure 2; notice the short blips near 30 Hz). The bursts recur consistently about every 80 minutes. They are also being picked up by various other sensors, including SUS/OpLev/BLRMS pitch for SR3 and ITMX (figure 3), yaw for ITMX in the same frequency range, and a PSL table microphone (figure 4).

I created spectrograms for H1:PEM-CS_ACC_LVEAFLOOR_XCRYO_Z_DQ (figure 5) and H1:GDS-CALIB_STRAIN_CLEAN (figure 6) over a 2-hour period starting Sep 22 at 8:00 UTC. They show that this noise is somehow coupling to the strain channel, though the feature is fairly faint there.

The feature in the SEI/BLRMS channel has appeared from time to time before, although not with the frequency and regularity seen now. The current episode seems to have started around 23:00 UTC on Sep 21 and persisted through Sep 22 and 23. It is still present today (Sep 24), though its cadence seems to have changed (figure 7).
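
One rough way to put a number on the cadence, and to check whether it has changed between days, is to threshold a BLRMS minute trend and take the median spacing between burst onsets. The sketch below is illustrative only: the function names `burst_onsets` and `median_interval_minutes`, the threshold value, and the synthetic trend are all assumptions made for the example. No real channel data is read, and this is not necessarily how the figures above were made.

```python
from statistics import median


def burst_onsets(times, values, threshold):
    """Return the times where `values` rises from below `threshold` to at or above it.

    A trend that already starts above threshold counts as one onset at the first sample.
    """
    onsets = []
    above = False
    for t, v in zip(times, values):
        if v >= threshold and not above:
            onsets.append(t)
        above = v >= threshold
    return onsets


def median_interval_minutes(onsets):
    """Median spacing between consecutive onsets in minutes, or None if fewer than two."""
    gaps = [(b - a) / 60.0 for a, b in zip(onsets, onsets[1:])]
    return median(gaps) if gaps else None


# Synthetic stand-in for a 10-30 Hz BLRMS minute trend (NOT real data):
# a quiet baseline of 1.0 with a two-sample burst every 80 minutes, over 10 hours.
times = [60 * i for i in range(600)]  # seconds
values = [5.0 if i % 80 in (10, 11) else 1.0 for i in range(600)]

onsets = burst_onsets(times, values, threshold=3.0)  # threshold is an arbitrary choice
print(len(onsets), median_interval_minutes(onsets))
```

Running the same two functions on separate days of trend data (for example Sep 22 versus Sep 24) would give one median interval per day to compare. The threshold would need to be tuned to the actual BLRMS baseline.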