TITLE: 09/23 Eve Shift: 23:00-07:00 UTC (16:00-00:00 PST), all times posted in UTC

STATE of H1: Aligning

INCOMING OPERATOR: Corey

SHIFT SUMMARY: Majority of my shift was quiet. We were out of Observing for less than two minutes due to the TCS ITMX CO2 laser unlocking (73061), and then at 05:39UTC we lost lock (73064) and had saturations in L2 of all ITMs/ETMs that delayed relocking. We are currently in INITIAL_ALIGNMENT with Corey helping us lock back up.

23:00UTC Detector Observing and Locked for 7.5 hours

23:40 Taken out of Observing by TCS ITMX CO2 laser unlocking (73061)

23:42 SDF Diffs cleared by themselves and we went back into Observing

05:39 Lockloss (73064)

LOG:

no log

Right after lockloss DIAG_MAIN was showing:

- PSL_ISS: Diffracted power is low

- OPLEV_SUMS: ETMX sums low

05:41UTC LOCKING_ARMS_GREEN: the detector couldn't see ALSY at all, and I noticed the ETM/ITM L2 saturations (attachment1 - L2 ITMX MASTER_OUT as an example) on the saturation monitor (attachment2) and saw the ETMY oplev moving around wildly (attachment3)

05:54 I took the detector to DOWN, and immediately could see ALSY on the cameras and ndscope; L2 saturations were all still there

05:56 and 06:05 I went to LOCKING_ARMS_GREEN and GREEN_ARMS_MANUAL, respectively, but wasn't able to lock anything. I could reach both states without the saturations getting too bad, but ALSX and ALSY each eventually locked for a few seconds, then unlocked and went to basically 0 on the cameras and ndscope (attachment4). Sometime after this, ALSX and ALSY went to FAULT and gave the messages "PDH" and "ReflPD A"

06:07 Tried going to INITIAL_ALIGNMENT but Verbal kept calling out all of the optics as saturating, ALIGN_IFO wouldn't move past SET_SUS_FOR_ALS_FPMI, and the oplev overview screen showed ETMY, ITMX, and ETMX moving all over the place (I was worried something would go really wrong if I left it in that configuration)

I tried waiting a bit and then tried INITIAL_ALIGNMENT and LOCKING_ARMS_GREEN again but had the same results.

Naoki came on and thought the saturations might be due to the camera servos, so he cleared the camera servo history and all of the saturations disappeared (attachment5) and an INITIAL_ALIGNMENT is now running fine.

Not sure what caused the saturations in the camera servos, but it'll be worth looking into whether the saturations are what ended up causing this lockloss.

As shown in the attached figure, the camera servo output for PIT suddenly became 1e20, and the lockloss happened 0.3s later, so this lockloss is likely due to the camera servo. I checked the input signal of the camera servo. The bottom left is the input of PIT1 and should be the same as the BS camera output (bottom right). The BS camera output itself looks OK, but the input of PIT1 became red dashed and the output became 1e20. The situation is the same for PIT2 and PIT3. I am not sure why this happened.

Opened FRS29216. It looks like a transient NaN on the ETMY_Y camera centroid channel caused the outputs of the ASC CAM_PIT filter modules to latch at 1e20.
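For anyone wondering why a single transient NaN can stick until the history is cleared, here is a toy sketch of a recursive filter. This is not the front-end filter module code: the class name, the one-pole design, and the coefficient are all assumptions made for illustration, and the toy output stays NaN rather than reading 1e20 as the real filter modules did. It only shows the general mechanism: once a NaN enters the filter history, every later output is contaminated until the history is reset.

```python
import math


class OnePoleLowPass:
    """Toy recursive filter (hypothetical): y[n] = a*y[n-1] + (1-a)*x[n]."""

    def __init__(self, a=0.9):
        self.a = a
        self.history = 0.0  # previous output, fed back into the next step

    def step(self, x):
        # The previous output is reused here, so a NaN never leaves on its own.
        self.history = self.a * self.history + (1.0 - self.a) * x
        return self.history

    def clear_history(self):
        # Analogous in spirit to clearing the servo history by hand.
        self.history = 0.0


filt = OnePoleLowPass()

# Synthetic input: good samples, one transient NaN, then good samples again.
inputs = [1.0, 1.0, float("nan"), 1.0, 1.0]
outputs = [filt.step(x) for x in inputs]

# Every output from the NaN sample onward is NaN, even though the input recovered.
stuck = [math.isnan(y) for y in outputs]

# After clearing the history, the filter responds normally again.
filt.clear_history()
recovered = filt.step(1.0)
```

In this toy the input is bad for one sample only, yet the output stays bad until `clear_history()` is called, which is consistent with the saturations disappearing once the camera servo history was cleared.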

Camilla, Oli

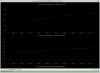

We trended the ASC-CAM_PIT{1,2,3}_INMON/OUTPUT channels alongside ASC-AS_A_DC_NSUM_OUT_DQ (attachment1) and found that, according to ASC-AS_A_DC_NSUM_OUT_DQ, the lockloss started (cursor 1) ~0.37s before PIT{1,2,3}_INMON read NaN (cursor 2), so the lockloss was not caused by the camera servos.
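The ordering check above was done by eye with cursors on the trend. A minimal sketch of the same comparison in code follows, assuming the INMON samples and their times have already been pulled into plain lists. The helper name `first_nan_time`, the 100 Hz sample rate, and all of the numbers are synthetic placeholders chosen to mimic the ~0.37s gap, not the real channel data.

```python
import math


def first_nan_time(times, values):
    """Return the time of the first NaN sample, or None if there is none."""
    for t, v in zip(times, values):
        if math.isnan(v):
            return t
    return None


# Synthetic stand-in for a PIT INMON trend (assumed 100 Hz, 1 s long).
sample_rate = 100
times = [i / sample_rate for i in range(100)]
inmon = [float("nan") if i >= 57 else 0.5 for i in range(100)]

# Synthetic lockloss start time, as would be read off the AS_A trend.
lockloss_start = 0.20

nan_start = first_nan_time(times, inmon)

# If the NaN starts after the lockloss, it cannot have caused the lockloss.
nan_after_lockloss = nan_start is not None and nan_start > lockloss_start
delay = nan_start - lockloss_start
```

With these placeholder values the NaN begins about 0.37s after the lockloss start, so `nan_after_lockloss` is true, matching the ordering seen with the cursors.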

It is possible that the NaN values are linked to the light dropping off of the PIT2 and PIT3 cameras right after the lockloss: when the cameras come back online ~0.2s later, both the PIT2 and PIT3 cameras are at 0, and looking back over several locklosses, these two cameras tend to drop to 0 between 0.35 and 0.55s after the lockloss starts. However, the PIT1 camera still registers light for another 0.8s after coming back online (a typical time for this camera).

Patrick updated the camera server to solve the issue in alog73228.