Summary

I found vibration coupling associated with motion of the HAM4 ISI (at a few times background), BSC2 ST2 motion (at about 10 times background), and, likely, on the chamber walls of the ITMs (accounting for much of DARM in the 20 Hz region). The coupling associated with HAM4 may be due to reflection of the 45 degree annular beams from the BS and its cage, and may be mitigated by BBS installation and table baffles at HAM4. The coupling at the chamber walls of the ITMs may be due to the 20 degree annular beam from the ITM bevels, which would be mitigated by installation of cage baffles on the ITMs. However, I would like some more commissioning time to be more sure of this.

Recently, broad-band non-linear vibration coupling in the corner station was revealed by investigations of the coupling of HVAC components to DARM (86412). This is an update on searches for the site of that coupling.

We check for sites on the internal tables (ISIs) by shaking individual ISIs or HPIs. Discriminating between sites on the vacuum enclosure is more difficult because shaking at one location tends to shake many vacuum chambers about the same amount. To identify an enclosure site, we use frequency dependence and propagation delays (velocities on these steel membranes are only 100s of m/s). The basic idea is that if a patch of chamber wall is producing noise by reflecting scattered light back into the interferometer, then an accelerometer placed on the outside of that patch will, compared to other accelerometers, produce a signal that is precisely correlated with the signal in DARM.

Internal tables

I eliminated most of the tables in the LVEA either by injecting into the ISI control loops or by monitoring their motion during external injections. However, I did find coupling at the HAM4 ISI and the BS ISI.

Coupling at HAM4 ISI

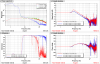

Figure 1 shows that we found coupling at the HAM4 ISI. An increase in Y-axis motion of about 30 produced a feature in DARM that was several times background. This coupling appeared mainly linear and so was not the coupling we were looking for. A potential source of this coupling is reflection of the 45 degree annular beam from the beamsplitter that illuminates this table (83050). The BBS, its less reflective cage and planned table baffling may mitigate this coupling.

Coupling with motion of ST2 of the BS ISI

Figure 2 shows that I produced noise in DARM by shaking the BS ISI. I did a series of injections that suggest that noise in DARM is produced by motion of BS ISI ST2 (where the cage is attached), but not by motion of ST0 (where the elliptical baffles are attached) or of the BS itself. This noise may be associated with the 45 degree annular beam from the BS (83050) and may be reduced with the new BBS cage, which is less reflective.

Vacuum enclosure

I have been using three techniques to find coupling sites on the inside walls of the vacuum enclosure. These tests, while ongoing, have narrowed down the non-linear coupling to the enclosure walls in the vertex.

1) Shaker and speaker sweeps from multiple locations

Shaker sweeps are used in two ways. The first is frequency consistency: if an accelerometer is mounted at the coupling site and shows a resonance at some frequency, then DARM should also show greater motion at that frequency. The second is consistency across vibration injections from multiple locations: if the accelerometer is mounted at the coupling site, and it moves less for injections onto the mode cleaner tube than onto BSC8, then DARM should also be less affected by the SR tube injection. Figure 3 illustrates this for one of the sweep pairs.

The most consistent accelerometer locations in frequency: ITMX, ITMY and BS chamber walls

The most consistent accelerometer locations in response to different shaker locations: ITMX, ITMY and BS chamber walls
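The second check (consistency across shaker locations) can be sketched as a simple tally. This is only an illustrative sketch, not the analysis used for the results above: the function name and the amplitude values are hypothetical, and it deliberately compares only which injection produced more motion, since the coupling of interest is non-linear and amplitude ratios need not match.

```python
def direction_agreement(accel_pairs, darm_pairs):
    """Fraction of sweep pairs where an accelerometer and DARM agree on
    which of the two injection locations produced the larger response.

    Each element is a tuple (amplitude_for_location_a, amplitude_for_location_b)
    measured in the same frequency band. All values here are hypothetical.
    """
    if len(accel_pairs) != len(darm_pairs) or not accel_pairs:
        raise ValueError("need equal, non-zero numbers of sweep pairs")
    agree = 0
    for (acc_a, acc_b), (darm_a, darm_b) in zip(accel_pairs, darm_pairs):
        # Same sign of the difference means the same location "won" in both
        if (acc_a - acc_b) * (darm_a - darm_b) > 0:
            agree += 1
    return agree / len(accel_pairs)


# Hypothetical amplitudes for three sweep pairs (arbitrary units)
darm = [(1.0, 3.0), (2.5, 1.2), (0.8, 0.9)]
candidate_at_site = [(0.2, 0.7), (0.9, 0.3), (0.4, 0.5)]
candidate_elsewhere = [(0.6, 0.2), (0.9, 0.3), (0.5, 0.1)]

score_site = direction_agreement(candidate_at_site, darm)
score_elsewhere = direction_agreement(candidate_elsewhere, darm)
```

An accelerometer near the coupling site would be expected to score close to 1; a low score argues against that location.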

2) Beating shakers technique

The Beating Shaker technique (52184) uses differences in propagation time from different shaker locations to locate the coupling site. When two shakers inject at two slightly different frequencies (e.g. 35.005 Hz and 35 Hz), the beat envelope will have a different phase at different locations due to propagation delays. If the accelerometer is at the coupling site, its beat envelope will be in phase with DARM’s for any shaking location.
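To see why small propagation delays become visible in the beat envelope, here is a minimal sketch assuming two equal-amplitude tones and simple, non-dispersive propagation. The distances and the 300 m/s speed are hypothetical illustration values, not measurements. For a signal cos(2π f1 (t − τ1)) + cos(2π f2 (t − τ2)), the envelope peaks at t = (f1 τ1 − f2 τ2) / (f1 − f2), so a delay difference is magnified by roughly f / Δf.

```python
def propagation_delay(distance_m, speed_m_per_s):
    """Travel time in seconds over distance_m at speed_m_per_s."""
    return distance_m / speed_m_per_s


def beat_envelope_shift(f1, f2, tau1, tau2):
    """Time (s) of a beat-envelope peak for two equal-amplitude tones
    cos(2*pi*f1*(t - tau1)) + cos(2*pi*f2*(t - tau2)).

    The result is defined modulo the beat period 1 / (f1 - f2).
    """
    return (f1 * tau1 - f2 * tau2) / (f1 - f2)


f1, f2 = 35.005, 35.0  # shaker frequencies from the text (Hz)
speed = 300.0          # hypothetical wave speed on the enclosure (m/s)

# Hypothetical sensor 3 m from shaker 1 and 6 m from shaker 2
tau1 = propagation_delay(3.0, speed)  # 0.01 s
tau2 = propagation_delay(6.0, speed)  # 0.02 s

shift = beat_envelope_shift(f1, f2, tau1, tau2)  # about -70 s
beat_period = 1.0 / (f1 - f2)                    # about 200 s
```

In this example a 10 ms difference in propagation delay moves the envelope by about 70 s out of a 200 s beat period, which is why locations only a few meters apart can be distinguished.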

The beat envelope in DARM was not as clear as it has been for past uses of the Beating Shaker technique, because of the side bands. So I fit a simulated beat envelope using a cross correlation technique. This is illustrated in Figure 4. The best accelerometers for beat consistency were ITMY-Y, ITMY-X and ITMX-Z. I think it might be useful for DetChar or others to search for an ASC motion that could account for the side bands during the injection period shown in Figure 4.
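One way such a fit could work is sketched below. This is an assumption about the general approach, not the actual code behind Figure 4: the function names, the equal-amplitude envelope model, and the brute-force phase scan are all illustrative choices. The idea is to correlate a measured envelope (for example, band-limited RMS versus time) against model envelopes at trial phases and keep the phase with the highest correlation, then compare that phase between DARM and each accelerometer.

```python
import math


def model_envelope(t, beat_hz, phase):
    """Envelope of two equal-amplitude tones separated by beat_hz (assumed model)."""
    return math.sqrt(max(0.0, 2.0 + 2.0 * math.cos(2.0 * math.pi * beat_hz * t - phase)))


def correlation(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    dx = [a - mean_x for a in x]
    dy = [b - mean_y for b in y]
    num = sum(a * b for a, b in zip(dx, dy))
    den = math.sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))
    return num / den


def fit_beat_phase(times, measured, beat_hz, n_phases=360):
    """Scan trial phases and return (best_phase, best_correlation)."""
    best_phase, best_corr = 0.0, -2.0
    for k in range(n_phases):
        phase = 2.0 * math.pi * k / n_phases
        model = [model_envelope(t, beat_hz, phase) for t in times]
        corr = correlation(measured, model)
        if corr > best_corr:
            best_phase, best_corr = phase, corr
    return best_phase, best_corr


def wrapped_difference(a, b):
    """Phase difference a - b wrapped into [-pi, pi)."""
    return (a - b + math.pi) % (2.0 * math.pi) - math.pi
```

With a fitted phase for DARM and for each accelerometer, `wrapped_difference` gives the envelope phase mismatch; a sensor at the coupling site should show a mismatch near zero for every pair of shaker locations.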

3) Hand held mini-shaker

A small shaker made of a speaker with an attached reaction mass (Figure 5) is used to take advantage of the large amplitude near-field region right at the shaker in an attempt to find a region on the vacuum enclosure where the shaker coupling dramatically increases. This technique eliminated the BSC7 potential sites and I hope to use it to test the 20 degree ITM beam hypothesis in future commissioning sessions.

Since our accelerometer array has low spatial resolution (something to think about for CE), we also mount temporary accelerometers as we narrow in on a site. This is the stage I am at: mounting accelerometers to further narrow the site. However, the results so far are consistent with coupling of the 20 degree beam from the ITM bevels (83050). These annular beams were eliminated by the cage baffles at LLO and we plan on installing them at LHO.