TITLE: 09/14 Eve Shift: 23:00-07:00 UTC (16:00-00:00 PDT), all times posted in UTC

STATE of H1: Observing at 142Mpc

INCOMING OPERATOR: Ryan S

SHIFT SUMMARY:

IFO is in NLN and OBSERVING as of 06:04 UTC

Lock acquisition was fully automatic, but had to go through PRMI and MICH_FRINGES.

3 IY SDF diffs accepted as per Jenne's instruction (screenshotted)

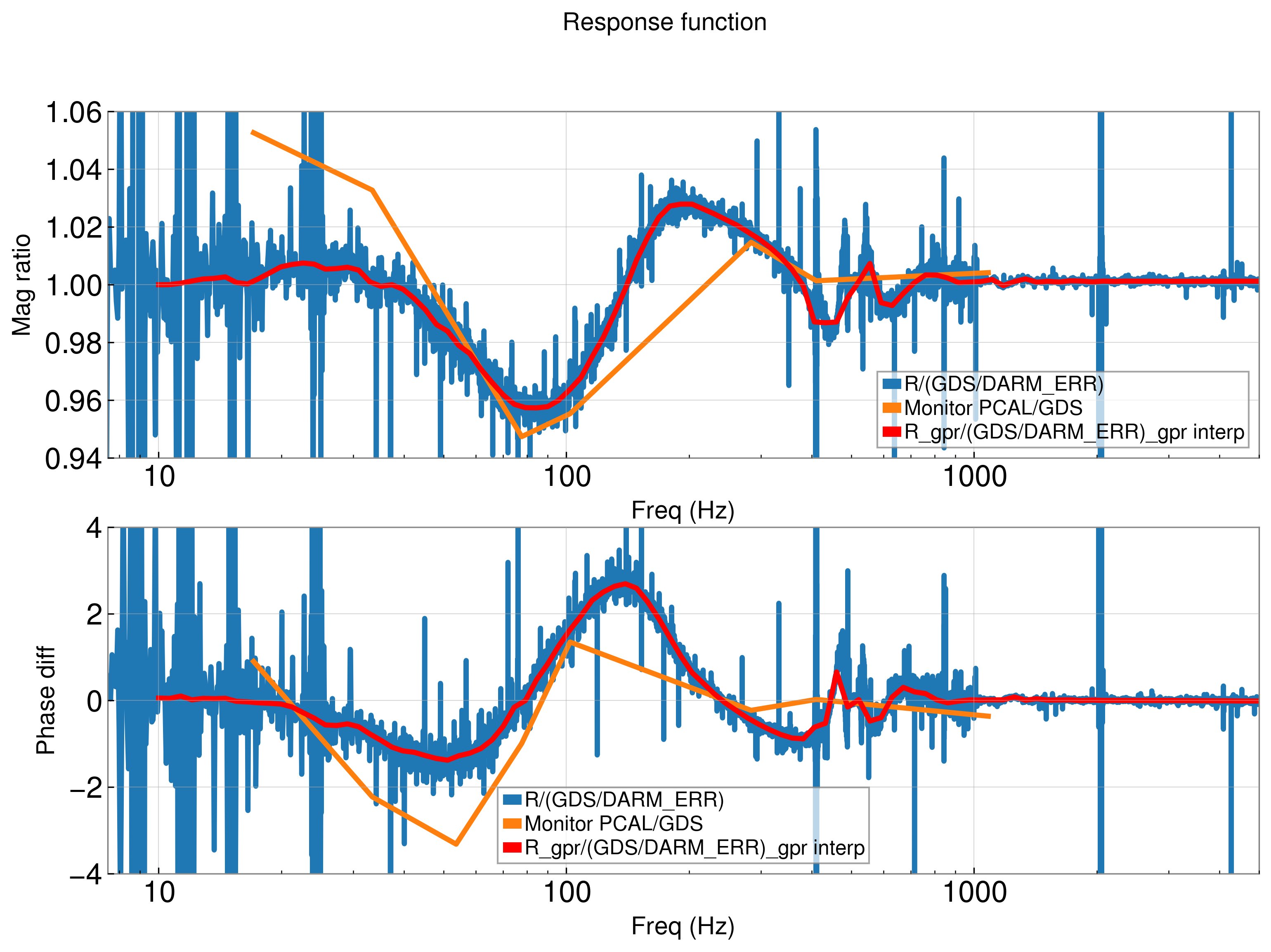

Lockloss Investigation (alog 72875)

“EX” Saturation one second before the lockloss.

The lockloss happened at 04:45:48 UTC. While looking for the so-called “first cause”, I noticed that there was an EX saturation at 04:45:47. I checked the L3 actuators since those were the highest (blinking yellow) on the saturation monitor.

Upon trending this lockloss, I found that there was indeed a very large actuation at 04:45:47 on all 4 ESDs. The fact that they all peaked within the same millisecond suggests to me that they were driven by a common cause upstream of them rather than by any one ESD. (Screenshot "EXSaturation")

More curious, however, is a preliminary negative “kick” about half a second before the saturation (1.5 seconds pre-lockloss, at 04:45:46.5 UTC). This wasn’t a saturation, but I’m assuming it contributed to instability in EX. This kick was not a regular occurrence before the saturation, so I think it may be relevant to the whole mystery. It also happened at the same time (to the nearest ms) on all 4 ESDs, with the same magnitude of ~-330,000 cts on each. Since the time and magnitude are identical for all 4, I think this kick was also caused by something else.
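
The “same millisecond, same magnitude” argument above can be written down as a simple common-mode test. This is a minimal sketch, assuming the peak time and amplitude of each ESD channel have already been read off a trend; the quadrant labels (UL, LL, UR, LR) and the 5% amplitude tolerance are assumptions for illustration, not values taken from this entry.

```python
from dataclasses import dataclass


@dataclass
class Peak:
    channel: str      # assumed label, e.g. an ESD quadrant name
    time_s: float     # time of the peak, in seconds (any common reference)
    amplitude: float  # peak value, in counts


def is_common_mode(peaks, time_tol_s=0.001, amp_rel_tol=0.05):
    """Return True if all peaks land within time_tol_s of each other and their
    amplitudes agree to within amp_rel_tol of the largest absolute amplitude.

    A common-mode result points to a shared upstream drive rather than a
    single misbehaving channel.
    """
    if len(peaks) < 2:
        raise ValueError("need at least two channels to compare")
    times = [p.time_s for p in peaks]
    amps = [p.amplitude for p in peaks]
    time_spread = max(times) - min(times)
    scale = max(abs(a) for a in amps)
    amp_spread = (max(amps) - min(amps)) / scale if scale else 0.0
    return time_spread <= time_tol_s and amp_spread <= amp_rel_tol


# Values as described above: all four at 04:45:46.5 UTC (written here as
# seconds after 04:45:00) with ~-330,000 cts each.
kick = [Peak(q, 46.5, -330_000.0) for q in ("UL", "LL", "UR", "LR")]
print(is_common_mode(kick))  # True
```

If any one quadrant had peaked half a second later, or at a third of the amplitude, the test would return False and that channel would deserve a closer look.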

It’s worth noting that this preliminary kick was orders of magnitude larger (though negative) than the EX activity leading up to it (Screenshot "EXSaturation2"), and that the saturation itself, which went up to 1×10^11 counts, was many orders of magnitude larger than the kick.
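
To put “many orders of magnitude” into a number, here is a quick sketch that takes log10 of the ratio of the two approximate magnitudes quoted above (~330,000 cts for the kick, ~1×10^11 counts for the saturation). The function name is illustrative only.

```python
import math


def orders_of_magnitude(a, b):
    """Return log10(|a| / |b|): how many powers of ten separate two values."""
    return math.log10(abs(a) / abs(b))


kick_cts = -330_000.0   # preliminary kick, ~-330,000 cts (from this entry)
saturation_cts = 1e11   # saturation peak, ~1x10^11 counts (from this entry)

print(f"{orders_of_magnitude(saturation_cts, kick_cts):.1f}")  # 5.5
```

So the saturation was roughly five and a half orders of magnitude larger than the preliminary kick.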

It is equally worth noting that EX has saturated 5 separate times since the beginning of the shift:

- 00:43:44 UTC

- 01:52:23 UTC

- 02:04:00 UTC

- 04:33:33 UTC (12 mins pre-lockloss)

- 04:45:47 UTC (1 second pre-lockloss)
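
Converting the saturation times above into offsets from the 04:45:48 UTC lockloss is simple arithmetic. A minimal sketch, assuming all five times fall on the same UTC day as the lockloss (they are all after 00:00 UTC, so there is no midnight rollover to handle):

```python
def to_seconds(hms):
    """Convert an 'HH:MM:SS' UTC time of day into seconds since 00:00 UTC."""
    h, m, s = (int(part) for part in hms.split(":"))
    return 3600 * h + 60 * m + s


LOCKLOSS = "04:45:48"
SATURATIONS = ["00:43:44", "01:52:23", "02:04:00", "04:33:33", "04:45:47"]

# All times share the same UTC day, so a plain subtraction is enough.
for t in SATURATIONS:
    minutes, seconds = divmod(to_seconds(LOCKLOSS) - to_seconds(t), 60)
    print(f"{t} UTC: {minutes} min {seconds} s before lockloss")
# The last two lines read "12 min 15 s" and "0 min 1 s".
```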

Now the question becomes: was it another stage of “EX” or was it something else?

First, we can trend the other EX stages and see whether any of them show behavior even earlier than the L3 kick. (This becomes relevant later, so I’ll leave this investigation for now.)

Second, we can look at other fun and suspicious factors:

- One such “other” factor is the glitch that started it all: a big peak showing up on all the bands of the BLRMs. Going into each band, we can see that the same type of pre-peak “kick” is there too. However, it happens pretty much exactly when the lockloss occurs, at 04:45:48 UTC, implying that it was not the cause (Screenshot "BLRMSringup" 3). Note that the kick coinciding with the lockloss shows up as just a vertical line in the screenshot; that is because it was much larger than the preliminary kick, which in turn was much larger than all of the motion leading up to it. Potentially relevant here is that the 38-60 Hz band sees this first (04:45:48 UTC) and the 10-20 Hz band sees it last, about a second later (04:45:49 UTC).

- Another “other” factor: when I briefly brought this issue up with TJ today, we found that the OMC DCPD was the first to see the kick in yesterday’s lockloss (alog 72852), so this is worth investigating separately from the discussion above.
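
Deciding which BLRMs band “sees it first” comes down to finding each band’s first threshold crossing. This is a hedged sketch, not an existing tool: it assumes each band is available as a list of samples at a known rate, and it sets each threshold at 10× the median of an early, quiet stretch, which is an arbitrary choice. The band data in the usage example is synthetic.

```python
import statistics


def first_crossing(samples, rate_hz, t0, threshold):
    """Return the time of the first sample whose magnitude exceeds threshold,
    or None if the threshold is never crossed."""
    for i, x in enumerate(samples):
        if abs(x) > threshold:
            return t0 + i / rate_hz
    return None


def onset_order(bands, rate_hz, t0=0.0, factor=10.0, baseline_n=16):
    """Rank bands by onset time.

    Each band's threshold is `factor` times the median magnitude of its first
    `baseline_n` samples (assumed quiet). If that baseline is exactly zero,
    any nonzero sample counts as a crossing. Bands that never cross are left
    out of the ranking.
    """
    onsets = {}
    for name, samples in bands.items():
        baseline = statistics.median([abs(x) for x in samples[:baseline_n]])
        t = first_crossing(samples, rate_hz, t0, factor * baseline)
        if t is not None:
            onsets[name] = t
    return sorted(onsets.items(), key=lambda item: item[1])


# Synthetic data (not real BLRMs output): 16 samples/s, quiet level 1.0,
# with the 38-60 Hz band jumping at t = 2 s and the 10-20 Hz band at t = 3 s.
bands = {
    "10-20Hz": [1.0] * 48 + [50.0] * 16,
    "38-60Hz": [1.0] * 32 + [50.0] * 32,
}
print(onset_order(bands, rate_hz=16.0))  # [('38-60Hz', 2.0), ('10-20Hz', 3.0)]
```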

This leads me to believe that whatever caused the glitch behavior may be related to the EX saturation, which raises the question: have the recent EX saturations all prompted these all-band BLRMs glitches? Let’s find out.

Matching the EX saturation timestamps above:

Looking at the saturation 12 mins before the lockloss, there was indeed a ring-up, this time with the 20-34 Hz band being first and highest in magnitude (Screenshot "EXSaturation4"). It’s worth saying that this rang up much less (I had to zoom in by many orders of magnitude to see the glitch behavior). That makes sense, since it didn’t cause a lockloss, but it also tells us that the locklosses themselves are particularly aggressive, given that:

- When we do lose lock, we have to go all the way to MICH_FRINGES in order to align properly (and yesterday even that didn’t work).

- The EX saturations that cause these glitches without causing a lockloss are orders of magnitude smaller than the ones that do.

Anyway, all EX saturations during this shift caused this behavior on the BLRMs screen. All of the ones that did not cause a lockloss were about 1×10^8 times smaller in magnitude than the one that did.
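
Matching each EX saturation to a BLRMs glitch, as done above, amounts to a nearest-neighbour search within a time window. A minimal sketch, assuming glitch times have already been picked out (for example by eye from the BLRMs screen); the 2 s window and the function names are assumptions, and the numbers in the usage lines are placeholders rather than measurements from this shift.

```python
def match_events(saturation_times, glitch_times, window_s=2.0):
    """Map each saturation time to the nearest glitch time within window_s
    seconds, or to None if no glitch is close enough."""
    matches = {}
    for s in saturation_times:
        nearby = [g for g in glitch_times if abs(g - s) <= window_s]
        matches[s] = min(nearby, key=lambda g: abs(g - s)) if nearby else None
    return matches


def fraction_matched(matches):
    """Fraction of saturations that have a matching glitch."""
    return sum(v is not None for v in matches.values()) / len(matches)


# Placeholder times in seconds, not measurements from this shift.
m = match_events([100.0, 200.0], [100.4, 350.0])
print(m)                    # {100.0: 100.4, 200.0: None}
print(fraction_matched(m))  # 0.5
```

A fraction of 1.0 over the five saturations listed earlier would correspond to the statement that all EX saturations this shift showed up on the BLRMs screen.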

On its own, this isn’t saying much other than that an EX ring-up will show up in DARM.

But we have now seen that these glitches coincide with EX saturations, so let’s find out whether the saturations are actually causing them. So far, we know that a very bad EX saturation happens, the BLRMs screen lights up, and then we lose lock. This splits our question up yet again:

Does something entirely different (the OMC, perhaps) cause EX to saturate, or does the saturation originate within another EX stage (our “first” option from before)? We can use some scope archeology to find out more.

Sadly, not today, because it’s close to 1 AM now, but the investigation would continue as follows:

- Trend the EX actuators and see when the “first kick” happens. Remember that our earliest evidence of anything is the L3 kick at 04:45:46.5 UTC, which appears on all 4 ESDs at the same time.

  - If one actuator is clearly first, this supports the claim that that specific actuator is the problem.

  - If none is clearly first, then the cause is likely something else.

- Trend OMC DCPD channels in a similar manner to EX

- Do “all of the above” for locklosses of a similar breed (yesterday’s would be a good one).

- Continue exploring “first causes” among those locklosses if nothing else yields conclusive answers.
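
The “if one actuator is clearly first / if none is first” rule in the plan above can be written as a small decision function. This is a sketch under stated assumptions: onset times are already known per channel (for instance from a threshold crossing), the stage labels in the example are hypothetical, and 1 ms is used as the minimum lead only because that is the resolution quoted for the L3 trends in this entry.

```python
def leading_channel(onsets, min_lead_s=0.001):
    """Return the channel whose onset precedes every other channel's by more
    than min_lead_s, or None if no channel is clearly first.

    onsets maps a channel name to its onset time in seconds. A None result
    is the "likely something else" case from the plan above.
    """
    if len(onsets) < 2:
        raise ValueError("need at least two channels to compare")
    ordered = sorted(onsets.items(), key=lambda item: item[1])
    (first, t_first), (_, t_second) = ordered[0], ordered[1]
    return first if t_second - t_first > min_lead_s else None


# Hypothetical examples: identical onsets -> no clear leader;
# one stage 0.3 s ahead of the rest -> that stage is flagged.
print(leading_channel({"L1": 46.5, "L2": 46.5, "L3": 46.5}))  # None
print(leading_channel({"M0": 46.2, "L1": 46.5, "L3": 46.5}))  # M0
```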

I will continue investigating this in tomorrow’s shift.

P.S. If my reasoning is circular or I’m missing something big, then burst my bubble as slowly as possible so I can maximize the learning (and minimize the embarrassment).

LOG:

None