Vicky, Naoki, Sheila, Daniel

Details of homodyne measurement:

This morning Daniel and Vicky reverted the cable change so that we could lock the local oscillator (LO) loop on the homodyne (undoing the change described in 69013). Vicky then locked the OPO on the seed using the dither lock, and increased the power into the seed fiber to 75 mW (it can't go above 100 mW, for the safety of the fiber switch). We then reduced the LO power so that the seed and LO powers were matched on PDA, and adjusted the alignment of the sqz path to get good visibility (~97%) measured on PDA. We removed the half-wave plate (HWP) from the seed path without adjusting its rotation. With it removed, we checked the visibility on PDB and saw that the powers on the two PDs were imbalanced.
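The seed and LO powers are matched before reading off the visibility because a power mismatch lowers the fringe contrast on its own. Below is a minimal sketch of the fringe visibility V = (Pmax - Pmin)/(Pmax + Pmin) for two beams with powers P1 and P2 and amplitude mode overlap between 0 and 1. It assumes ideal interference and ignores polarization. The function names and example powers are hypothetical. With matched powers, V equals the mode overlap.

```python
import math


def fringe_extrema(p_lo: float, p_seed: float, overlap: float) -> tuple[float, float]:
    """Max and min PD power while the relative phase is scanned.

    `overlap` is the amplitude mode overlap (0..1); polarization is ignored.
    """
    cross = 2.0 * math.sqrt(p_lo * p_seed) * overlap
    return p_lo + p_seed + cross, p_lo + p_seed - cross


def fringe_visibility(p_max: float, p_min: float) -> float:
    """V = (Pmax - Pmin) / (Pmax + Pmin)."""
    total = p_max + p_min
    if total <= 0 or p_max < p_min:
        raise ValueError("need p_max >= p_min and positive total power")
    return (p_max - p_min) / total


# Matched powers: the visibility equals the mode overlap.
print(fringe_visibility(*fringe_extrema(1.0, 1.0, 0.97)))
# A 4:1 power mismatch caps the visibility at 0.8 even with perfect overlap.
print(fringe_visibility(*fringe_extrema(1.0, 4.0, 1.0)))
```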

Polarization issue (revisiting the polarization of sqz beam, same conclusion as previous work):

There is a PBS in the LO path close to the homodyne, so we believe that the LO polarization should be horizontal at the homodyne beamsplitter. The LO power on the two PDs is balanced (imbalanced by only 0.4%), so we believe the beamsplitter angle was already set correctly for p-polarized light as we found it, and there is no need to adjust it. However, when we switched to the seed beam, there was a 10% difference between the powers on the two PDs without the half-wave plate in the path. We put the half-wave plate back, and the powers were balanced again (with the HWP angle as we found it). We believe this means that the polarization of the sqz path is not horizontal when it arrives at the homodyne, and that the half-wave plate restores it to horizontal. If the polarization rotation happens on SQZT7, the half-wave plate should be able to mitigate the problem; if it happens in HAM7, it will look like a loss for squeezing in the IFO. Vicky re-adjusted the alignment of the sqz path after we put the HWP back in, because the HWP slightly shifts the alignment. After this, the visibility measured on PDA is 95.7% (efficiency of 91.6%) and on PDB it is 96.9% (efficiency of 93.9%).
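The efficiencies in parentheses follow from the usual mode-matching relation, efficiency = V², which assumes the LO and signal powers were matched when the visibility was measured. Here is a minimal check with the numbers above (the helper name is hypothetical):

```python
def mode_matching_efficiency(visibility: float) -> float:
    """Homodyne mode-matching efficiency, eta = V**2 (assumes matched beam powers)."""
    if not 0.0 <= visibility <= 1.0:
        raise ValueError("visibility must be between 0 and 1")
    return visibility ** 2


for pd, vis in (("PDA", 0.957), ("PDB", 0.969)):
    print(f"{pd}: visibility {vis:.1%} -> efficiency {mode_matching_efficiency(vis):.1%}")
```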

SQZ measurements, unclipping:

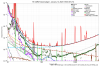

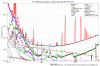

While the IFO was relocking, Vicky and Naoki measured SQZ, shot noise (SN), ASQZ and mean SQZ on the homodyne, and found 4.46 dB sqz, 10.4 dB mean sqz and 13.14 dB anti-sqz in the 500-550 Hz band. Vicky then checked for clipping and saw some evidence of a small amount (order 1% clipping with a 10 urad yaw dither on ZM2). We went to the table to check that the problem wasn't in the path to the IR PD and camera. We adjusted the angle of the 50/50 beamsplitter that sends light to the camera, and set the camera angle to be more normal to the PD path. This improved the image quality on the camera. Vicky moved ZM3 to slightly reduce the clipping seen by the IR PD. She restored good visibility by maximizing the ADF, and also adjusted both PSAMs, moving ZM4 from 100 V to 95 V (we use different PSAMs settings for the homodyne than for the IFO). After this, she re-measured sqz at 800-850 Hz: 5.2 dB sqz, 13.6 dB anti-sqz, and 10.6 dB mean sqz.
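Squeezing levels are quoted in dB relative to shot noise. If we assume the mean sqz measurement samples the squeeze angle uniformly, it should equal the average of the linear sqz and anti-sqz variances. That gives a quick consistency check on the numbers above. The sketch below uses hypothetical function names and ignores dark noise:

```python
import math


def db_to_variance(db: float) -> float:
    """Noise variance relative to shot noise (0 dB = shot noise)."""
    return 10.0 ** (db / 10.0)


def variance_to_db(v: float) -> float:
    return 10.0 * math.log10(v)


def expected_mean_sqz_db(sqz_db: float, asqz_db: float) -> float:
    """Phase-averaged noise in dB, assuming a uniformly sampled squeeze angle."""
    v_sqz = db_to_variance(-sqz_db)  # sqz is quoted as dB *below* shot noise
    v_asqz = db_to_variance(asqz_db)
    return variance_to_db(0.5 * (v_sqz + v_asqz))


# 800-850 Hz measurement: 5.2 dB sqz, 13.6 dB anti-sqz (measured mean sqz: 10.6 dB)
print(f"{expected_mean_sqz_db(5.2, 13.6):.2f} dB")
```

For the 800-850 Hz measurement this gives about 10.65 dB, close to the measured 10.6 dB mean sqz.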

Using the nonlinear gain of 11 (Naoki and Vicky checked its calibration yesterday) and the equations from Aoki, this sqz/asqz level implies a total efficiency of 0.72 without phase noise, and the mean sqz measurement implies a total efficiency of 0.704. From the sqz loss spreadsheet we have 6.13% known HAM7 losses. If we also use the lower visibility (measured on PDA), we should have a total efficiency for the homodyne of 0.916*0.9387 = 0.86. We would therefore infer an extra 16-18% loss from these homodyne measurements, which seems too large to be explained by homodyne PD QE and optics losses in the path. Since we believe the polarization issue is reflected in the visibility, these are extra losses in addition to any losses the IFO sees due to the polarization issue.
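The efficiency inference can be sketched with the OPO squeezing formulas commonly attributed to Aoki et al. They are evaluated well below the OPO linewidth and with no phase noise:

- V_asqz = 1 + eta·4x/(1-x)²
- V_sqz = 1 - eta·4x/(1+x)²

Here x = sqrt(P/P_th), and the nonlinear gain is taken as g = 1/(1-x)². The low-frequency limit and this gain-to-x relation are our assumptions, and the function names are hypothetical. The mean sqz is treated as the phase average (V_sqz + V_asqz)/2.

```python
import math


def pump_parameter(gain: float) -> float:
    """x = sqrt(P/P_th), assuming nonlinear gain g = 1 / (1 - x)**2."""
    if gain < 1:
        raise ValueError("nonlinear gain must be >= 1")
    return 1.0 - 1.0 / math.sqrt(gain)


def _terms(gain: float) -> tuple[float, float]:
    x = pump_parameter(gain)
    asqz_term = 4 * x / (1 - x) ** 2  # anti-squeezing coefficient, Omega << gamma
    sqz_term = 4 * x / (1 + x) ** 2   # squeezing coefficient, Omega << gamma
    return asqz_term, sqz_term


def eta_from_sqz(sqz_db: float, gain: float) -> float:
    _, b = _terms(gain)
    return (1 - 10 ** (-sqz_db / 10)) / b


def eta_from_asqz(asqz_db: float, gain: float) -> float:
    a, _ = _terms(gain)
    return (10 ** (asqz_db / 10) - 1) / a


def eta_from_mean(mean_db: float, gain: float) -> float:
    # Phase-averaged variance is 1 + eta * (a - b) / 2.
    a, b = _terms(gain)
    return 2 * (10 ** (mean_db / 10) - 1) / (a - b)


GAIN = 11.0
HAM7_LOSS = 0.0613    # known HAM7 loss from the sqz loss spreadsheet
VIS_EFF_PDA = 0.916   # efficiency from the lower (PDA) visibility

expected = (1 - HAM7_LOSS) * VIS_EFF_PDA
print(f"expected homodyne efficiency: {expected:.3f}")
for label, eta in (("sqz", eta_from_sqz(5.2, GAIN)),
                   ("asqz", eta_from_asqz(13.6, GAIN)),
                   ("mean sqz", eta_from_mean(10.6, GAIN))):
    print(f"{label:8s}: eta = {eta:.3f}, implied extra loss = {1 - eta / expected:.1%}")
```

With g = 11 this gives roughly 0.71-0.72 from the sqz and anti-sqz levels separately and about 0.70 from the mean sqz, against an expected 0.86. That is roughly 16-18% extra loss, in line with the numbers above.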

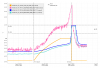

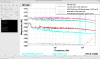

The screenshot from Vicky shows the measurement, including the dark noise.