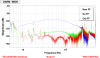

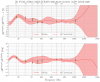

Inspired by Valera's alog demonstrating the improvement in the LLO sensitivity from O3b to O4a (LLO:66948), I have made a similar plot.

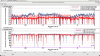

I chose a time in February 2020 for the O3b reference. I'm not aware of a good time without calibration lines, so I used the CALIB_STRAIN traces from both times. Our range got a new bump up today, due either to today's commissioning work or to the EY chiller work (72414). Note: the range I am quoting is the GDS CALIB_STRAIN range!

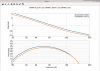

I am adding a second plot showing O3a to O4a as well, using a reference time from May 2019.

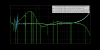

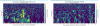

A significant amount of work went into this improvement. I will try to list what I can recall:

- ITMY replacement in 2021 to remove a large point absorber

- Removal of septum window, new output Faraday isolator, new OM2 TSAMS

- Addition of filter cavity for frequency-dependent squeezing: squeezing levels around 3.7 dB (or more)

- Increase in circulating arm power from ~200 kW to 375 kW

- Commissioning of camera servos: no more 20 Hz dither lines

- Reduction of ASC loop bandwidths, commissioning of HAM1 feedforward

- Reduction of LSC loop bandwidths, improvement of LSC feedforward

- Improvement of HAUX, HSTS and HLTS damping loops, reduced injection of BOSEM noise

- Damping of various baffles

- HAM1 table work to clamp RM blade springs, power attenuation on POP and REFL to allow power up

- Optimized test mass ESD biases to minimize electromagnetic interference coupling, improved grounding at end stations and corner to reduce ground loop noise

- Ring heater and CO2 laser commissioning to reduce frequency noise coupling and contrast defect
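
For a rough sense of scale, two of the numbers in the list above can be turned into amplitude ratios. This is only a back-of-the-envelope sketch under idealized assumptions that are mine, not measurements from the plots: it treats 3.7 dB as a pure reduction in noise amplitude (20 log10 convention), and it assumes the shot-noise-limited ASD scales as the inverse square root of arm power. It ignores losses, radiation pressure, and every other noise source, so it applies at best to the shot-noise-limited part of the band. The function names are hypothetical helpers.

```python
import math


def db_to_amplitude_ratio(db):
    """Convert a noise reduction in dB to an amplitude (ASD) ratio.

    Uses the 20*log10 amplitude convention, so 6 dB is about a factor of 2.
    """
    return 10 ** (db / 20.0)


def shot_noise_gain_from_power(p_old_kw, p_new_kw):
    """Idealized shot-noise ASD improvement for a change in arm power.

    Assumption: shot-noise-limited ASD scales as 1/sqrt(power).
    """
    return math.sqrt(p_new_kw / p_old_kw)


# Values quoted in the list above (the 200 kW figure is approximate).
squeeze_factor = db_to_amplitude_ratio(3.7)
power_factor = shot_noise_gain_from_power(200.0, 375.0)

# If the two effects were independent and ideal, they would multiply.
combined = squeeze_factor * power_factor

print(f"squeezing: x{squeeze_factor:.2f} in ASD")
print(f"arm power: x{power_factor:.2f} in ASD")
print(f"combined (idealized): x{combined:.2f} in ASD")
```

Under these assumptions the squeezing and the power increase are each worth a factor of very roughly 1.5 and 1.4 in shot-noise-limited ASD. The real improvement at any given frequency depends on the other items in the list.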