Follow-up on previous tests (72106)

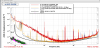

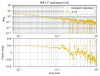

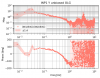

First I injected noise on SR2_M1_DAMP_P and SR2_M1_DAMP_L to measure their transfer functions to SRCL. The two transfer functions have different shapes, so their ratio is not constant in frequency. Therefore we probably can't cancel the coupling of SR2_DAMP_P to SRCL by rebalancing the driving matrix, although I haven't yet thought carefully about whether these transfer functions need some loop correction. What I measured and plotted are the DAMP_*_OUT to SRCL_OUT transfer functions. It might still be worth trying to change the P driving matrix while monitoring a P line, to minimize its coupling to SRCL.
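A minimal sketch of the comparison described above, assuming the excitation (DAMP_*_OUT) and SRCL_OUT time series are already available as NumPy arrays at a common sample rate. It uses the standard H1 estimator (cross spectral density divided by the input power spectral density) from SciPy; the helper names and the synthetic "plants" in the demo are hypothetical, and, like the measurement above, no loop correction is applied. A max/min spread of |H_P/H_L| close to 1 across the excited band would indicate a frequency-independent ratio that a single driving-matrix coefficient could cancel.

```python
import numpy as np
from scipy import signal


def transfer_function(x, y, fs, nperseg):
    """H1 estimate of the x -> y transfer function: CSD(x, y) / PSD(x).

    scipy.signal.csd computes conj(X) * Y, so Pxy / Pxx is the response
    from input x to output y.
    """
    f, pxy = signal.csd(x, y, fs=fs, nperseg=nperseg)
    _, pxx = signal.welch(x, fs=fs, nperseg=nperseg)
    return f, pxy / pxx


def ratio_spread(f, h_p, h_l, fmin=1.0, fmax=10.0):
    """Complex ratio h_p / h_l in [fmin, fmax] and the max/min of its magnitude."""
    band = (f >= fmin) & (f <= fmax)
    ratio = h_p[band] / h_l[band]
    mag = np.abs(ratio)
    return ratio, mag.max() / mag.min()


# Demo with synthetic data (hypothetical plants, not the real SR2 couplings):
# P couples to "SRCL" as a flat gain, L through a first-order 3 Hz low-pass.
rng = np.random.default_rng(0)
fs = 256
n = fs * 200
exc_p = rng.standard_normal(n)
exc_l = rng.standard_normal(n)
b, a = signal.butter(1, 3.0, fs=fs)
srcl_p = 0.5 * exc_p + 0.01 * rng.standard_normal(n)
srcl_l = signal.lfilter(b, a, exc_l) + 0.01 * rng.standard_normal(n)

f, h_p = transfer_function(exc_p, srcl_p, fs, nperseg=fs * 10)
_, h_l = transfer_function(exc_l, srcl_l, fs, nperseg=fs * 10)
ratio, spread = ratio_spread(f, h_p, h_l)
print(f"max/min |H_P/H_L| in 1-10 Hz: {spread:.2f}")
```

Because the two synthetic transfer functions have different shapes, the spread comes out well above 1, which is the situation the measurement above suggests for SR2.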

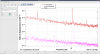

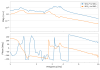

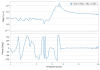

Then I reduced the damping gains for SR2 and SR3 even further. We are now running with SR2_M1_DAMP_*_GAIN = -0.1 (previously -0.5 for all DOFs except P, which was -0.2 after I reduced it yesterday) and SR3_M1_DAMP_*_GAIN = -0.2 (previously -1). This greatly reduced the SRCL motion and also improved the DARM RMS. It also appears to have improved the range.
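One common way to quantify an RMS improvement in DARM or SRCL is the reverse-cumulative RMS computed from a one-sided PSD, so that a before/after comparison shows which frequency band the reduction comes from. The sketch below is a generic illustration and not necessarily how the RMS in the plots above was computed; the white-noise demo only checks that the total RMS recovers the standard deviation.

```python
import numpy as np
from scipy import signal


def cumulative_rms(f, psd):
    """Reverse-cumulative RMS: RMS contributed by all frequencies >= f[i].

    psd is a one-sided power spectral density (units^2/Hz) on the grid f.
    Integrates from the high-frequency end downwards (trapezoid rule).
    """
    df = np.diff(f)
    seg = 0.5 * (psd[1:] + psd[:-1]) * df  # power between f[i] and f[i+1]
    power_above = np.concatenate([np.cumsum(seg[::-1])[::-1], [0.0]])
    return np.sqrt(power_above)


# Demo: for unit-variance white noise the total RMS should be close to 1.
rng = np.random.default_rng(1)
x = rng.standard_normal(100_000)
f_w, psd_w = signal.welch(x, fs=256.0, nperseg=1024)
rms_w = cumulative_rms(f_w, psd_w)
print(rms_w[0], np.std(x))
```

Comparing `cumulative_rms` curves from a time before and after the gain change would show where in frequency the RMS reduction happens.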

Tony has accepted this new configuration in SDF.

Detailed log below for future reference.

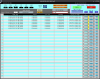

Time with SR2 P gain at -0.2 (the gain was also at -0.2 before this window)

from PDT: 2023-08-10 08:52:40.466492 PDT

UTC: 2023-08-10 15:52:40.466492 UTC

GPS: 1375717978.466492

to PDT: 2023-08-10 09:00:06.986101 PDT

UTC: 2023-08-10 16:00:06.986101 UTC

GPS: 1375718424.986101
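The PDT, UTC and GPS values logged for each window can be cross-checked with a few lines of standard-library Python. This sketch assumes a fixed GPS-UTC offset of 18 leap seconds, which has applied since 2017-01-01, so it is not valid for earlier dates; it also treats PDT as a fixed UTC-7 offset rather than using a time-zone database.

```python
from datetime import datetime, timedelta, timezone

GPS_EPOCH = datetime(1980, 1, 6, tzinfo=timezone.utc)
# Assumption: GPS - UTC = 18 s (valid from 2017-01-01 onward only;
# no leap-second table is used here).
LEAP_SECONDS = 18
# Assumption: fixed UTC-7 offset for Pacific Daylight Time.
PDT = timezone(timedelta(hours=-7), "PDT")


def utc_to_gps(dt):
    """Convert a timezone-aware datetime to GPS seconds."""
    return (dt - GPS_EPOCH).total_seconds() + LEAP_SECONDS


def gps_to_utc(gps):
    """Convert GPS seconds to a UTC datetime."""
    return GPS_EPOCH + timedelta(seconds=gps - LEAP_SECONDS)


start = datetime(2023, 8, 10, 8, 52, 40, 466492, tzinfo=PDT)
print(utc_to_gps(start))  # matches the logged GPS start to float precision
print(gps_to_utc(1375718424.986101))
```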

H1:SUS-SR2_M1_DAMP_P_EXC butter("BandPass",4,1,10) ampl 2

from PDT: 2023-08-10 09:07:18.701326 PDT

UTC: 2023-08-10 16:07:18.701326 UTC

GPS: 1375718856.701326

to PDT: 2023-08-10 09:10:48.310499 PDT

UTC: 2023-08-10 16:10:48.310499 UTC

GPS: 1375719066.310499

H1:SUS-SR2_M1_DAMP_L_EXC butter("BandPass",4,1,10) ampl 0.2

from PDT: 2023-08-10 09:13:48.039178 PDT

UTC: 2023-08-10 16:13:48.039178 UTC

GPS: 1375719246.039178

to PDT: 2023-08-10 09:17:08.657970 PDT

UTC: 2023-08-10 16:17:08.657970 UTC

GPS: 1375719446.657970
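Both excitations above were band-limited noise shaped by the Foton filter butter("BandPass",4,1,10), a Butterworth bandpass from 1 to 10 Hz. A rough SciPy equivalent for offline simulation is sketched below, under two assumptions: SciPy's `butter(4, ..., btype="bandpass")` produces an 8-pole filter, and whether that matches Foton's order convention is not checked here; and `ampl` is treated simply as a scale factor on unit-variance white noise, which may not match how the excitation tool normalizes its amplitude.

```python
import numpy as np
from scipy import signal


def bandlimited_noise(n, fs, f_lo=1.0, f_hi=10.0, order=4, ampl=1.0, seed=0):
    """White Gaussian noise scaled by ampl, then Butterworth-bandpassed.

    Hypothetical stand-in for the injected excitation, not the actual
    excitation-tool implementation.
    """
    rng = np.random.default_rng(seed)
    white = ampl * rng.standard_normal(n)
    sos = signal.butter(order, [f_lo, f_hi], btype="bandpass", fs=fs, output="sos")
    return signal.sosfilt(sos, white)


fs = 256.0
x = bandlimited_noise(int(fs * 200), fs, ampl=2.0)
f, psd = signal.welch(x, fs=fs, nperseg=int(fs * 4))
in_band_fraction = psd[(f >= 1) & (f <= 10)].sum() / psd.sum()
print(f"fraction of power in 1-10 Hz: {in_band_fraction:.2f}")
```

Second-order sections (`output="sos"`) are used instead of transfer-function coefficients because they are numerically safer for an 8-pole filter with a low-frequency edge.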

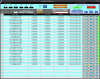

All SR2 damping at -0.2, all SR3 damping at -0.5

start PDT: 2023-08-10 09:31:47.701973 PDT

UTC: 2023-08-10 16:31:47.701973 UTC

GPS: 1375720325.701973

to PDT: 2023-08-10 09:37:34.801318 PDT

UTC: 2023-08-10 16:37:34.801318 UTC

GPS: 1375720672.801318

All SR2 damping at -0.2, all SR3 damping at -0.2

start PDT: 2023-08-10 09:38:42.830657 PDT

UTC: 2023-08-10 16:38:42.830657 UTC

GPS: 1375720740.830657

to PDT: 2023-08-10 09:43:58.578103 PDT

UTC: 2023-08-10 16:43:58.578103 UTC

GPS: 1375721056.578103

All SR2 damping at -0.1, all SR3 damping at -0.2

start PDT: 2023-08-10 09:45:38.009515 PDT

UTC: 2023-08-10 16:45:38.009515 UTC

GPS: 1375721156.009515
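For later analysis, the time windows logged above can be kept as structured data. This is a hypothetical helper: the labels are paraphrases of the notes above, and because no end time was logged for the last configuration, that window is treated as open-ended. The windows can then be passed to whatever data-access tool is in use.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Window:
    label: str
    gps_start: float
    gps_end: Optional[float]  # None: end time not logged

    def duration(self):
        return None if self.gps_end is None else self.gps_end - self.gps_start


WINDOWS = [
    Window("SR2 P damp -0.2 (reference)", 1375717978.466492, 1375718424.986101),
    Window("SR2 DAMP_P_EXC 1-10 Hz, ampl 2", 1375718856.701326, 1375719066.310499),
    Window("SR2 DAMP_L_EXC 1-10 Hz, ampl 0.2", 1375719246.039178, 1375719446.657970),
    Window("SR2 -0.2, SR3 -0.5", 1375720325.701973, 1375720672.801318),
    Window("SR2 -0.2, SR3 -0.2", 1375720740.830657, 1375721056.578103),
    Window("SR2 -0.1, SR3 -0.2", 1375721156.009515, None),
]


def active_window(gps):
    """Return the logged window containing gps, or None if it falls in a gap."""
    for w in WINDOWS:
        end = float("inf") if w.gps_end is None else w.gps_end
        if w.gps_start <= gps < end:
            return w
    return None


for w in WINDOWS:
    print(w.label, w.duration())
```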