Sheila, Ryan S, Tony, Oli, Elenna, Camilla, TJ ...

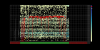

Based on Derek's analysis in 86848, Sheila suspected that the ISS second loop was causing the glitches (see attached). Comparing the ISS second loop turning on in a normal lock with this low-range, glitchy lock (attached), it is slightly more glitchy in this lock.

The IMC OLG was checked, see 86852.

Sheila and Ryan unlocked the ISS 2nd loop at 16:55 UTC. This did not cause a lockloss, although Sheila found that the IMC WFS sensors saw an unexpected shift in alignment.

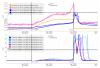

See the attached spectra of IMC_WFS_A_DC_YAW and LSC_REFL_SERVO (identified in Derek's search); red is with the PSL 2nd loop ON, blue is OFF. There are no large differences, so the ISS 2nd loop may not be to blame.

We lost lock at 17:42 UTC. The cause is unknown, but the buildups started decreasing 3 minutes before the lockloss. It could have been caused by Sheila changing the IMC PZT alignment.

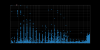

Sheila found that the IMC REFL DC channels glitch whether the IMC is locked or unlocked (plot attached). However, this also appears to have been the case before the power outage.

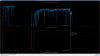

Once we unlocked, Oli put the IMC PZTs back to their positions from before the power outage. Attached is what the IMC cameras look like when locked at 2W, after the WFS had converged for a few minutes.