Comparing the last two calibration reports, we noticed a significant change in the systematic error (87295). The cause is not immediately obvious. There are two problems we are currently aware of: there is test mass charge, with kappa TST up by more than 3%, and we have lost another 1% of optical gain since the power outage.

Another possible source is a change in the SRC detuning (it's not clear how this could happen, but it might).

Today, I tried to correct some of these issues.

Correcting actuation:

This is pretty straightforward. I measured the DARM OLG and adjusted the L3 DRIVEALIGN gain to bring the UGF back to about 70 Hz and kappa TST back to 1. This required about a 3.5% reduction in the DRIVEALIGN gain, from 88.285 to 85.21. I confirmed that this did the right thing by comparing the DARM OLG to an old reference and watching kappa TST. I updated the guardian with the new gain, SDFed, and changed the standard calibration pydarm ini file to use the new gain. I also remembered to update this gain in the CAL CS model.
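As a quick check of the gain numbers above, here is a short Python sketch. It assumes (our assumption, not something measured here) that kappa TST scales linearly with the L3 DRIVEALIGN gain, so the gain that brings kappa TST back to 1 is the old gain divided by the measured kappa TST. `rescale_drivealign_gain` is a hypothetical helper, not part of pydarm or guardian.

```python
def rescale_drivealign_gain(old_gain: float, kappa_tst: float) -> float:
    """Hypothetical helper: gain that brings kappa TST back to 1.

    Assumes kappa TST is proportional to the L3 DRIVEALIGN gain.
    """
    if kappa_tst <= 0:
        raise ValueError("kappa_tst must be positive")
    return old_gain / kappa_tst


old_gain = 88.285  # L3 DRIVEALIGN gain before the change
new_gain = 85.21   # L3 DRIVEALIGN gain after the change

# Fractional change in the gain (negative means a reduction).
fractional_change = (new_gain - old_gain) / old_gain
# kappa TST that this change would correct, under the linear assumption.
implied_kappa_tst = old_gain / new_gain

print(f"gain change: {fractional_change:.2%}")        # gain change: -3.48%
print(f"implied kappa TST: {implied_kappa_tst:.4f}")  # implied kappa TST: 1.0361
```

Under that linear assumption, the change corresponds to correcting a kappa TST of about 1.036, which is consistent with kappa TST being up by more than 3%.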

Correcting sensing:

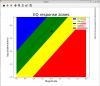

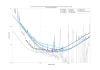

Next, Camilla took a SQZ data set using FIS at different SRCL offsets (87387). We did the usual three SRCL offset steps, but the results confused us, so we added a fourth step at -450 ct. Part of this measurement requires us to estimate how much SRCL detuning each step has, so we spent a while iterating to make our gwinc fit match the data in the plots above. I'm still not sure we did it right; the mismatch could also come from something else wrong with the model. The linear fit suggests we need an offset of about -435 ct, and we changed the offset accordingly.
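The last step, picking an offset from a linear fit, can be sketched as below. We assume here that the fit is a straight line of estimated SRC detuning versus SRCL offset (in ct) and that the target is the offset where the fitted detuning crosses zero; the function names are hypothetical, and this is not the actual analysis code behind 87387. It uses an ordinary least-squares line in plain Python.

```python
from typing import Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit y = slope * x + intercept."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    if sxx == 0:
        raise ValueError("offsets must not all be equal")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def zero_detuning_offset(offsets_ct: Sequence[float],
                         detunings: Sequence[float]) -> float:
    """Hypothetical helper: SRCL offset (ct) where the fitted detuning is zero."""
    slope, intercept = fit_line(offsets_ct, detunings)
    if slope == 0:
        raise ValueError("flat fit: no zero crossing")
    return -intercept / slope
```

To use it, pass the offset steps (the usual three plus the extra -450 ct point) together with the detuning estimated for each step from the gwinc fit. Adding a fourth point helps here because a least-squares line through only three points gives little leverage against one bad detuning estimate.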

Checking the result:

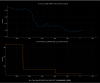

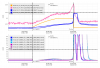

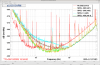

After these changes, Corey ran the usual calibration sweep. The broadband comparison showed some improvement in the calibration, but the calibration report showed a significant spring in the sensing function. To see how this compares with previous measurements, I plotted the last three sensing function measurements.
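For context, the "spring" is the low-frequency optical-spring feature in the DARM sensing function. A commonly used parameterization (assumed here; sign conventions for the spring term vary between references, and pydarm's own implementation may differ) multiplies a single-pole coupled-cavity response by a spring factor, C(f) = H_C / (1 + i f/f_cc) * f^2 / (f^2 + f_s^2 - i f f_s / Q). The sketch below evaluates that form and prints the ratio with and without the spring; all parameter values are hypothetical placeholders, not H1 values.

```python
import cmath
import math


def sensing_function(f: float, h_c: float, f_cc: float, f_s: float, q: float) -> complex:
    """Assumed sensing model: coupled-cavity pole times an optical-spring factor.

    C(f) = h_c / (1 + i f / f_cc) * f**2 / (f**2 + f_s**2 - i f f_s / q)
    With f_s = 0 the spring factor is exactly 1 (no spring).
    """
    cavity_pole = h_c / (1 + 1j * f / f_cc)
    spring = f**2 / (f**2 + f_s**2 - 1j * f * f_s / q)
    return cavity_pole * spring


# Hypothetical parameters, chosen only to illustrate the shape of the response.
H_C, F_CC, F_S, Q = 1.0, 400.0, 5.0, 10.0

for f in (2.0, 5.0, 10.0, 20.0, 100.0):
    with_spring = sensing_function(f, H_C, F_CC, F_S, Q)
    no_spring = sensing_function(f, H_C, F_CC, 0.0, Q)
    ratio = with_spring / no_spring  # isolates the spring factor
    print(f"{f:6.1f} Hz  |ratio| = {abs(ratio):.3f}  "
          f"phase = {math.degrees(cmath.phase(ratio)):.1f} deg")
```

Well above f_s the ratio approaches 1, while below f_s the magnitude falls roughly as (f/f_s)^2, which is why a larger spring shows up as a low-frequency deviation in the sensing function.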

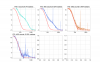

On 9/27, the calibration was still within 1% uncertainty. On 10/4, the calibration uncertainty had increased. Today, we changed the SRCL offset following our SQZ measurement. This plot compares those three times and includes the digital SRCL offset engaged at each time. I also took the ratio of each measurement to the 9/27 measurement to highlight the differences. It seems that the difference between the 9/27 and 10/4 calibrations is not due, in any large part, to a change in the sensing. And the new SRCL offset clearly gives the sensing function an even larger spring than before.
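Taking the ratio against a reference measurement, as done here with the 9/27 data, amounts to dividing the complex transfer functions point by point at common frequencies. A minimal sketch, assuming each measurement is stored as a hypothetical mapping from frequency (Hz) to complex sensing-function value; this is not the pydarm API.

```python
import cmath
import math
from typing import Dict, List, Tuple

Measurement = Dict[float, complex]  # hypothetical: frequency [Hz] -> complex response


def ratio_to_reference(meas: Measurement,
                       ref: Measurement) -> List[Tuple[float, float, float]]:
    """Return (frequency, |meas/ref|, phase in degrees) at frequencies present in both."""
    common = sorted(set(meas) & set(ref))
    if not common:
        raise ValueError("no common frequencies")
    out = []
    for f in common:
        if ref[f] == 0:
            raise ZeroDivisionError(f"reference is zero at {f} Hz")
        r = meas[f] / ref[f]
        out.append((f, abs(r), math.degrees(cmath.phase(r))))
    return out
```

A ratio of magnitude 1 and phase 0 means no change from the reference. This sketch requires the frequencies to match exactly; sweeps taken at slightly different frequencies would need interpolation, which it does not do.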

Therefore, I concluded that this offset change was bad, and I reverted it. Unfortunately, it's too late today to get a new measurement. Since we have changed parameters, we need a calibration measurement before we can generate a new model to push; hopefully we can get a good one this Saturday. Whatever has changed about the calibration, I don't think it comes from the SRCL offset. Also, correcting the L3 actuation strength was useful, but it doesn't account for the discrepancy we are seeing.