jeffrey.kissel@LIGO.ORG - posted 17:34, Tuesday 04 February 2020 - last comment - 13:02, Monday 10 February 2020(54907)

2020-01-03 Calibration Model Uncertainty Budget Update: First Results Finally Complete, but They Don't Agree with Final Answer Cross-Checks

J. Kissel

I've been working for about a month now on assembling the uncertainty budget for what I hope will be the only O3B DARM loop "calibration" model parameter set,

/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/O3/H1/params/

modelparams_H1_20200103.py rev 9128

installed into the online CAL-CS pipeline on 2020-01-13 (see LHO aLOGs 54473 and 54476).

I've reached a point where I'm stumped: despite all of the hard work organizing and culminating the huge collection of measurements out there, and understanding/accounting for each measurement's idiosyncrasies, the "final answer" uncertainty budget does not agree with the "final answer" PCAL/GDS transfer function. The level of disagreement is shown in the final attachment, 2020-02-04_H1_PCAL2GDS_BB_meas1262990871_on_2020-01-13_vs_model_uncertainty_for_2020-01-03.pdf.
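One way to put a number on "disagrees statistically significantly" is to express each measured point of the PCAL/GDS transfer function as a distance from the budget's median in units of its one-sided 1-sigma width; for a budget that is right, roughly 32% of points should fall outside +/- 1. The sketch below assumes the budget is summarized by median and 16th/84th-percentile curves evaluated at the measurement frequencies (magnitude or phase, one at a time). The function names are hypothetical, this is not how the attached plots were made, and since neighboring frequency points are correlated the fraction is only a rough indicator.

```python
import numpy as np

def normalized_residuals(meas, median, lower, upper):
    """Signed distance of each measured point from the budget median, in units of
    the one-sided 1-sigma width on whichever side of the median the point falls."""
    meas, median = np.asarray(meas, float), np.asarray(median, float)
    lower, upper = np.asarray(lower, float), np.asarray(upper, float)
    above = meas >= median
    width = np.where(above, upper - median, median - lower)
    return (meas - median) / width

def fraction_outside(meas, median, lower, upper):
    """Fraction of points lying outside the 1-sigma envelope (~0.32 expected)."""
    z = normalized_residuals(meas, median, lower, upper)
    return np.mean(np.abs(z) > 1.0)
```

For example, feeding in |PCAL/GDS| and the |eta_R| percentile curves at the broadband frequencies gives a single number to track as the budget evolves.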

I have a couple of options for exploring what's wrong from here on, but I fear that those explorations will take me into deep rabbit holes of even more of a mess, so I'd rather show my progress thus far and work with others to understand how best to proceed from here.

Please note that:

- this budget disagrees statistically significantly with measurement, so it is likely wrong in some subtle way; it will change, and it should not yet be used for astrophysical analysis,

- this is the uncertainty budget for only the reference time (2019-12-26); it does not cover all of O3B,

- this budget is built only from data taken while the IFO was well thermalized, and it does not yet cover how "bad" the systematic error is at low frequency while the sensing function evolves as it thermalizes,

- the collection of data used to create the estimate of unknown systematic error does not include the data beyond 2020-01-20 that are now available, though I don't expect this to significantly impact the results,

- for a look at how much this estimate has changed, and/or what we're currently using for astrophysics, please see G1901479.

For a very long, detailed record of my process -- currently in text file, core dump, format -- see T2000006.

I attach a huge collection of plots to support where this uncertainty budget comes from. Eventually, when I figure out what's going on, these plots and the detailed notes will turn into a review presentation in T2000006. For now, bear with me, because I think this still very raw snapshot will become important.

I attach them in "order of operation:"

[1] Creating the uncertainty on the model parameters themselves, which comes from

[a] posterior distributions of an MCMC fit to the reference measurement for the sensing function, C

2019-12-26_H1_forreference_fmin20Hz_sensingFunction_MCMCresults.pdf

[b] posterior distributions of an MCMC fit to each stage of the actuation function, A_UIM, A_PUM, and A_TST, which form the total actuator, A

2019-04-03_H1_20200103Model_REF_UIM_actuationMeasurement.pdf

2019-04-03_H1_20200103Model_REF_PUM_actuationMeasurement.pdf

2019-04-03_H1_20200103Model_REF_TST_actuationMeasurement.pdf

[2] Assembling the large collection of data that will be used to find any residual, unknown systematic error in

[a] the frequency dependence of the model for C,

2020-02-04_GPR_Run_H1_C_allO3BMeas_collection_PCALSYSERR_nofsQcorr_MCMCfmin20Hz_GPRfmin20_lengthscaleZp35_withPCALXHFdata_meas2020-01-20_sensingFunction_referenceModel_vs_allMeasurements.pdf

[b] the frequency dependence of the models for A_UIM, A_PUM, and A_TST

2020-01-13_H1_2020-01-16_GPR_Run_PCALSYSERR_collection_UIM_actuationMeasurement_allresiduals_MCMCInput.pdf

2020-01-13_H1_2020-01-16_GPR_Run_PCALSYSERR_collection_PUM_actuationMeasurement_allresiduals_MCMCInput.pdf

2020-01-13_H1_2020-01-16_GPR_Run_PCALSYSERR_collection_TST_actuationMeasurement_allresiduals_MCMCInput.pdf

accounting for known time-dependent correction factors and the presence of known PCAL systematic error (in measurements prior to 2019-11-12)

[3] The preferred GPR fit to that processed collection of data in [2], for the estimate of the unknown, frequency dependent systematic error

[a] in C, and its associated uncertainty

2020-02-04_GPR_Run_H1_C_allO3BMeas_collection_PCALSYSERR_nofsQcorr_MCMCfmin20Hz_GPRfmin20_lengthscaleZp35_withPCALXHFdata_model2020-01-03_sensingFunction_GPR.pdf

[b] in A_UIM, A_PUM, and A_TST

2020-01-16_GPR_Run_PCALSYSERR_collection_H1_A_model2020-01-03_lastmeas2020-01-13_UIM_actuationMultiGPR_f7-250Hz_L0p1.pdf

2020-01-16_GPR_Run_PCALSYSERR_collection_H1_A_model2020-01-03_lastmeas2020-01-13_PUM_actuationMultiGPR_f7-500Hz_L0p2.pdf

2020-01-16_GPR_Run_PCALSYSERR_collection_H1_A_model2020-01-03_lastmeas2020-01-13_TST_actuationMultiGPR_f10-1000Hz_L0p5.pdf

[4] The "final answer" uncertainty budget computed for the reference time only, i.e. with the TDCFs set either to 1.0 for the scalars (\kappa_C, \kappa_UIM, etc.) or to exactly the model value for f_cc.

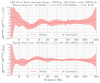

2020-02-04_O3_LHO_GPSTime_1261415486_ref_RelativeResponse1SigmaUncertainty.png

[5] The "final answer" uncertainty budget compared against two versions of measurement that should be equivalent cross-checks (PCAL through CAL-DELTAL_EXTERNAL, and PCAL through GDS-CALIB_STRAIN).

2020-02-04_H1_model20200103_deltaL_over_pcal.pdf

2020-02-04_H1_PCAL2GDS_BB_meas1262990871_on_2020-01-13_vs_model_uncertainty_for_2020-01-03.pdf
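The order of operations above amounts to a Monte Carlo: draw model parameters from the MCMC posteriors ([1]), multiply the models by draws of the GPR-estimated unknown systematic error ([3]), and form the response function R = (1 + G)/C, with open-loop gain G = A D C, for each draw ([4]). Below is a minimal sketch of that combination, assuming toy models (a single-pole sensing function and a single-stage free-mass actuator), Gaussian stand-ins for the MCMC posteriors, and frequency-independent stand-ins for the GPR draws. All parameter values and function names are hypothetical; this is not what RRNom.py does internally.

```python
import numpy as np

def sensing(f, H_C, f_cc):
    """Toy sensing function: optical gain over a single coupled-cavity pole."""
    return H_C / (1 + 1j * f / f_cc)

def actuation(f, K_A):
    """Toy single-stage free-mass actuator, proportional to 1/f^2."""
    return K_A / (1j * f) ** 2

def response(C, D, A):
    """Response function R = (1 + G) / C with open-loop gain G = A * D * C."""
    return (1 + A * D * C) / C

def mc_response_uncertainty(f, n=1000, seed=1111):
    """16th/50th/84th percentiles of |R_sample / R_nominal| and of its phase [deg]."""
    rng = np.random.default_rng(seed)
    H_C0, fcc0, K_A0 = 1.0, 410.0, 2500.0  # hypothetical, arbitrary units
    D = -1.0  # toy digital filter; sign chosen so G > 0 at low frequency here
    R_nom = response(sensing(f, H_C0, fcc0), D, actuation(f, K_A0))

    # Stand-ins for MCMC posterior samples of the model parameters, shape (n, 1)
    H_C = rng.normal(H_C0, 0.01, (n, 1))
    fcc = rng.normal(fcc0, 5.0, (n, 1))
    K_A = rng.normal(K_A0, 25.0, (n, 1))
    # Stand-ins for GPR draws of unknown systematic error (frequency-flat here)
    eta_C = 1 + 0.02 * rng.standard_normal((n, 1))
    eta_A = 1 + 0.02 * rng.standard_normal((n, 1))

    C = sensing(f, H_C, fcc) * eta_C  # broadcasts to (n, len(f))
    A = actuation(f, K_A) * eta_A
    ratio = response(C, D, A) / R_nom
    mag = np.percentile(np.abs(ratio), [16, 50, 84], axis=0)
    pha = np.percentile(np.angle(ratio, deg=True), [16, 50, 84], axis=0)
    return mag, pha
```

For example, `mag, pha = mc_response_uncertainty(np.logspace(1, 3, 50))` returns percentile curves shaped like the 1-sigma bands in [4]; with real inputs the GPR draws are frequency dependent, and that is what shapes the budget.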

In the comments below, I'll

- list the scripts that I wrote to create these plots,

- quote the command line used to produce the uncertainty estimate, and

- note the versions of the pyDARM universe that I committed to support the above scripts.


Comments related to this report

Here is the collection of scripts used to produce the above plots and analysis:

To create the sensing MCMC fit and posterior uncertainty distributions for the reference data set taken on 2019-12-26, with the 2020-01-03 model divided out of the input data,

/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/O3/H1/Scripts/FullIFOSensingTFs/

process_sensingmeas_20191226_forreference.py rev 9099

To create the actuation MCMC fit and posterior uncertainty distributions for the reference data set taken on 2019-04-03, with the 2020-01-03 model divided out of the input data,

/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/O3/H1/Scripts/FullIFOActuationTFs/

process_actuation_MCMC_model20200103_meas20190403_forreference.py rev 9160
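The MCMC step can be illustrated with a deliberately simplified sketch: a plain random-walk Metropolis sampler fitting a two-parameter sensing model (optical gain H_C and coupled-cavity pole f_cc) to complex transfer-function data above fmin = 20 Hz, mirroring the fmin20Hz choice in the reference fit. The actual sensing model includes further terms (e.g. the optical spring f_s), and the sampler, priors, and likelihood used by the scripts above are not reproduced; every name here is hypothetical.

```python
import numpy as np

def sensing(f, H_C, f_cc):
    """Toy two-parameter sensing function (single cavity pole)."""
    return H_C / (1 + 1j * f / f_cc)

def log_likelihood(theta, f, meas, sigma):
    """Gaussian log-likelihood on real and imaginary parts; flat positive priors."""
    H_C, f_cc = theta
    if H_C <= 0 or f_cc <= 0:
        return -np.inf
    resid = (meas - sensing(f, H_C, f_cc)) / sigma
    return -0.5 * np.sum(np.abs(resid) ** 2)

def metropolis(f, meas, sigma, theta0, step, n_steps=20000, burn=5000, seed=0):
    """Random-walk Metropolis; returns post-burn-in samples, shape (n, 2)."""
    rng = np.random.default_rng(seed)
    theta = np.asarray(theta0, float)
    logp = log_likelihood(theta, f, meas, sigma)
    chain = np.empty((n_steps, theta.size))
    for i in range(n_steps):
        prop = theta + step * rng.standard_normal(theta.size)
        logp_prop = log_likelihood(prop, f, meas, sigma)
        # Accept with probability min(1, p_prop / p_current)
        if np.log(rng.uniform()) < logp_prop - logp:
            theta, logp = prop, logp_prop
        chain[i] = theta
    return chain[burn:]
```

Run on synthetic data (e.g. 1% complex noise on a 5-1100 Hz log grid, keeping only f >= 20 Hz), the chain medians should land close to the injected H_C and f_cc; the spread of the chain is the posterior that the later steps sample from.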

To create the collection of data to be shoved into GPR fitting to produce the unknown systematic error for the sensing function, over data from 2019-10-31 to 2020-01-20:

(a) Run an MCMC fit over all 5-1100 Hz sweep data with

/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/O3/H1/Scripts/FullIFOSensingTFs/

process_sensingmeas_MCMC_model20200103_all.sh rev 9246

which creates

/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/O3/H1/Results/FullIFOSensingTFs/

2020-01-23_H1_20200103Model_fmin20Hz_PCALSYSERR_MCMC_TDCFs_list.txt rev 9251

(b) the results of which you must copy over to

/ligo/svncommon/CalSVN/aligocalibration/trunk/Common/pyDARM/src/

readHardcodedTDCFs_H1_O3B_20200109.py rev 9310

(c) and then ask Sudarshan to create all of the 1000-5000 Hz data from the roaming PCALX line -- where he uses methods on the cluster described in LHO aLOG 53911

(d) and then, only after understanding how to account for the PCAL systematic error that was present in data prior to 2019-11-12 (see LHO aLOG 53188), concatenate and process all the results with

/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/O3/H1/Scripts/Uncertainty/

process_allmeas_writeGPRHDF5_meas20191031-20200120_withHF_PCALSYSERR_model20200103-C.py rev 9309
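Before the GPR, each sweep is turned into a residual against the reference model, with the known time dependence divided out and the pre-2019-11-12 PCAL error handled. A minimal sketch of that bookkeeping, assuming a toy single-pole sensing model, a scalar \kappa_C and a measured f_cc per sweep, and a common frequency vector across sweeps. The size and direction of the PCAL correction (multiply vs. divide by the 1.0043 PCALY factor) is exactly what punchlist item (5) below asks others to check, so here it is only a caller-supplied, hypothetical `pcal_factor`.

```python
from datetime import date

import numpy as np

# From the text: sweeps before this date carry the known PCAL systematic error.
PCAL_CUTOFF = date(2019, 11, 12)

def sensing_model(f, H_C, f_cc):
    """Toy single-pole sensing function (hypothetical stand-in for the pyDARM model)."""
    return H_C / (1 + 1j * f / f_cc)

def residual(f, meas_C, kappa_C, f_cc_meas, H_C_ref, pcal_factor=1.0):
    """Measured C over the reference model scaled to the TDCFs at measurement time.

    kappa_C scales the reference optical gain and f_cc_meas replaces the reference
    cavity pole. pcal_factor is a hypothetical scalar PCAL correction; whether it
    should multiply or divide the measurement is an assumption left to the caller.
    """
    return meas_C * pcal_factor / sensing_model(f, kappa_C * H_C_ref, f_cc_meas)

def collect_residuals(measurements, H_C_ref, pcal_factor):
    """Stack TDCF-compensated residuals, applying pcal_factor only before the cutoff.

    Assumes every measurement shares one frequency vector (needed for vstack).
    """
    rows = []
    for m in measurements:
        factor = pcal_factor if m["date"] < PCAL_CUTOFF else 1.0
        rows.append(residual(m["f"], m["C"], m["kappa_C"], m["f_cc"], H_C_ref, factor))
    return np.vstack(rows)
```

A sweep that exactly matches the TDCF-scaled model gives a residual of 1; any remaining frequency structure in the stacked residuals is what the GPR is asked to describe.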

To create the collection of data to be shoved into GPR fitting to produce the unknown systematic error for the actuation function (only AFTER Vlad had completed his opus on updating the ETMX suspension dynamical model, e.g. LHO aLOG 54519 and G2000037):

(a) Run an MCMC fit over all low

/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/O3/H1/Scripts/FullIFOActuationTFs/

process_actuation_MCMC_model20200103_all.sh rev 9252

which creates

/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/O3/H1/Results/FullIFOActuationTFs/

2020-01-15_H1_20200103Model_PCALSYSERR_MCMC_kappa_PUM_list.txt rev 9180

2020-01-15_H1_20200103Model_PCALSYSERR_MCMC_kappa_TST_list.txt rev 9180

2020-01-15_H1_20200103Model_PCALSYSERR_MCMC_kappa_UIM_list.txt rev 9180

(b) the results from which are copied over to

/ligo/svncommon/CalSVN/aligocalibration/trunk/Common/pyDARM/src/

readHardcodedTDCFs_H1_O3B_20200109.py rev 9310

(c) and then concatenate and process all the results, again after accounting for PCAL systematic error, with

/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/O3/H1/Scripts/Uncertainty/

process_allmeas_writeGPRHDF5_PCALSYSERR_model20200103-A.py rev 9196
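The three stage models enter the total actuator A separately, each with its own scalar TDCF and its own GPR-estimated systematic error (the three per-stage actuationMultiGPR fits). A minimal sketch of that bookkeeping, assuming the stages simply add and that each stage's error enters as a multiplicative factor on that stage only; the function name is hypothetical.

```python
import numpy as np

def total_actuation(stages, kappas, eta=None):
    """Total actuator A = sum_i kappa_i * eta_i * A_i over the UIM, PUM and TST stages.

    stages: dict of stage name -> complex model response on a common frequency grid
    kappas: dict of stage name -> scalar TDCF (1.0 at the reference time)
    eta:    optional dict of stage name -> multiplicative systematic-error draw
    """
    total = 0
    for name, A_i in stages.items():
        e = 1.0 if eta is None else eta[name]
        total = total + kappas[name] * e * np.asarray(A_i)
    return total
```

Keeping the errors per stage matters because a large UIM error only hurts where the UIM still contributes appreciably to A, which is the argument made in punchlist item (3) below.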

Then, to assimilate all of the above individual uncertainties, call

/ligo/svncommon/CalSVN/aligocalibration/trunk/Common/pyDARM/

RRNom.py rev 9311

as

python3.5 /ligo/svncommon/CalSVN/aligocalibration/trunk/Common/pyDARM/RRNom.py \
  --IFO=LHO \
  --HDF5_A_MCMCresults=/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/O3/H1/Results/Uncertainty/O3B_H1_A_MCMC_20200103Model_REF.hdf5 \
  --HDF5_A_GPRresults=/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/O3/H1/Results/Uncertainty/2020-01-16_GPR_Run_PCALSYSERR_collection_O3B_H1_A_model20200103_ALL_actuationMultiGPR_UIM_f7-250Hz_L0p1_PUM_f7-500Hz_L0p2_TST_f10-1000Hz_L0p5.hdf5 \
  --HDF5_C_MCMCresults=/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/O3/H1/Results/Uncertainty/O3_H1_C_MCMC_2019-12-26_forreference_fmin20Hz.hdf5 \
  --HDF5_C_GPRresults=/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/O3/H1/Results/Uncertainty/2020-02-04_GPR_Run_H1_C_allO3BMeas_collection_PCALSYSERR_nofsQcorr_MCMCfmin20Hz_GPRfmin20_lengthscaleZp35_withPCALXHFdata_model20200103_sensingFunction_GPR_posteriors.hdf5 \
  --modelPath=/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/O3/H1/params/ \
  --modelFilename=modelparams_H1_20200103 \
  --IFOmodel=modelPars \
  --sampleNumber=1000 \
  --seed=1111 \
  --version=ref \
  --outDir=/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/O3/H1/Results/Uncertainty/ \
  --plot1SigmaUncs \
  --saveSummaries \
  --gpsTime=1261415486

and then to compare that uncertainty with measured PCAL injections through the calibrated data streams CAL-DELTAL_EXTERNAL and GDS-CALIB_STRAIN, run

/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/O3/H1/Scripts/CALCS_FE/

process_broadband_pcal2darmtf_collection_20200204.py rev 9319.

/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/O3/H1/Scripts/FullIFOSensingTFs/

plot_GDS_BB_vs_Uncertainty_20200204.py rev 9321.

Finally, I've made oodles of modifications to the pyDARM universe in order to analyze and understand all of this data, so it's important that I convey the latest versions of the pyDARM universe I used to run all of these analyses:

/ligo/svncommon/CalSVN/aligocalibration/trunk/Common/pyDARM/src/

computeDARM.py last changed rev 8399

sensing.py last changed rev 9306

actuation.py last changed rev 9195

Good luck, future me, in recreating this all. Also -- again -- there are MANY more words in at least the first three versions of T2000006, so I encourage you to look there too.

I sent the following punchlist to the calibration mailing list, but it may be easier for some to find here:

Here are my remaining problems with the budget:

(1) It is only informed by thermalized sweep data. In order to proceed beyond that, we need

(a) Evan (or me) to process the comb of calibration lines we injected during a thermalization back in Dec 2019

(b) The Kenyon team's estimate of "if we use the GDS-computed, measured value of f_s from the calibration lines to *simulate* the change in sensing function during thermalization, how bad is the response function systematic error?"

(2) As always, I don't like how we're compensating the sweep data for time dependence, but this is a long-term project, 'cause I'm confident I have no other way to do it.

(3) There's still a huge amount of systematic error in the UIM model, but I think where the error gets large in frequency, the UIM contribution has become small.

(4) I'm reasonably confident in my choices for length scale and fit frequency range of the GPR fitting, but it still irks me that it relies on my warm and fuzzy feeling, and/or my exhaustion level, to claim "good enough."

(a) These are more long-term tasks, but the Oregon Team and the Monash "Team" [Ethan] are toying around with better kernels that might make more sense for the kind of data we have.

(b) I'm still using my gut to establish the low-frequency bound of the sensing function fit at 20 Hz. I've got pending GPR .hdf5 files that I can use to show the difference in impact of using lower limits of 5, 12, 14, and 17 Hz as well as 20 Hz, just to see how the "underestimate" would impact the response function.

(5) I *think* I understand how to correct the collection of sweeps prior to 2019-11-12 for the 1.0043 PCALY systematic error, but I'd like some other folks to think about it to make sure my understanding is correct. However, I'm suspicious of how applying the correction actually *increases* the spread of measurements, even after accounting for TDCFs.

(a) I've got pending GPR .hdf5 files of GPR fits over data that (incorrectly) *don't* account for the PCAL systematic error, but these will probably just be used for demonstration.

(6) Sudarshan and I quickly walked through how the PCAL systematic error impacts the 1000-5000 Hz sensing function data he provides, but it also needs a review.

(7) I tried my best to review RRNom.py to make sure it is NOT applying any TDCFs, because I've requested the budget be made with --version=ref, i.e. at the reference time, but that code has so many messy if/else conditionals, hard-coded band-aids, and other hacks that it's really tough to follow, so I might have missed something.

(8) I *know* the processing of DELTAL_EXTERNAL sweeps and broadband injections does not account for TDCFs, but it will be a lot of work to modify the processing script and/or computeDARM to apply TDCFs to the model (and/or to just create "hack" parameter sets that have the TDCFs for a given time).

(9) At least to me, there are still mysteries surrounding the processing of GDS-CALIB_STRAIN. As I understand it thus far, there are no calibration lines on during the broadband injections, so the TDCFs applied are the "last good value" prior to the broadband injection. I'd like to understand better how / why / if we should correct for GDS-produced TDCFs during these broadband injections.
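To make the "last good value" behavior in (9) concrete, here is a minimal sketch of holding a TDCF time series at its most recent valid sample while the calibration lines are off. It assumes a per-sample boolean validity flag and illustrates only the concept as described in (9); it is not the GDS pipeline code, and the function name is hypothetical.

```python
import numpy as np

def hold_last_good(values, good):
    """Replace samples flagged bad with the most recent good value (forward fill).

    Samples before the first good value have nothing to hold and become NaN.
    """
    values = np.asarray(values, float)
    good = np.asarray(good, bool)
    # Index of each good sample, -1 elsewhere; a running max carries it forward.
    idx = np.where(good, np.arange(values.size), -1)
    np.maximum.accumulate(idx, out=idx)
    return np.where(idx >= 0, values[np.maximum(idx, 0)], np.nan)
```

During a broadband injection every sample is "bad", so the whole injection sees a single held value; any drift in the true TDCFs over that span then shows up directly as disagreement with the budget.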

Here's my ranking of what I think could be causing the discrepancy:

It's probably something to do with (5) through (8).

(1) is just not covered by any data or analysis, so it's not going to be exposed in, nor play a role in, the problems we see.

(2) may be some of the issue, but I think it results in "fattening" the uncertainty on the GPR, not in tangible differences in systematic error.

(3) is no longer important.

(4) I think only matters for the fringes, but it *may* be a problem for the PUM stage, which has the most systematic error and the largest \kappa_PUM.

This comment reviews the work done to settle on the GPR fitting parameters used for the uncertainty in unknown systematic error of the 2020-01-03 model. Those fit parameters are the frequency range over which the fit is allowed (fmin and fmax) and the radial-basis-function (RBF) length scale, l. For the final answers in the .hdf5 files called out in the RRNom.py call in the above comment, LHO aLOG 54909, I have chosen the following:

TABLE 1
          fmin [Hz]   fmax [Hz]   RBF length scale: "name" (actual prior bounds)
   C         20         5000        "0.35" (0.33, 0.37)
   UIM        7          250        "0.1"  (0.08, 0.12)
   PUM        7          500        "0.2"  (0.18, 0.22)
   TST       10         1000        "0.5"  (0.48, 0.52)

Without varying the underlying data set, *for* each data set, I varied these parameters (fmin, fmax, and length scale) until I felt warm and fuzzy. Unfortunately -- yes, you read that right -- (a) the input parameters result in wildly different answers depending on what's chosen, and (b) that choice, in truth, relies on my human feelings to establish the final set of answers and is not quantitatively deterministic.

Note that these parameters have *changed* from those used in the O3A uncertainty assessment. The parameters formerly used were

TABLE 2
          fmin [Hz]   fmax [Hz]   RBF length scale: "name" (actual prior bounds)
   C         12         5000        "0.5"  (0.48, 0.52)
   UIM        6           50        "1.0"  (0.5, 1.5)
   PUM        6          400        "0.3"  (0.1, 0.5)
   TST        6         1000        "0.3"  (0.1, 0.5)

I've settled on the new values in TABLE 1 based on *my impressions* of goodness of fit. For the frequency range, I assessed where I thought the data were actually representative of good, clear, well-stacked measurements of the same thing. More details in the following enumerated points:

(1) At low frequency for the actuator data, I increased fmin because we now know that the data in the 5-10 Hz region are still strongly influenced by detector noise and by its non-stationarity.
As such, even when using data with high coherence, the transfer function estimate is not consistent from measurement to measurement.

(2) At low frequency in the sensing function data, we are still limited by our understanding of what's going on (is it SRC detuning? is it L2A2L? is it both? is it something else entirely?). So I played around with fmin until I was confident that the 68% confidence interval covered a lot of the data. This is arguably the most arbitrary of the choices I made.

(3) For the actuator fmax values: thanks to Vlad's hard work improving the dynamical model of the suspension, I believe we can now use "much" more of the UIM and PUM data. The estimated systematic error above these frequencies is still artificially and incorrectly capped at 30%, but with our better understanding of where this systematic error contributes, I'm confident that above these frequencies even the *data* residuals [which are a *much* better representation of the *actual* systematic error] are below a safe threshold of impact on the response function.

(4) For the RBF length scales of the actuator, I wanted much tighter priors, so that I had better control over what length scale the GPR ended up using. Also, a length scale of 1.0 is just far too unphysically large for the UIM data that we are now using, which has features in the ~50-200 Hz region that *require* a short length scale for the GPR to resolve. Recall from page 36 of G1901479 that the frequency distance of correlation, \Delta f, is related to the dimensionless length scale, l, by

   \Delta f = \mp f (10^{\mp l} - 1),   i.e.   \Delta f_{below} = f (1 - 10^{-l}),   \Delta f_{above} = f (10^{+l} - 1),

so a length scale of 0.5 corresponds to correlation distances of roughly 70 Hz (below) to 200 Hz (above) at 100 Hz, whereas a length scale of 0.07 corresponds to roughly 15 Hz (below) to 17 Hz (above) at 100 Hz.
Given that (a) with our current kernel we have to choose one-and-only-one length scale over the entire frequency range, and (b) we're looking for a mix of sharp features and single, spread-apart pole/zero frequency errors, I used the TABLE 1 numbers and my gut to tell me what felt right.

I demonstrate the above exploration with the following collections of plots for the UIM and the sensing function, C, since these are our most sophisticated transfer function residuals.

For the UIM, I show

/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/O3/H1/Results/Uncertainty/

2020-01-11_GPR_Run_H1_A_model2020-01-03_lastmeas2020-01-03_UIM_actuationMultiGPR_gprparamstudy.pdf

in which I varied both the fit range and the length scale. Page 1 shows what the reported systematic error would be if we retained the O3A GPR fit parameters. You can immediately see that the fit dreadfully ignores the systematic error above 50 Hz, all in an attempt to get the median of the fit to align smoothly with the data between 6 and 50 Hz. The data report as much as 40% systematic error at 150 Hz, which is *not at all* accurately represented by the fit; thus, in a region where it impacts the response function, we would be drastically under-reporting our systematic error. Pages 2 and 3, with length scales of 0.2 and 0.5, respectively, and a wide-open fit frequency range, show that the current kernel is just not capable of handling the diverse length scales (types of frequency correlation) present in the data. Pages 4-5 show a similar study comparing 0.5 and 0.5 length scales, but now with a tight 10-100 Hz fit range. Pages 6-11 show a wide variety of length scales, from "too short" to "too long," over the settled-upon frequency range of 7 to 250 Hz.

For the sensing function, I show the "matrix" of the study, with three files, one each at length scales 0.2, 0.35, and 0.5:
/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/O3/H1/Results/Uncertainty/

2020-01-03_H1_model2020-01-03_allO3BMeas_nofsQcorr_MCMCfmin20Hz_GPRfmincollection_lengthscaleZp2_withPCALXHFdata_sensingFunction_GPR.pdf

2020-01-03_H1_model2020-01-03_allO3BMeas_nofsQcorr_MCMCfmin20Hz_GPRfmincollection_lengthscaleZp35_withPCALXHFdata_sensingFunction_GPR.pdf

2020-01-03_H1_model2020-01-03_allO3BMeas_nofsQcorr_MCMCfmin20Hz_GPRfmincollection_lengthscaleZp5_withPCALXHFdata_sensingFunction_GPR.pdf

Again, this is *definitely* the most arbitrary of decisions, but my gut was guided by
(1) the amount of coverage that seems reasonable for both the sub-20 Hz low-frequency nonsense in magnitude and phase, as well as above 1000 Hz, and
(2) the amount of clearly artificial "standing-wave-like wiggle" in the 50 to 500 Hz band.
We shall see!

I have *not* made response function uncertainty budgets for each of these different instantiations, but remember that you can use Evan's follow-up slides in G1901479 (the O3A C00 uncertainty review) to get a feel for the impact of at least the sensing function. For the O3A model, an uncertainty in the unknown systematic error of the sensing function at the level of +/- 12% / 8 deg at 20 Hz will start to negatively impact the response function uncertainty. Notice that, with fmin set to 20 Hz, for any length scale (0.2, 0.35, or 0.5) the sensing function systematic error uncertainty *does not exceed* +/- 5% / 2 deg at 20 Hz. Conversely, with the length scale fixed at 0.35, even the *very* conservative uncertainty envelope that results from an fmin of 30 Hz does not exceed +/- 9% / 5 deg at 20 Hz. Good!
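The two knobs discussed in this comment, the fit window (fmin, fmax) and the RBF length scale, can be played with in a small numpy sketch. It assumes the length scale acts on log10(frequency) (consistent with the \Delta f relation in point (4)), fits a zero-mean GP to a real-valued residual such as |C_meas/C_model| - 1, and uses a fixed length scale and amplitude rather than optimizing within the TABLE 1 prior bounds. The production fits (complex residuals, multi-stage actuator GPR) are not reproduced, and all names and the default amplitude are hypothetical.

```python
import numpy as np

def correlation_distances(f, ell):
    """Distances below and above f spanned by one RBF length scale ell in log10(f)."""
    return f * (1 - 10 ** (-ell)), f * (10 ** ell - 1)

def rbf(x1, x2, ell, amp):
    """Squared-exponential (RBF) covariance between two sets of log10 frequencies."""
    return amp**2 * np.exp(-0.5 * (x1[:, None] - x2[None, :]) ** 2 / ell**2)

def gpr_fit(f, y, y_err, f_eval, ell, amp=0.05, fmin=None, fmax=None):
    """Posterior mean and 1-sigma of a zero-mean GP on residual y at f_eval,
    using only data with fmin <= f <= fmax."""
    keep = np.ones(f.shape, bool)
    if fmin is not None:
        keep &= f >= fmin
    if fmax is not None:
        keep &= f <= fmax
    x, xs = np.log10(f[keep]), np.log10(f_eval)
    K = rbf(x, x, ell, amp) + np.diag(y_err[keep] ** 2)
    Ks = rbf(xs, x, ell, amp)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y[keep]))  # K^-1 y
    mean = Ks @ alpha
    v = np.linalg.solve(L, Ks.T)
    var = amp**2 - np.sum(v**2, axis=0)
    return mean, np.sqrt(np.maximum(var, 0))
```

As a check on point (4), `correlation_distances(100.0, 0.5)` gives about (68.4, 216.2) Hz and `correlation_distances(100.0, 0.07)` about (14.9, 17.5) Hz. Evaluating the GP below fmin shows the uncertainty growing back toward the prior amplitude, which is why the choice of fmin controls the low-frequency coverage discussed above.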
If someone wants to compare the response function uncertainty across the various fmin values used with a length scale of 0.35, use the RRNom.py command from the above comment, LHO aLOG 54909, but substitute the sensing function GPR .hdf5 file with each of the following:

/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/O3/H1/Results/Uncertainty/

2020-02-04_GPR_Run_H1_C_allO3BMeas_collection_PCALSYSERR_nofsQcorr_MCMCfmin20Hz_GPRfmin5_lengthscaleZp35_withPCALXHFdata_model20200103_sensingFunction_GPR_posteriors.hdf5

2020-02-04_GPR_Run_H1_C_allO3BMeas_collection_PCALSYSERR_nofsQcorr_MCMCfmin20Hz_GPRfmin12_lengthscaleZp35_withPCALXHFdata_model20200103_sensingFunction_GPR_posteriors.hdf5

2020-02-04_GPR_Run_H1_C_allO3BMeas_collection_PCALSYSERR_nofsQcorr_MCMCfmin20Hz_GPRfmin14_lengthscaleZp35_withPCALXHFdata_model20200103_sensingFunction_GPR_posteriors.hdf5

2020-02-04_GPR_Run_H1_C_allO3BMeas_collection_PCALSYSERR_nofsQcorr_MCMCfmin20Hz_GPRfmin17_lengthscaleZp35_withPCALXHFdata_model20200103_sensingFunction_GPR_posteriors.hdf5

2020-02-04_GPR_Run_H1_C_allO3BMeas_collection_PCALSYSERR_nofsQcorr_MCMCfmin20Hz_GPRfmin20_lengthscaleZp35_withPCALXHFdata_model20200103_sensingFunction_GPR_posteriors.hdf5

Note, though, that the data used for these .hdf5 sets contain all the 2019-10-31 through 2020-01-20 measurements processed with an *attempt* to correct for PCAL systematic error, which we currently believe is flawed.
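For that fmin comparison, a small driver can rebuild the RRNom.py call from LHO aLOG 54909 with only the sensing-function GPR file swapped. This is a sketch: it reuses exactly the flags and paths quoted there, defaults to a dry run that only prints each command, and the helper names are hypothetical. Every run points at the same --outDir; whether RRNom.py names its outputs distinctly per input file is not checked here, so you may want to pass a separate out_dir per run.

```python
import shlex
import subprocess

BASE = "/ligo/svncommon/CalSVN/aligocalibration/trunk"
UNC = f"{BASE}/Runs/O3/H1/Results/Uncertainty"

def c_gpr_path(fmin):
    """Sensing-function GPR posterior file for a given GPR fmin [Hz], length scale 0.35."""
    return (f"{UNC}/2020-02-04_GPR_Run_H1_C_allO3BMeas_collection_PCALSYSERR_nofsQcorr_"
            f"MCMCfmin20Hz_GPRfmin{fmin}_lengthscaleZp35_withPCALXHFdata_"
            "model20200103_sensingFunction_GPR_posteriors.hdf5")

def rrnom_command(c_gpr_file, out_dir=f"{UNC}/"):
    """Arguments of the RRNom.py call in LHO aLOG 54909, with the C GPR file swapped."""
    return [
        "python3.5", f"{BASE}/Common/pyDARM/RRNom.py",
        "--IFO=LHO",
        f"--HDF5_A_MCMCresults={UNC}/O3B_H1_A_MCMC_20200103Model_REF.hdf5",
        f"--HDF5_A_GPRresults={UNC}/2020-01-16_GPR_Run_PCALSYSERR_collection_O3B_H1_A_model20200103_ALL_"
        "actuationMultiGPR_UIM_f7-250Hz_L0p1_PUM_f7-500Hz_L0p2_TST_f10-1000Hz_L0p5.hdf5",
        f"--HDF5_C_MCMCresults={UNC}/O3_H1_C_MCMC_2019-12-26_forreference_fmin20Hz.hdf5",
        f"--HDF5_C_GPRresults={c_gpr_file}",
        f"--modelPath={BASE}/Runs/O3/H1/params/",
        "--modelFilename=modelparams_H1_20200103",
        "--IFOmodel=modelPars",
        "--sampleNumber=1000", "--seed=1111", "--version=ref",
        f"--outDir={out_dir}",
        "--plot1SigmaUncs", "--saveSummaries", "--gpsTime=1261415486",
    ]

def run_fmin_study(fmins=(5, 12, 14, 17, 20), dry_run=True):
    """Print (and, if dry_run is False, execute) one RRNom.py call per GPR fmin."""
    for fmin in fmins:
        cmd = rrnom_command(c_gpr_path(fmin))
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)
```

Passing a list to subprocess.run (rather than one long shell string) avoids quoting problems with these very long paths; shlex.join is only used to print a copy-pasteable version.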
