(Travis S., Gerardo M.)

Pumpdown was started this morning. Sorry, there is no blowdown data: by the time I remembered to take the reading, there was no longer any pressure inside the chamber. Travis connected all of the equipment while I wrestled with the viewport inspection.

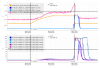

Pumpdown started without issue. We began with the scroll pump on the Super Sucker 500 (SS500) cart, which brought the pressure down to 3.7×10⁻¹ Torr. We then switched the cart over to pumping with the turbo pump and scroll pump together. The system tripped once, which is usually caused by a timeout; restarting the turbo pump was all it took for the pumpdown to continue.

Unfortunately, the pumpdown had to be stopped at 8:00 PM local time at a pressure of 4.0×10⁻⁵ Torr. At that pressure the set points on the SS500 cart controller had not yet activated. The set points are hard-set and cannot be modified from the SS500 controller screen.

Pumpdown will be restarted tomorrow morning.

Note: the screens shown in the attachment have no set points for channel 1.