Summary:

This post provides an estimate of the thermal transient caused by raising the JAC input power from 0 W to 100 W. The result will be used in the design of the JAC heater.

The main conclusion is: changing the JAC input power from 0 W to 100 W results in a cavity length change of ≈ 0.2 µm (corresponding to ≈ 100 V of PZT actuation), which remains within the PZT range. No heater-based compensation is required.

Details:

Two possible types of state change are considered. In the first, light scattered from the mirrors is absorbed by the cavity body, heating it and causing the entire cavity to expand; this is expected to have a long time constant. In the second, the mirrors themselves expand due to absorption, which shortens the cavity length; this is expected to have a relatively short time constant.

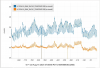

The attached plot shows one hour of trend data starting from 2024-11-14 19:30 UTC. From top to bottom, the traces are: PMC transmitted power, PMC temperature from the thermistor, heater input, and PZT input.

This lock occurred after the PMC had been unlocked for about three hours, so it represents the transition from a cold state to a hot state. Just before locking, the PMC temperature was about 307.0 K, and immediately after locking, the PZT input voltage was 370 V.

Although changes in the heater input appear to cause rapid changes in the PMC temperature, these are not reliable indicators of the actual body temperature. The observed change is about 0.4 K, but with aluminum's thermal expansion coefficient of roughly 10^{-5} /K, a 0.1 K change alone would change the cavity length by λ/2, i.e. shift the resonance by one FSR. That would exceed the PZT range and cause a lock loss. This suggests that the rapid apparent temperature changes arise because the thermistor picks up radiation from the heater or senses the local temperature near the heater, rather than the true body temperature.
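The "0.1 K ≈ one FSR" statement follows from the linear expansion relation ΔL = α·L·ΔT with ΔL = λ/2. The post does not quote the cavity length, so the minimal sketch below inverts the relation to find the length for which the statement holds, and then checks how many FSRs the 0.4 K thermistor swing would correspond to. The 1064 nm wavelength and the single-length linear model are assumptions, not values taken from the post.

```python
# Sketch: check the claim that a 0.1 K body-temperature change shifts the
# cavity resonance by one FSR, i.e. changes the length by lambda/2.
# Assumptions (not from the post): laser wavelength of 1064 nm and a simple
# linear expansion model dL = alpha * L * dT applied to the whole cavity length.

WAVELENGTH_M = 1064e-9   # assumed laser wavelength (m)
ALPHA_PER_K = 1e-5       # expansion coefficient used in the post (1/K)
DELTA_T_K = 0.1          # temperature change quoted in the post (K)


def length_for_one_fsr(alpha, delta_t, wavelength):
    """Cavity length L for which alpha * L * delta_t equals lambda / 2."""
    return (wavelength / 2) / (alpha * delta_t)


def fsr_shift(alpha, length_m, delta_t, wavelength):
    """Number of FSRs the resonance moves for a temperature change delta_t."""
    return alpha * length_m * delta_t / (wavelength / 2)


L = length_for_one_fsr(ALPHA_PER_K, DELTA_T_K, WAVELENGTH_M)
shift_04 = fsr_shift(ALPHA_PER_K, L, 0.4, WAVELENGTH_M)

print(f"implied cavity length: {L:.3f} m")
print(f"FSR shift for a 0.4 K body-temperature change: {shift_04:.1f}")
```

Under these assumptions the statement holds for a cavity of about half a meter, and a genuine 0.4 K body change would move the resonance by about four FSRs, far beyond what the PZT could follow.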

Therefore, to evaluate the body temperature change, we need to disregard these fast variations and look at the average thermistor signal. This shows a change of less than 0.1 K over one hour, with the highest temperature occurring immediately after lock. Since the body does not warm up after the light is injected, the observed temperature change is attributed to the heater input change, and the body expansion can be neglected.

The important point is that although the temperatures just after lock and at t = 3500 s are almost the same, the PZT input voltage changes by about 100 V. This is interpreted as a change in the mirror state. A 100 V change corresponds to a cavity length change of about 0.2 µm, which can be attributed to the change in input power.
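As a quick consistency check of the voltage-to-length conversion, the sketch below derives the PZT gain implied by the post's own numbers (100 V for 0.2 µm) and expresses the resulting length change as a fraction of λ/2. The gain is inferred rather than taken from a PZT calibration, and the 1064 nm wavelength is an assumption.

```python
# Sketch: convert the observed PZT voltage change into a cavity-length change.
# Assumption: the PZT gain is inferred from the post's own numbers
# (100 V <-> 0.2 um); the real gain should come from the PZT calibration.
# Assumption: laser wavelength of 1064 nm.

DELTA_V = 100.0          # PZT input change between lock and t = 3500 s (V)
DELTA_L_M = 0.2e-6       # cavity-length change quoted in the post (m)
WAVELENGTH_M = 1064e-9   # assumed laser wavelength (m)

# Implied PZT actuation gain in meters per volt.
pzt_gain_m_per_v = DELTA_L_M / DELTA_V

# How large the mirror-induced length change is compared with one FSR (lambda/2).
fraction_of_fsr = DELTA_L_M / (WAVELENGTH_M / 2)

print(f"implied PZT gain: {pzt_gain_m_per_v * 1e9:.1f} nm/V")
print(f"length change as a fraction of lambda/2: {fraction_of_fsr:.2f}")
```

With these inputs the implied gain is about 2 nm/V, and the mirror-induced change is well under one FSR, consistent with the lock being held throughout.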

Old 18-bit DAC card removed, SN: 110425-48

New 18-bit DAC card installed, SN: 110425-32

FRS35077

Everyone keeps asking, so I figured it might as well go in the ALOG. Be aware: there is a big spider at EX, and we think it is a wolf spider. I estimate its size at 5" (13 cm) leg to leg. Its body seemed to be 3" long and 1" in diameter at its widest. Here are the pictures.