TITLE: 06/05 Eve Shift: 2330-0500 UTC (1630-2200 PST), all times posted in UTC

STATE of H1: Planned Engineering

INCOMING OPERATOR: None

SHIFT SUMMARY:

Just put the IFO in DOWN for the night after losing lock from ENGAGE_ASC_FOR_FULL_IFO. I had trouble all evening getting DRMI to stay locked - we kept losing lock due to the 16.2 Hz oscillation that Craig and Elenna found yesterday (84769). I have no idea why it has only been appearing in the evenings: yesterday evening we had these issues, this morning there were none at all, and this evening they were back! I would lose lock to it any time after ENGAGE_DRMI_ASC, and the oscillation was present even in DRMI_LOCKED_PREP_ASC, just not ringing up.

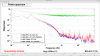

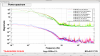

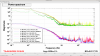

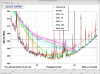

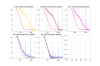

One of the times I was able to get DRMI to lock, I stayed in DRMI_LOCKED_PREP_ASC and took MICH, PRCL, and SRCL OLG measurements. Later on, Sheila called and figured out that the oscillation must be due to the MICH gain being too high, so I lowered the MICH2 gain by 30%, from 1 to 0.7. After that, the 16 Hz oscillation went away (diaggui) and we were able to get through DRMI. Sheila said we probably needed the gain back at 1 for DRMI_TO_POP, so I paused at RESONANCE, reset the MICH2 gain to 1, and then continued. We lost lock during ENGAGE_ASC_FOR_FULL_IFO.

So the MICH2 gain is currently back at its original value of 1.
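For reference, the temporary MICH2 change works out as below. The dB figure is computed here and is not from the shift notes; the statement that the whole MICH OLG scales by the same factor assumes MICH2 acts as a pure scalar gain in series with the MICH loop.

$$
g_{\text{MICH2}} = 1 \times (1 - 0.30) = 0.7, \qquad 20\log_{10}(0.7) \approx -3.1\ \text{dB}
$$

Under that assumption, the MICH open-loop gain magnitude drops by about 3.1 dB at every frequency while the gain is at 0.7, and returns to its nominal value once the gain is reset to 1.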

Evening task progress for today:

- SRCL offset check FDS + range DONE

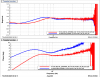

- Try new LSC FF DONE 84807

- Run CAL sweep PARTIALLY DONE 84808

- Close ISS 2nd loop

- Try LASER_NOISE_SUPPRESSION

- OMC alignment offsets

- IMC injection (overnight) IN PROGRESS, starting soon

LOG:

23:30 Sitting in NOMINAL_LOW_NOISE while tests are being run

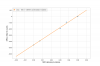

00:32 Started BB calibration measurement

00:37 BB measurement done

00:38 Lockloss due to earthquake

- Started a manual initial alignment

- Tried to leave SCAN_ALIGNMENT for UNLOCKED and got a Guardian node error about the time being in the past - had to switch the node to MANUAL before reloading to get out of it (txt)

- After the initial alignment was done, I relocked SRY and left it there for a while as Vicky and Kevin worked on SQZ

02:24 Lockloss from ENGAGE_DRMI_ASC due to the 16.2 Hz oscillation (84769)

02:37 Lockloss from TURN_ON_BS_STAGE2 due to the 16.2 Hz oscillation

- I paused in DRMI_LOCKED_PREP_ASC and adjusted SRM to make POP look better, then went into ENGAGE_DRMI_ASC. An oscillation started, but we survived it. Then I went into TURN_ON_BS_STAGE2 and we immediately lost lock.

03:24 Lockloss from ACQUIRE_DRMI_1F while trying to acquire. We had acquired DRMI earlier, and I stayed in DRMI_LOCKED_PREP_ASC and took MICH, PRCL, and SRCL OLG measurements, but we lost DRMI when I aborted the SRCL measurement and weren't able to reacquire it.

03:40 Lockloss from ACQUIRE_DRMI_1F

- Ran another initial alignment

04:20 Lockloss from TURN_ON_BS_STAGE2 due to the 16.2 Hz oscillation

04:31 Lockloss from ENGAGE_DRMI_ASC due to the 16.2 Hz oscillation

- Sheila called; I lowered the MICH2 gain by 30%, from 1 to 0.7, and the 16 Hz oscillation went away, so we were able to get through DRMI

- Paused at RESONANCE, reset the MICH2 gain to 1, and then continued

04:58 Lockloss from ENGAGE_ASC_FOR_FULL_IFO

| Start Time | System | Name | Location | Lazer_Haz | Task | Time End |
| --- | --- | --- | --- | --- | --- | --- |
| 00:44 | VAC | Gerardo | LVEA | YES | Moving a ladder by HAM4 | 01:21 |