Sheila has been driving us through ENGAGE_ASC_FOR_FULL_IFO, TMS_SERVO, and ENGAGE_SOFT_LOOPS. She closed all of the WFS-based ASC loops by hand, with CHARD at reduced gain. We are sitting at an alignment which does not give the best buildups we've seen so far, but is stable. The CHARD P sensing matrix is now REFL A 45 I = 1 and REFL B 45 I = 0.5. Green references have been reset to our current alignment, to hopefully make ENGAGE_ASC_FOR_FULL_IFO work automatically next time we acquire without yanking the IFO out of lock. A screenshot of the references is posted. Now engaging ADS loops. This cleaned up the buildups for us a bit. Now resetting the green references again. Now turned on the transmon servos, which made a big difference in the green references, so we are resetting the green references again again. Now turned on all SOFT loops, with reduced gain for PRM Pitch (ADS PIT 3). Now resetting green references again again again.

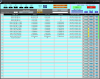

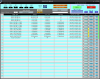
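For readers less familiar with input (sensing) matrices, here is a minimal sketch of what the CHARD P row quoted above does: it forms the loop error signal as a weighted sum of the two WFS signals. The sensor readings and the gain value are made up for illustration, and the names `error_signal` and `control_input` are hypothetical helpers, not anything from the real-time model or guardian. The last lines also check that negating both the matrix row and the filter bank gain leaves the loop input unchanged.

```python
# Hypothetical WFS readings in arbitrary units (illustration only, not measured data).
sensors = {"REFL_A_45_I": 0.20, "REFL_B_45_I": -0.10}

# CHARD P input matrix row as quoted in this entry.
chard_p_row = {"REFL_A_45_I": 1.0, "REFL_B_45_I": 0.5}


def error_signal(row, readings):
    """Weighted sum of sensor readings defined by one input-matrix row."""
    return sum(weight * readings[name] for name, weight in row.items())


def control_input(row, readings, gain):
    """Error signal scaled by the (scalar) filter bank gain."""
    return gain * error_signal(row, readings)


err = error_signal(chard_p_row, sensors)  # 1.0 * 0.20 + 0.5 * (-0.10)

# Flipping the sign of every matrix element and of the gain gives the same result.
flipped_row = {name: -weight for name, weight in chard_p_row.items()}
hypothetical_gain = -20.0  # assumed sign and value, for illustration only
same = abs(
    control_input(chard_p_row, sensors, hypothetical_gain)
    - control_input(flipped_row, sensors, -hypothetical_gain)
) < 1e-12
```

This treats the filter bank as a pure scalar gain; the real banks also contain frequency-dependent filters, which do not change the sign argument.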

In the last lock, we were able to get to DC readout, and Georgia and Julia worked on some violin damping. Several ASC settings were different from the guardian:

- CHARD Y input matrix: -1 * REFL A 45 + 1 * REFL B 45 I; CHARD P input matrix: 1 * REFL A 45 I + 0.5 * REFL B 45 I (these should have been multiplied by -1 so that the filter bank gain can be positive; they are set that way in the guardian).

- ADS PIT3 gain lower by 10dB compared to normal (turned off FM7)

- CHARD Y gain would be 17.5; it is 3 instead, and the loop started to ring at 10. I ran an OLG template, which had poor coherence; screenshot attached.

- CHARD P gain would be 1.7 with an additional +30 dB of gain; instead I used a gain of 20.

- PRC2 loops have their nominal gain of 250, but the +10 dB filters are off. I tried stepping the PRC2 P gain upwards: 500 was fine, but we lost lock when I tried 1000.

These last three are new input matrix values, so this makes sense.
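To compare the gains in the list above on a common footing, here is a rough sketch that folds the dB filter gains into the scalar gains. It assumes the dB values are amplitude ratios (factor = 10^(dB/20)) and that the filters in question are flat gain stages, neither of which is stated in this entry. The helper names are hypothetical.

```python
def db_to_factor(db):
    """Convert a gain in dB to a linear amplitude factor (20*log10 convention assumed)."""
    return 10 ** (db / 20)


def effective_gain(gain, filter_db=0.0):
    """Scalar gain multiplied by a flat filter gain given in dB."""
    return gain * db_to_factor(filter_db)


# CHARD P: guardian value (1.7 with +30 dB) versus what was used in this lock (20, no boost).
chard_p_guardian = effective_gain(1.7, 30)   # approximately 53.8
chard_p_used = effective_gain(20)            # 20.0

# PRC2: nominal 250 with the +10 dB filters versus 250 with them off.
prc2_guardian = effective_gain(250, 10)      # approximately 790.6
prc2_used = effective_gain(250)              # 250.0

# ADS PIT3: 10 dB lower than normal corresponds to this multiplicative factor.
ads_pit3_factor = db_to_factor(-10)          # approximately 0.316

print(f"CHARD P used / guardian: {chard_p_used / chard_p_guardian:.2f}")
print(f"PRC2 used / guardian:    {prc2_used / prc2_guardian:.2f}")
print(f"ADS PIT3 factor:         {ads_pit3_factor:.3f}")
```

Under these assumptions, the guardian-equivalent PRC2 gain of roughly 790 falls between the 500 that held and the 1000 that lost lock. Since the input matrix values are new, the effective gains are not directly comparable with the old configuration.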