J. Kissel

I've created analysis scripts that take pre-measured DTT transfer functions of the OFI suspension and compare them against the model and previous measurements, similar to what's in place for all other suspension types. The new scripts live here:

/ligo/svncommon/SusSVN/sus/trunk/OFIS/Common/MatlabTools/

plotOFIS_dtttfs_M1.m << processes a single DTT transfer function measurement into a standard format, and shows the cross-coupling and the EUL-driven to OSEM-sensed transfer functions

plotallofis_tfs_M1.m << compares as many measurements as possible against a standard model

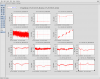

The resulting plots are attached.

In summary -- still plenty of work to do to understand the actuated OFI!

Measurement Details:

The set of transfer functions shown, from 2017-10-13 (LHO aLOG 39033), was taken with the eddy current damping magnets completely backed off. The drive chain is that of OM1 (a 20 Vpp / 16 bit DAC, a HAM-A driver, AOSEMs for coils, and 3 DIA x 6 LEN [mm] magnets), and the sensing chain is as designed (AOSEM, US SatAmp, 16 bit / 40 Vpp ADC).
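For reference, the converter specs above translate into counts-to-volts factors as sketched below. This is a minimal sketch assuming the full peak-to-peak range maps linearly onto all 2^16 codes, and ignoring any whitening, anti-aliasing/anti-imaging, or differential gain stages around the converters; the names `volts_per_count`, `dac_v_per_ct`, and `adc_v_per_ct` are introduced here for illustration only.

```python
# Counts-to-volts conversion for the converters quoted in the measurement details.
# Assumption: the full peak-to-peak range spans all 2**16 codes linearly, and no
# other gain stages (whitening, AA/AI filters, differential drivers) are included.

N_BITS = 16


def volts_per_count(v_pp: float, n_bits: int = N_BITS) -> float:
    """Return the converter LSB size in [V/ct] for a range of v_pp [Vpp]."""
    return v_pp / 2**n_bits


dac_v_per_ct = volts_per_count(20.0)  # OM1 drive-chain DAC, 20 Vpp
adc_v_per_ct = volts_per_count(40.0)  # OFI sensing-chain ADC, 40 Vpp

print(f"DAC: {dac_v_per_ct:.4e} V/ct")
print(f"ADC: {adc_v_per_ct:.4e} V/ct")
```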

Calibration Details:

You'll note that the scale factors of the measured L-to-L and Y-to-Y transfer functions don't match the model. I think the agreement of the T-to-T TF with the model is a coincidence. I'm confident that I don't yet understand the electronics chain, and confident that the discrepancy is in the electronics, because the scale factor is the same above and below the resonance for each of the mismatched DOFs. A dynamical error (mass, inertia, stiffness) would move the stiffness-dominated response below resonance and the mass-dominated response above it by different amounts, whereas a flat gain error moves both equally. (I've also manipulated the dynamical parameters, and I'd have to change them to nonsensical values to even come close to "fixing" the problem, which doesn't help.)
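The "same scale factor above and below the resonance" argument can be illustrated with a toy single-DOF damped oscillator, x/F = 1/(k - m ω² + i b ω). This is a sketch with made-up parameter values (the `m`, `k`, `b` below are hypothetical, not the OFI's): a flat gain error shifts both asymptotes by the same factor, while doubling the mass moves only the above-resonance asymptote.

```python
import numpy as np

# Toy single-DOF oscillator, x/F = 1 / (k - m*w**2 + 1j*b*w).
# Hypothetical parameters chosen only for illustration (resonance near 1 Hz).
m, k, b = 1.0, (2 * np.pi * 1.0) ** 2, 0.1


def tf(f, m=m, k=k, b=b, gain=1.0):
    """Complex displacement-per-force response at frequency f [Hz]."""
    w = 2 * np.pi * f
    return gain / (k - m * w**2 + 1j * b * w)


f_lo, f_hi = 0.01, 100.0  # well below and well above the ~1 Hz resonance

# Flat (electronics-like) gain error of 1.6: same ratio on both sides.
gain_lo = abs(tf(f_lo, gain=1.6) / tf(f_lo))
gain_hi = abs(tf(f_hi, gain=1.6) / tf(f_hi))

# Dynamical change (mass doubled): only the mass-dominated asymptote moves.
mass_lo = abs(tf(f_lo, m=2 * m) / tf(f_lo))
mass_hi = abs(tf(f_hi, m=2 * m) / tf(f_hi))

print(f"gain x1.6 : below {gain_lo:.3f}, above {gain_hi:.3f}")
print(f"mass x2   : below {mass_lo:.3f}, above {mass_hi:.3f}")
```

Changing the stiffness `k` instead would do the mirror image, moving only the below-resonance asymptote, which is why a uniform offset on both sides points at a gain rather than a dynamical parameter.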

Here's what I do know:

(1) I have installed calibration filters into the OSEMINF banks of the OFI's sensing chain. These filters have the same gain we've been using for every OSEM since the beginning of time: 0.02333 [um/ct]. This isn't necessarily accurate, given that

(a) the OFI uses the US satellite amp, which has a different transimpedance than the UK sat-amps (US = 150e3, UK = 121e3 [V/A]), and

(b) the UK sat-amp transimpedance has changed from 240e3 to 121e3 [V/A] since that number was originally calculated.

However, we'd gain at most a factor of (240 / 150) = 1.6 with that correction, BUT that factor would apply to all DOFs equally, so it would screw up the (currently matching) T-to-T TFs.

(2) At the time of these measurements, I *hadn't* yet normalized the individual sensors to a "perfect" OSEM with an open-light current of 30000 [ct]. Having just installed those normalizations now, I find they amount to at most a ~10% gain discrepancy between sensors.

(3) Though we don't yet have the complete calculation of the force coefficient of the AOSEM coil + 3x6 [mm] magnet combo à la T1000164, the only thing not modeled is the larger radius of the magnet. Thus, to first order, we can scale the strength by the change in volume of the magnet, as described on pg 4 of G1701519. Since both magnets are 6 mm long, the volume ratio is just the square of the diameter ratio, (3/2)^2 = 2.25. Hence I've used

forceCoeff_2x6 = 0.0309; % [N/A]; T1000164, T1400030, etc.

forceCoeff_3x6 = (3.0/2)^2 * forceCoeff_2x6; % [N/A]; G1701519 pg 4

which gives forceCoeff_3x6 = 0.069525 [N/A].

(4) I'm *assuming* that OM1's HAM-A coil driver is the -v3 version of the circuit, in which the output impedance is 1.2 kΩ (because of ECR E1201027), so the transconductance gain is 0.988 [mA/V]; but I'm only 90% confident. It might be a -v2, with a transconductance of 9.6 [mA/V], but then the data from the *actual* OM1 acceptance measurements wouldn't match so well (e.g. see LHO aLOG 38260).
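The arithmetic behind items (1) through (4) can be collected into one short sketch. It assumes that sensor counts scale linearly with sat-amp transimpedance, that the DAC maps 20 Vpp onto 2^16 codes with no other gain stages, and that the force coefficient applies per coil with no lever arms. The 27000 [ct] open-light current is a hypothetical example, not a measured value, and the helper names (`olc_normalization`, `force_per_dac_count`) are illustrative only.

```python
# Back-of-the-envelope numbers for items (1)-(4) above.
# Assumptions: sensor counts scale linearly with sat-amp transimpedance;
# the DAC maps 20 Vpp onto 2**16 codes with no other gain stages;
# the force coefficient is per coil, with no lever arms included.

# (1) Sat-amp transimpedance each calibration assumes [V/A].
TIA_ORIGINAL = 240e3  # UK sat-amp when 0.02333 um/ct was calculated
TIA_US = 150e3        # US sat-amp used on the OFI
OSEM_GAIN = 0.02333   # [um/ct], legacy OSEMINF calibration

tia_factor = TIA_ORIGINAL / TIA_US       # largest possible correction
corrected_gain = OSEM_GAIN * tia_factor  # [um/ct], if the factor applies


# (2) Per-sensor normalization to a "perfect" 30000 [ct] open-light current.
def olc_normalization(measured_olc_ct: float, ideal_olc_ct: float = 30000.0) -> float:
    """Gain that scales a sensor's readout to the ideal open-light current."""
    return ideal_olc_ct / measured_olc_ct


# (3) Scale the 2x6 [mm] force coefficient by magnet volume (same 6 mm length).
FORCE_COEFF_2X6 = 0.0309  # [N/A]
force_coeff_3x6 = (3.0 / 2.0) ** 2 * FORCE_COEFF_2X6  # [N/A]

# (4) Coil-driver transconductance for the two candidate HAM-A versions [A/V].
TRANSCONDUCTANCE = {"v3": 0.988e-3, "v2": 9.6e-3}
DAC_V_PER_CT = 20.0 / 2**16  # [V/ct]


def force_per_dac_count(version: str) -> float:
    """Force per DAC count [N/ct] for one coil, under the assumptions above."""
    return DAC_V_PER_CT * TRANSCONDUCTANCE[version] * force_coeff_3x6


print(f"(1) factor {tia_factor:.2f}, gain {corrected_gain:.5f} um/ct")
# Hypothetical OLC of 27000 ct, for illustration only.
print(f"(2) OLC 27000 ct -> normalization {olc_normalization(27000.0):.3f}")
print(f"(3) forceCoeff_3x6 = {force_coeff_3x6:.6f} N/A")
for v in ("v3", "v2"):
    print(f"(4) HAM-A -{v}: {force_per_dac_count(v):.3e} N/ct")
print(f"    v2/v3 ratio = {TRANSCONDUCTANCE['v2'] / TRANSCONDUCTANCE['v3']:.2f}")
```

The roughly 10x difference between the -v2 and -v3 transconductances is far larger than the 1.6 sensing-side factor, which is why the driver version matters so much for the actuation scale.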

Model Details:

This model matches the frequency and Q of the measured data quite well. I've tweaked the original model's parameter set (see LHO aLOG 12589) to better match the data. The following table describes the differences (remember, x = L, y = T, z = V):

| Param | ofisopt_damp (original) | ofisopt_h1susofi (tweaked) | difference (h1susofi - damp) | percent diff | why change? |
|---|---|---|---|---|---|
| *Unused global eddy current damping coefficient* | | | | | |
| bd | 0.10898 | 0.1 | -0.0089822 | -8.24% | (just to see if the parameter does anything; it doesn't) |
| *Moments of inertia* | | | | | |
| I0x | 0.43968 | 0.475 | 0.035318 | 8.03% | to move the yaw mode cross-coupling to match in the L-to-L TF |
| I0y | 0.06499 | 0.065 | 9.676e-06 | 0.0149% | (just rounded) |
| I0z | 0.47101 | 0.55 | 0.078985 | 16.8% | to lower the yaw mode frequency to match the Y-to-Y TF, though many things can be manipulated to get this "right" |
| I0xy | 0.00019696 | 0.0002 | 3.035e-06 | 1.54% | (just rounded) |
| I0yz | -0.0066028 | -0.0066 | 2.815e-06 | -0.0426% | (just rounded) |
| I0zx | 0.002156 | 0.002 | -0.00015597 | -7.23% | (just rounded) |
| *Eddy current damping coefficients* | | | | | |
| bx0 | 5.0837 | 3.5 | -1.5837 | -31.2% | reduced to increase the Q to match the backed-off ECDs |
| by0 | 3.7 | 2.5 | -1.2 | -32.4% | reduced to increase the Q to match the backed-off ECDs |
| bz0 | 6.975 | 7 | 0.024974 | 0.358% | (just to see if the parameter affects the TFs in question; it doesn't) |
| byaw0 | 0.47101 | 0.1 | -0.37101 | -78.8% | reduced to increase the Q to match the backed-off ECDs |
| bpitch0 | 0.06499 | 0.06 | -0.0049903 | -7.68% | (just to see if the parameter affects the TFs in question; it doesn't) |
| broll0 | 0.43968 | 0.4 | -0.039682 | -9.03% | (just to see if the parameter affects the TFs in question; it doesn't) |
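The "difference" and "percent diff" columns of the parameter table can be reproduced from the two parameter columns. The definitions used here, difference = h1susofi - damp and percent diff = difference / damp * 100, are inferred from the tabulated numbers rather than stated in the log. Because the displayed parameter values are themselves rounded, recomputed differences can disagree with the table in the last digits.

```python
# Recompute the "difference" and "percent diff" columns of the parameter table.
# Inferred definitions: difference = h1susofi - damp,
# percent diff = difference / damp * 100.
rows = {
    "bd":      (0.10898, 0.1),
    "I0x":     (0.43968, 0.475),
    "I0y":     (0.06499, 0.065),
    "I0z":     (0.47101, 0.55),
    "I0xy":    (0.00019696, 0.0002),
    "I0yz":    (-0.0066028, -0.0066),
    "I0zx":    (0.002156, 0.002),
    "bx0":     (5.0837, 3.5),
    "by0":     (3.7, 2.5),
    "bz0":     (6.975, 7.0),
    "byaw0":   (0.47101, 0.1),
    "bpitch0": (0.06499, 0.06),
    "broll0":  (0.43968, 0.4),
}


def compare(damp: float, h1susofi: float) -> tuple[float, float]:
    """Return (absolute difference, percent difference relative to damp)."""
    diff = h1susofi - damp
    return diff, 100.0 * diff / damp


for name, (damp, new) in rows.items():
    diff, pct = compare(damp, new)
    print(f"{name:8s} {diff:+.5g} {pct:+.3g}%")
```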

All other parameters (those based on physical dimensions) I've left as is.

Further, it's still puzzling why the yaw frequency is so high. Original measurements gave a yaw frequency of ~0.4 Hz (T1000109), yet both Mark and I measure a resonance at ~1.039 Hz.
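To put the yaw discrepancy in perspective, here is a rough scaling sketch. It assumes the yaw mode behaves as a single-DOF torsion pendulum, f = sqrt(κ/I) / (2π), with a stiffness κ that doesn't change when the moment of inertia I does; the real OFI mode is cross-coupled, so treat these numbers only as order-of-magnitude guides. The helper names are illustrative.

```python
import math

# Simple torsional oscillator: f = sqrt(kappa / I) / (2*pi).
# Assumption: the OFI yaw mode behaves as a single-DOF torsion pendulum whose
# stiffness kappa does not change when the moment of inertia I changes.


def freq_ratio_from_inertia(i_old: float, i_new: float) -> float:
    """f_new / f_old for a change in moment of inertia at fixed stiffness."""
    return math.sqrt(i_old / i_new)


def kappa_over_i_change(f_from: float, f_to: float) -> float:
    """Factor by which kappa/I must change to move the mode from f_from to f_to."""
    return (f_to / f_from) ** 2


# I0z tweak from the table: 0.47101 -> 0.55
print(f"yaw f scales by {freq_ratio_from_inertia(0.47101, 0.55):.3f}")

# Measured ~1.039 Hz vs. the ~0.4 Hz of T1000109
factor = kappa_over_i_change(1.039, 0.4)
print(f"kappa/I would need to change by {factor:.3f} (i.e. 1/{1 / factor:.2f})")
```

Under this assumption, the 16.8% increase in I0z lowers the yaw frequency by only ~7.5%, while reconciling ~1.039 Hz with ~0.4 Hz would require κ/I to be off by a factor of ~6.7, far more than any of the parameter tweaks in the table.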