Brian, Oli, Edgard

Testing the implementation of the OSEM estimator installed on SR3. The models were installed on Monday. We don't have the model transfer functions yet, so we were testing the basic OSEM path and the switch on SR3 YAW. It all looks good so far - the OSEM signal is getting to where it should be, and the fader switch is working correctly.

-- detailed testing notes --

ISI is damped. Vacuum is still pumping; pressure is ~16 torr. Looks like the suspension is still moving because of temperature changes, so we can't do useful TFs yet.

GPS time 14289 57082

Look at the drive level of the classic damper: with normal damping the pk-pk range is about -0.04 to +0.04

channels all start with H1:SUS-SR3_

Look at channels: M1_YAW_DAMP_EST_OUTPUT, M1_YAW_DAMP_OSEM_OUTPUT, M1_YAW_DAMP_SIGMON, M1_DAMP_Y_OUTPUT, M1_DAMP_Y_IN1

Y osem damper gain is -0.5 instead of -1.0. Gabriele changed this.

Test 1 - are the OSEM signals getting to the OSEM path, and is the damper control set correctly? - YES!

Set the YAW_DAMP_OSEM filter bank to be the same as the DAMP_Y bank

The output switch for the estimator is OFF - so the OUTPUT signal from the YAW_DAMP_OSEM filter does not get to the osem drives

Output signals of the 2 damping controllers should be the same - and they do look the same. Put them on top of each other and they seem identical

Test 2 - use the classic damping and the YAW_OSEM path, does it work? - Yes!

1. set the YAW_DAMP_OSEM gain to -0.4 (from -0.5)

2. set the classic damping gain to -0.1 (from -0.5) (so 20% of the gain is in the classic path, 80% in the estimator path)
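
Quick arithmetic check of the gain split in steps 1 and 2. This is only a sketch: the variable names are made up for illustration (they are not front-end channel names), and it assumes the two banks carry identical filters, as set up in Test 1, so that the gains simply add.

```python
# Gains copied from the notes above; variable names are hypothetical.
nominal_gain = -0.5    # Y damper gain before the test
osem_path_gain = -0.4  # YAW_DAMP_OSEM gain during Test 2
classic_gain = -0.1    # classic DAMP_Y gain during Test 2

# With identical filters in both banks, the effective gain is the sum.
total_gain = osem_path_gain + classic_gain

# Fraction of the total damping carried by each path.
classic_fraction = classic_gain / total_gain
osem_fraction = osem_path_gain / total_gain

# Expected ratio of the two drive levels when both paths are on.
drive_ratio = osem_path_gain / classic_gain

print(f"total gain       : {total_gain:.2f} (nominal {nominal_gain:.2f})")
print(f"classic fraction : {classic_fraction:.0%}")
print(f"OSEM/est fraction: {osem_fraction:.0%}")
print(f"drive ratio      : {drive_ratio:.1f} to 1")
```

With the estimator path switched on, the total should come back to the nominal gain, and the two drives should sit at roughly 4 to 1.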

gps time ~...58716, Turn on the estimator. This should recover the previous normal damping.

Switch looks smooth - no glitching, no drama. comes on well

The drive levels look like 4 to 1 by eye (correct). total drive looks about the same as before.

Turn off estim path at ...58900 ish. OSEM input signal doesn't look any different (gah, really?!, of course not, it's all sensor noise)

Test 2 successful - switch seems smooth, damping paths look good

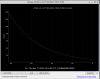

Test 3 - test the switch between OSEM path and Estimator path - is it smooth and well behaved? - YES!

Set Estim damping control to be the same as the OSEM damping control.

Set the OSEM Bandpass to 1 so OSEM_DAMPER and ESTIM_DAMPER inputs should match (they do) and the outputs should match (they do).

these three signals should all be the same now during the switch from OSEM to Estim

M1_YAW_DAMP_SIGMON (this is the switch output)

M1_YAW_DAMP_OSEM_OUTPUT (first input)

M1_YAW_DAMP_EST_OUTPUT (second input)

start around 59720 -

then switch back and forth several times - these signals all stay on top of each other and are indistinguishable.
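
Why the three signals should overlay: a toy model of the fade, not the real front-end code. It assumes the switch is a simple linear crossfade between its two inputs (the actual ramp shape and duration are not recorded in these notes) and uses a synthetic sine in place of real channel data. All function and variable names are hypothetical.

```python
import math

def crossfade(a, b, w):
    """Blend two samples: w=0 returns a, w=1 returns b."""
    return (1.0 - w) * a + w * b

def fade_weights(n_samples, start, length):
    """Linear ramp from 0 to 1, beginning at `start` and lasting `length` samples."""
    weights = []
    for i in range(n_samples):
        w = (i - start) / length
        weights.append(min(1.0, max(0.0, w)))
    return weights

n = 1000
weights = fade_weights(n, start=400, length=200)

# Stand-in for the OSEM controller output (synthetic, not measured data).
osem_out = [math.sin(2 * math.pi * 0.01 * i) for i in range(n)]

# Test 3 condition: the estimator controller output matches the OSEM one.
est_out = list(osem_out)
switch_out = [crossfade(a, b, w) for a, b, w in zip(osem_out, est_out, weights)]
max_diff_matched = max(abs(s - a) for s, a in zip(switch_out, osem_out))

# Counter-example: a small offset on one input shows up at the switch output.
est_offset = [x + 0.1 for x in osem_out]
switch_offset = [crossfade(a, b, w) for a, b, w in zip(osem_out, est_offset, weights)]
max_diff_offset = max(abs(s - a) for s, a in zip(switch_offset, osem_out))

print("matched inputs, max difference:", max_diff_matched)
print("offset inputs, max difference :", max_diff_offset)
```

In this model, identical inputs give a switch output that tracks both of them at every point of the fade, so any visible separation during a switch would point to a mismatch between the two paths or a glitch in the fader.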

GPS is about ...60100

Zoom in for a close look at the start and stop of the fade to look for glitching - I don't see any, see plot in comment.

Test 3 - success - by eye we cannot see any difference in the 3 channels.