Shifter: Beverly Berger

LHO fellow: Evan Goetz

For full results see here.

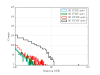

- DQ shift duty cycle: (52.4% + 57.8% + 96.0%)/3 = 68.7%, with a BNS range of 65-70 Mpc.

- Most Summary Page data were missing on 1 May. On 2 May, Omicron triggers were missing and some data updated for only part of the day. Everything appeared to work on 3 May.

- The ETMX oplev was seen to glitch whenever its data were available. When hVeto results were also available, the ETMX oplev did not dominate the glitching as strongly as the ETMY oplev had done previously. Nevertheless, it was the Round 1 winning channel for the 16-hour hVeto run on 3 May and the Round 3 winner for the 24-hour run. The ETMY oplev was well behaved during this shift.
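The duty cycle in the first item is an unweighted mean of the three daily values. This is appropriate because each day spans the same 24 h. A quick Python check of the arithmetic:

```python
# Daily observing duty cycles (%) for the three shift days, as quoted above.
daily_duty_cycles = [52.4, 57.8, 96.0]

# Unweighted mean: valid because every day contributes the same 24 h.
mean_duty_cycle = sum(daily_duty_cycles) / len(daily_duty_cycles)
print(f"{mean_duty_cycle:.1f}%")  # prints 68.7%
```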
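hVeto ranks auxiliary channels in rounds. In each round, the channel whose triggers are most significantly coincident with h(t) glitches "wins" and its vetoes are applied. The ranking is then repeated on the glitches that remain.

Below is a minimal sketch of the per-channel significance and the winner pick. It rests on two assumptions:

- The chance coincidences are Poisson-distributed with expected count `mu`.
- The significance is -log10 of the probability of seeing at least the observed number.

This is a simplification. The real tool also scans time windows and SNR thresholds, which this sketch omits. The channel names and counts are hypothetical.

```python
import math


def log_poisson_pmf(k: int, mu: float) -> float:
    """Natural log of P(K = k) for K ~ Poisson(mu)."""
    return -mu + k * math.log(mu) - math.lgamma(k + 1)


def poisson_significance(n_coinc: int, mu: float) -> float:
    """-log10 P(K >= n_coinc) for K ~ Poisson(mu), summed in log space."""
    if mu <= 0:
        raise ValueError("expected chance coincidences mu must be positive")
    if n_coinc <= 0:
        return 0.0  # P(K >= 0) = 1, so no significance
    log_terms = []
    peak = -math.inf
    k = n_coinc
    while True:
        term = log_poisson_pmf(k, mu)
        log_terms.append(term)
        peak = max(peak, term)
        # Stop once past the Poisson peak and terms are ~e^-40 below the largest.
        if k > mu and term < peak - 40:
            break
        k += 1
    # Log-sum-exp of the tail terms, to avoid underflow for tiny probabilities.
    log_tail = peak + math.log(sum(math.exp(t - peak) for t in log_terms))
    return -log_tail / math.log(10)


# Hypothetical per-channel counts: (observed coincidences, expected by chance).
channels = {
    "HYPOTHETICAL_CHANNEL_A": (40, 4.0),
    "HYPOTHETICAL_CHANNEL_B": (12, 3.0),
}

# The round winner is the channel with the largest significance.
winner = max(channels, key=lambda name: poisson_significance(*channels[name]))
print(winner)  # prints HYPOTHETICAL_CHANNEL_A
```

Summing the tail in log space keeps the significance finite even when the coincidence probability is far below double-precision range. This matters because winning channels often have very small probabilities.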

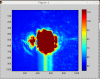

- A peculiar wandering band (see alog 35981) appeared on 2 May, evolving from about 500 Hz to 2 kHz (with a 2nd harmonic up to 4 kHz). The origin is unknown and does not appear to be associated with the MIC channels.

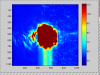

- On 3 May, a seismic noise spike appeared at about 5:50 UTC in the earthquake band, together with noise at about 10-30 Hz in h(t). The apparent earthquake was not visible in any other seismic band. At earthquake-band frequencies, however, it was seen in all the usual places. The upconversion mechanism is not clear, although the bounce and roll mode amplitudes spiked at the time of the h(t) noise spike. The Round 1 hVeto winner for the noise spike was H1:ASC-REFL_B_RF9_I_YAW_OUT_DQ, although many other alignment channels had comparable significance.

- Evan heard an airplane fly over the control room on 2 May. The event appeared in h(t) and was recorded by PlaneMon.

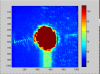

- Evan performed a Bruco scan to try to understand the band of low-SNR 50 Hz glitches that first appeared on 8 April (see alog 36006). There was no obvious correlation. Note that the PSL table spectrogram shows noise features at about 50 Hz. The h(t) glitches were absent on 4 May.

- We point out that the 12:00 UTC range drop on Monday through Thursday, caused by truck traffic on Route 10, is accompanied by a similar drop at 0:00 UTC. Both features shift by +1 h in UTC when Pacific Standard Time is in effect. The 0:00 UTC occurrence is presumably the trucks returning after work. In this case, the coupling to h(t) at 50-100 Hz may be weaker. Note that Robert has speculated that this upconversion (and others) is due to scattering from the Swiss cheese baffle (see alog 35735).
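Bruco is a brute-force coherence scan. It compares h(t) against many auxiliary channels and flags those that are coherent with it in a given frequency band.

Below is a minimal numpy sketch of that idea. It is not Bruco's own code, and it makes these assumptions:

- Coherence is estimated with Welch averaging, using a Hann window and 50% overlap.
- Each channel is ranked by its peak coherence in a band.
- The channel names and synthetic 50 Hz data are hypothetical.

```python
import numpy as np


def welch_coherence(x, y, fs, nperseg):
    """Magnitude-squared coherence |Pxy|^2 / (Pxx * Pyy), Welch-averaged.

    Uses a Hann window and 50% overlapping segments; the window
    normalization cancels in the ratio, so it is omitted.
    """
    window = np.hanning(nperseg)
    step = nperseg // 2
    n_bins = nperseg // 2 + 1
    pxx = np.zeros(n_bins)
    pyy = np.zeros(n_bins)
    pxy = np.zeros(n_bins, dtype=complex)
    for start in range(0, len(x) - nperseg + 1, step):
        xs = x[start:start + nperseg]
        ys = y[start:start + nperseg]
        fx = np.fft.rfft(window * (xs - xs.mean()))
        fy = np.fft.rfft(window * (ys - ys.mean()))
        pxx += np.abs(fx) ** 2
        pyy += np.abs(fy) ** 2
        pxy += np.conj(fx) * fy
    freqs = np.fft.rfftfreq(nperseg, d=1.0 / fs)
    return freqs, np.abs(pxy) ** 2 / (pxx * pyy)


def rank_channels(strain, aux, fs, band, nperseg=1024):
    """Rank auxiliary channels by peak coherence with strain inside band (Hz)."""
    scores = {}
    for name, series in aux.items():
        freqs, coh = welch_coherence(strain, series, fs, nperseg)
        in_band = (freqs >= band[0]) & (freqs <= band[1])
        scores[name] = float(coh[in_band].max())
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Synthetic example: AUX_A carries a 50 Hz disturbance that also couples into
# the strain; AUX_B is independent noise.
rng = np.random.default_rng(0)
fs = 1024.0
t = np.arange(0, 64, 1 / fs)  # 64 s of data
disturbance = np.sin(2 * np.pi * 50 * t)
strain = 0.5 * disturbance + rng.standard_normal(t.size)
aux = {
    "AUX_A": disturbance + 0.1 * rng.standard_normal(t.size),
    "AUX_B": rng.standard_normal(t.size),
}
ranking = rank_channels(strain, aux, fs, band=(40.0, 60.0))
print(ranking)
```

A real scan would loop over thousands of channels and report the top few per frequency bin. The ranking step shown here is the core of that idea.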
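The +1 h shift follows from traffic keeping to fixed local clock times. Meanwhile, Pacific time moves from UTC-7 (PDT) to UTC-8 (PST). A small check follows. It assumes the 12:00 and 0:00 UTC features correspond to 05:00 and 17:00 local traffic, which is inferred from the UTC times rather than measured.

```python
from datetime import datetime, timedelta, timezone

# Fixed-offset Pacific zones (no DST rules needed for this check).
PDT = timezone(timedelta(hours=-7), "PDT")
PST = timezone(timedelta(hours=-8), "PST")


def utc_hour_of_local(local_hour: int, tz: timezone) -> int:
    """UTC hour at which a fixed local clock time occurs in the given zone."""
    local = datetime(2017, 5, 2, local_hour, tzinfo=tz)  # date is arbitrary
    return local.astimezone(timezone.utc).hour


for label, tz in (("PDT", PDT), ("PST", PST)):
    morning = utc_hour_of_local(5, tz)   # assumed inbound truck traffic
    evening = utc_hour_of_local(17, tz)  # assumed return traffic
    print(label, morning, evening)  # PDT 12 0, then PST 13 1
```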