Fil, Richard, Nutsinee

Quick conclusion: The camera and the pick-off beam splitter are in place, but not aligned.

Details: First I swapped the camera fiber cable back so h1hwsmsr could stream images from the HWSX camera while I installed the BS.

While watching the streamed images, I positioned the BS so that it didn't cause a significant change to them (I didn't take the HW plate off).

Then I installed the camera (screwed onto the table). Because the gate valves were closed for the Pcal camera installation, I didn't have the green light to do the alignment.

Richard got the network streaming to work. Now we can look at what the GigE sees through CDS >> Digital Video Cameras. There's nothing there.

The alignment will have to wait until the next opportunity, now that green and the IR are back (LVEA is still laser safe).

The camera is left powered on, connected to the Ethernet cable, with the CCD cap off.

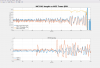

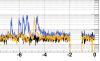

I re-ran the Python script and retook the reference centroids. From GPS time 1175379652 onward, the data written to /data/H1/ITMX_HWS comes from the HWSX camera.
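For anyone who wants to match that GPS time against wall-clock entries, here is a minimal sketch of the conversion to UTC. It is not the script mentioned above; the function name `gps_to_utc` and the constants are only illustrative, and it assumes the fixed 18 s GPS-UTC offset that has applied since 2017-01-01, so it should not be used for earlier times.

```python
from datetime import datetime, timedelta, timezone

# GPS time counts seconds from 1980-01-06 00:00:00 UTC and ignores leap seconds.
GPS_EPOCH = datetime(1980, 1, 6, tzinfo=timezone.utc)

# Assumption: GPS is ahead of UTC by 18 s (true for times after 2017-01-01).
GPS_MINUS_UTC_SECONDS = 18


def gps_to_utc(gps_seconds):
    """Convert GPS seconds to a UTC datetime (hypothetical helper)."""
    return GPS_EPOCH + timedelta(seconds=gps_seconds - GPS_MINUS_UTC_SECONDS)


print(gps_to_utc(1175379652).isoformat())  # 2017-04-04T22:20:34+00:00
```

So the switch to the HWSX camera corresponds to about 22:20 UTC on 4 April 2017, under the stated offset assumption.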

Specification

Camera: BASLER scA1400-17gc

Beam Splitter: Thorlabs BSN17, 2" diameter 90:10 UVFS BS plate, 700-1100 nm, t = 8 mm