WP6548 Remove MY compressed air channels from cell phone alarm system

Bubba, Chandra, Dave:

Following the stand-down of the MY air compressor this afternoon, its channels were removed from the cell phone alarm system and the code was restarted.

TITLE: 03/30 Eve Shift: 23:00-07:00 UTC (16:00-00:00 PST), all times posted in UTC

STATE of H1: Observing at 65Mpc

OUTGOING OPERATOR: Ed

CURRENT ENVIRONMENT:

Wind: 17mph Gusts, 12mph 5min avg

Primary useism: 0.08 μm/s

Secondary useism: 0.22 μm/s

QUICK SUMMARY: Currently on a GRB stand down. Our range has been trending down for the last ten hours, not sure why yet.

TITLE: 03/30 Day Shift: 15:00-23:00 UTC (08:00-16:00 PST), all times posted in UTC

STATE of H1: Observing at 50Mpc

INCOMING OPERATOR: Nutsinee

SHIFT SUMMARY:

Fairly quiet day. a2l was run once. It needs to be run again at the next opportunity. Some NOISEMON injections were run by Evan and Miriam while Livingston was down. A flurry of small earthquakes late in the shift posed no real threat to our lock. Handing off to TJ

LOG:

15:08 H1 out of Observing for Richard to measure TCS table

15:13 Observing

16:36 Karen driving to VPW

19:24 running a2l

19:25 Miriam wants to run NOISEMON injections for test as per WP#6545

19:45 Betsy and Travis to MY

20:47 KingSoft here to check RO. He knows about Observation protocol for onsite driving.

20:56 Betsy and Travis back

22:45 Big glitch in BNS range (didn't catch it in DARM). h(t) shows glitch as fairly broadband

I have turned off the instrument air compressors at Mid Y. These will remain off, in stand-by mode, until needed (same as Mid X).

22:31UTC INJ_TRANS guardian doing its thing.

Travis left just before the alert to drop some boxes at a Mid station, and the balers headed in the direction of the Y arm, though they may be by the H2 building (I can't find them on the camera).

[Jeff Kissel, Aaron Viets] Recent issues with the calibration lines and kappa calculations have raised questions about how the h(t)-ok bit is affected by the kappas (see https://alog.ligo-wa.caltech.edu/aLOG/index.php?callRep=35184 ). For CALIB_STATE_VECTOR documentation, see https://wiki.ligo.org/viewauth/Calibration/TDCalibReview#CALIB_STATE_VECTOR_definitions_during_ER10_47O2.

For each kappa, there is a "median-ok" bit and a "smooth-ok" bit. The "median-ok" bit tells whether the kappas are being gated due to bad coherence (thus "corrupting" the median with previously measured values). The "smooth-ok" bit tells whether the kappa values are in an expected range (1.0 +- 0.2). Since the coherence was bad, but the gating worked during the time documented in the aforementioned aLOG, the median-ok bit was off and the smooth-ok bit was still on. When we apply the kappas, h(t) OK depends on the smooth-ok bits and not on the median-ok bits, so it was unaffected by this. If, on the other hand, we do not apply the kappas, the kappa bits do not affect the h(t) OK bit.

The above description has been the case since the start of ER10, with the exception of one slight change: On 2016-11-29 Tuesday maintenance (near the start of O2), the smooth-ok bits were generalized further to also mark bad any time when the kappas are equal to their default values (indicating they have not yet been computed by the pipeline). This scenario is only expected to possibly occur at the start of the first lock stretch following a restart of the pipeline. The purpose of this change was to ensure that "h(t)-ok" implies "kappas-applied quality" when we are, in fact, applying the kappas. For documentation on this change, see: https://alog.ligo-la.caltech.edu/aLOG/index.php?callRep=29947 https://alog.ligo-wa.caltech.edu/aLOG/index.php?callRep=31926
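The bit logic described above can be sketched as follows. This is only an illustration of the stated dependencies, not the calibration pipeline's code: the function names, the inclusive range check, and the `default` argument are assumptions made for the example, and `other_checks_ok` stands in for every non-kappa condition that feeds h(t)-ok.

```python
def smooth_ok(kappa, expected=1.0, tolerance=0.2, default=None):
    """Hypothetical smooth-ok check for one kappa.

    Good when kappa lies within expected +- tolerance and, following the
    2016-11-29 change, is not still sitting at its (assumed) default value.
    """
    if default is not None and kappa == default:
        return False  # kappa not yet computed by the pipeline
    return abs(kappa - expected) <= tolerance


def ht_ok(other_checks_ok, kappas_applied, smooth_ok_bits):
    """Hypothetical h(t)-ok decision.

    The median-ok bits are deliberately absent: per the entry above they do
    not enter h(t)-ok. The smooth-ok bits matter only when kappas are applied.
    """
    if not kappas_applied:
        return other_checks_ok
    return other_checks_ok and all(smooth_ok_bits)


# Gated kappas (median-ok off) with values still in range leave h(t)-ok set.
bits = [smooth_ok(1.05), smooth_ok(0.95)]
print(ht_ok(True, True, bits))
```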

Eric Quintero, Rana

Summary:

Studying coherences between the ground and the LSC drives, we find that we can reduce the longitudinal forces to the mirrors in the 0.1-0.3 Hz band by a factor of ~2-5 in most cases, for both sites.

Details:

The recent success of the length to angle (L2A) feedforward at LLO led us to wonder about the source of so much low frequency motion. The SEI loop feedback and feedforward have been heavily tuned over the years to reduce much of the motion. By looking at the coherence and transfer function between the ground seismometers and the LSC*OUT signals (in a similar manner to what we did with the length to angle in May 2016), we are able to make an upper limit estimate on how much this can be reduced by implementing a global FF (just as we did in early 2010 for the S6 run using HEPI/PEPi).

We took 1 hour of data from 1000 UTC on March 12. The RMS of the ground motion in the 0.1-0.3 Hz band was 0.5 um/s, which is high, but only moderately high for the winter time. The summer time motion is more like 0.1 um/s.

The attached plots show:

upper plot: LSC control signal & LSC control signal after ideal subtraction

lower plot: top 5 signals used in the subtraction (in practice, using ~3 signals is enough to do the biggest part of the subtraction)

This subtraction is done on a bin-by-bin basis in the frequency domain, and as such, it's a best-case estimate. In reality, implementing a causal filter which avoids injecting too much noise at 3-20 Hz will degrade the subtraction performance somewhat. We are now working on making a frequency dependent weighting so as to make realizable filters.
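A minimal sketch of what a bin-by-bin, frequency-domain subtraction estimate can look like is given here, assuming segment-averaged spectra and a least-squares fit of the witness channels to the target in each frequency bin. It is not the code used for the attached plots; `segment_ffts`, `ideal_subtraction`, and `nperseg` are names chosen for this example, and the spectra are left in arbitrary units.

```python
import numpy as np


def segment_ffts(x, nperseg):
    """Split x into non-overlapping, mean-removed, Hann-windowed segments and FFT each."""
    nseg = len(x) // nperseg
    segs = np.reshape(np.asarray(x)[: nseg * nperseg], (nseg, nperseg))
    segs = segs - segs.mean(axis=1, keepdims=True)
    return np.fft.rfft(segs * np.hanning(nperseg), axis=1)


def ideal_subtraction(target, witnesses, nperseg):
    """Return (power_before, power_after) per frequency bin, arbitrary units.

    In each bin the witnesses are combined with the complex coefficients that
    minimize the averaged residual power. The coefficients are acausal and
    unconstrained, so the result is a best-case estimate. The number of
    segments must exceed the number of witnesses, and few averages bias the
    residual low.
    """
    t = segment_ffts(target, nperseg)                                    # (nseg, nfreq)
    w = np.stack([segment_ffts(c, nperseg) for c in witnesses], axis=2)  # (nseg, nfreq, nwit)
    nseg = t.shape[0]
    p_tt = np.mean(np.abs(t) ** 2, axis=0)
    # Witness cross-spectral matrix and witness-to-target cross-spectra per bin.
    s_ww = np.einsum("sfi,sfj->fij", w.conj(), w) / nseg
    s_wt = np.einsum("sfi,sf->fi", w.conj(), t) / nseg
    # Normal equations per bin: s_ww @ coeffs = s_wt.
    coeffs = np.linalg.solve(s_ww, s_wt[..., None])[..., 0]
    removed = np.real(np.einsum("fi,fi->f", s_wt.conj(), coeffs))
    return p_tt, p_tt - removed
```

Restricting `witnesses` to the few most coherent channels is a quick way to check the remark above that about three signals do most of the subtraction.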

In the attached channel list, you can see that all ground seismometers and tiltmeters were used.

The BRSs were being used at the time this data was taken; this is the nominal seismic configuration. The window you looked at was a relatively low wind time (~5 m/s according to the summary pages), so end station floor tilt probably wasn't a big effect. And based on a study RobertS did (alog 27170), we think tilt is only coherent over short distances, so the BRS probably wouldn't directly show up here. What we use the BRS for is doing tilt subtraction from the end station STS, and using that super-sensor for FF on the end station ISIs. We store that tilt subtracted ground signal in the science frames (since June 2016) as H1:ISI-GND_SENSCOR_ETMX/Y_SUPER_X/Y_OUT_DQ.

Evan, Miriam,

We continued the blip-like injections as in aLog 35116. This time we could inject a few different shapes.

In the first set we injected a sine-gaussian with frequency 200 Hz. Again we started quiet; only the times marked with * can be seen in DARM:

1174937465

1174937493

1174937520

1174937554 *

1174937649

1174937693 *

1174937758 *

1174937786 *

1174937836 *

In the second set we injected one of the blip glitches that showed a strong residual after the subtraction of the ETMY L2 MASTER signal from the NOISEMON (see aLog 34694). Since we are injecting in the DRIVEALIGN, we took the shape of the blip in that channel and injected that. Times (all can be seen in DARM, they should have three different SNRs):

1174937920 *

1174937956 *

1174938062 *

1174938144 *

1174938198 *

1174938231 *

In the final set, we injected a filtered step function that was sent to us by Andy. The first time was too quiet and did not show up in DARM, the second shows up quietly, and the third was fairly loud:

1174938333.5

1174938365.5 *

1174938397.5 *

Right after injecting the last one, the spectrum was showing some mid frequency (100 - 300 Hz) noise, which can be seen together with the injection in this omega scan. We do not believe this noise originated from our injection, and we saw similar noise about half an hour later when we were back in observing mode, but we will try to inject it again at another opportunistic time just as a sanity check (L1 came back up after our last injection so we didn't want to take any more time).

NOTE: as with the set of injections in the aLog referenced above, and as also pointed out in aLog 35223 (first comment), these injections were performed while L1 was down and with H1 in commissioning mode. This means that none of these injections happened in coincident time, and that they are not included in analysis ready segments (as can be seen, for instance, in the summary pages).
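To cross-check the injection times above against the UTC log entries, a small conversion helper is sketched here. It assumes the listed times are GPS seconds and that the GPS-UTC offset is the 18 s in effect since 2017-01-01; `gps_to_utc` is a name made up for this example, and a real analysis would normally use a maintained leap-second table.

```python
from datetime import datetime, timedelta, timezone

GPS_EPOCH = datetime(1980, 1, 6, tzinfo=timezone.utc)
GPS_MINUS_UTC_S = 18  # assumption: fixed offset, valid for dates after 2017-01-01


def gps_to_utc(gps_seconds):
    """Convert GPS seconds to an aware UTC datetime using a fixed leap-second offset."""
    return GPS_EPOCH + timedelta(seconds=gps_seconds - GPS_MINUS_UTC_S)


# First sine-gaussian injection of the first set.
print(gps_to_utc(1174937465).isoformat())
```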

19:21UTC

H1 will stay out of Observing until NOISEMON testing/injections are completed OR Livingston comes back up

19:51 Intention bit Undisturbed

HAM 6 pressure spiked at around 23:00 UTC time on 3/29.

This looks like about the same time that we were testing the fast shutter. Do we get this kind of pressure spike during a normal lockloss?

We don't typically see spikes like this. We have been monitoring pressures across the site, including HAM 6, in 48 hr increments for a few months now to detect peculiar spikes like this.

Note that we saw several spikes like this before the failure of the OMC which Daniel attributed to "liquid glass": https://alog.ligo-wa.caltech.edu/aLOG/index.php?callRep=28840

Based on the last two stretches of the BRSY DRIFTMON, this signal is trending down at 180 cts/day, see attached. It is currently sitting at -13500cts and it looks like this is a pretty good spot in the disturb/recover cycle for forecasting.

Next Tuesday 4 April, it should be at ~-14500cts, which is okay for operation. If the BRS is not recentered until 11 April, the DRIFTMON will be ~-15700cts. Not sure if we can wait that long. Krishna is contemplating coming over for the 4 April Maintenance day for this.
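The forecast above is a straight-line extrapolation, which can be reproduced roughly as follows. The sketch assumes a constant drift of -180 cts/day starting from -13500 cts on 30 March, and the year 2017 is inferred rather than stated in this entry; `forecast_driftmon` is a hypothetical helper. With these inputs the extrapolation gives about -14400 cts and -15660 cts, close to the rounded values quoted.

```python
from datetime import date


def forecast_driftmon(current_cts, drift_cts_per_day, today, target_day):
    """Linear extrapolation of the DRIFTMON value to target_day."""
    days = (target_day - today).days
    return current_cts + drift_cts_per_day * days


today = date(2017, 3, 30)  # assumption: year inferred, not given in the entry
for target_day in (date(2017, 4, 4), date(2017, 4, 11)):
    print(target_day, forecast_driftmon(-13500, -180, today, target_day))
```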

15:13UTC

TITLE: 03/30 Day Shift: 15:00-23:00 UTC (08:00-16:00 PST), all times posted in UTC

STATE of H1: Commissioning

OUTGOING OPERATOR: Patrick

CURRENT ENVIRONMENT:

Wind: 16mph Gusts, 12mph 5min avg

Primary useism: 0.06 μm/s

Secondary useism: 0.28 μm/s

QUICK SUMMARY:

15:05UTC Richard McCarthy requested access to the LVEA to make a physical measurement of a TCS table to correct a drawing. This was allowed due to LLO being down. Keita was consulted.

FAMIS #6891 H1:ISI-ITMX_ST1_CPSINF_V1_IN1_DQ is definitely elevated.

Thanks Patrick. Yes, this is elevated. It isn't extreme but it is certainly not like the others. Better we catch it here than wait for it to get bad and be noisy in band. We'll start with a gauge board unseat/reseat maneuver at the next opportunity.

FRS 7763.

[Cheryl, Daniel, Jenne, Keita, Nutsinee, Kiwamu]

As planned, we locked PRMI with the carrier light resonant. At the very end of the test, we had one good (but short) lock in which the PRC power reached 90% of that for the full interferometer case.

Here is a time line for the last good lock stretch.

See the attached for the trend of POP_A_LF. Assuming a power recycling gain of 30 for the full interferometer, we can estimate the usual PRC power to be 30-ish W * 30 = 900 W during O2. Today we achieved 90% of it according to POP_A_LF. Therefore 900 W * 0.9 = 810 W. Considering the 50% splitting ratio at the BS, ITMX received ~400 W during the last high power test today. In addition, we had a number of lock stretches that lasted longer but with smaller PSL power prior to the last high power test.
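The arithmetic above can be written out as a short sketch. The numbers are the ones quoted in the entry (30 W input, recycling gain of 30, 90% of the full-interferometer PRC power, 50% beamsplitter split); the function and argument names are chosen for this example only.

```python
def prc_power_estimate(input_power_w, recycling_gain, fraction_of_full, bs_split=0.5):
    """Return (full-IFO PRC power, PRC power achieved, power toward one ITM) in watts."""
    full_w = input_power_w * recycling_gain
    achieved_w = full_w * fraction_of_full
    per_itm_w = achieved_w * bs_split
    return full_w, achieved_w, per_itm_w


full_w, achieved_w, per_itm_w = prc_power_estimate(30.0, 30.0, 0.9)
print(f"full IFO PRC: {full_w:.0f} W, PRMI test: {achieved_w:.0f} W, toward ITMX: {per_itm_w:.0f} W")
```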

Also, technically speaking, MICH wasn't locked at a dark fringe. Instead it was locked at a slightly brighter fringe using the variable finesse technique (35198). In addition, during the last test, Daniel and Keita unplugged a BNC cable from the trigger PD by HAM6, which triggers the fast shutter in HAM6, so that the shutter could stay closed throughout the test to protect some optics from damage. After we finished today's test, Daniel put the cable back in so that we can go back to interferometer locking.

Hartmann sensor test results:

The last lock was initialized at 1174865790, reached full power approximately 210s afterwards and then was lost around 1174866190.

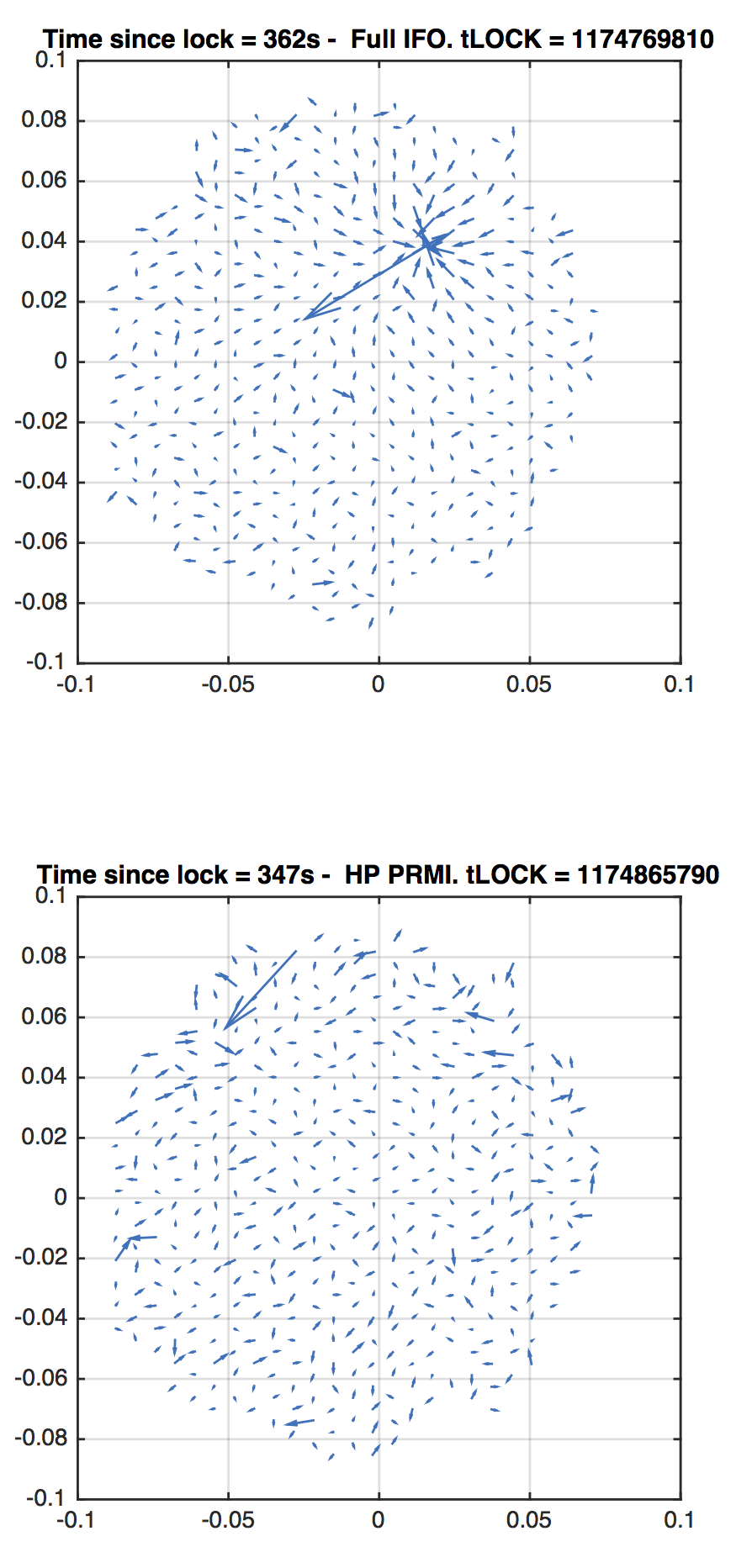

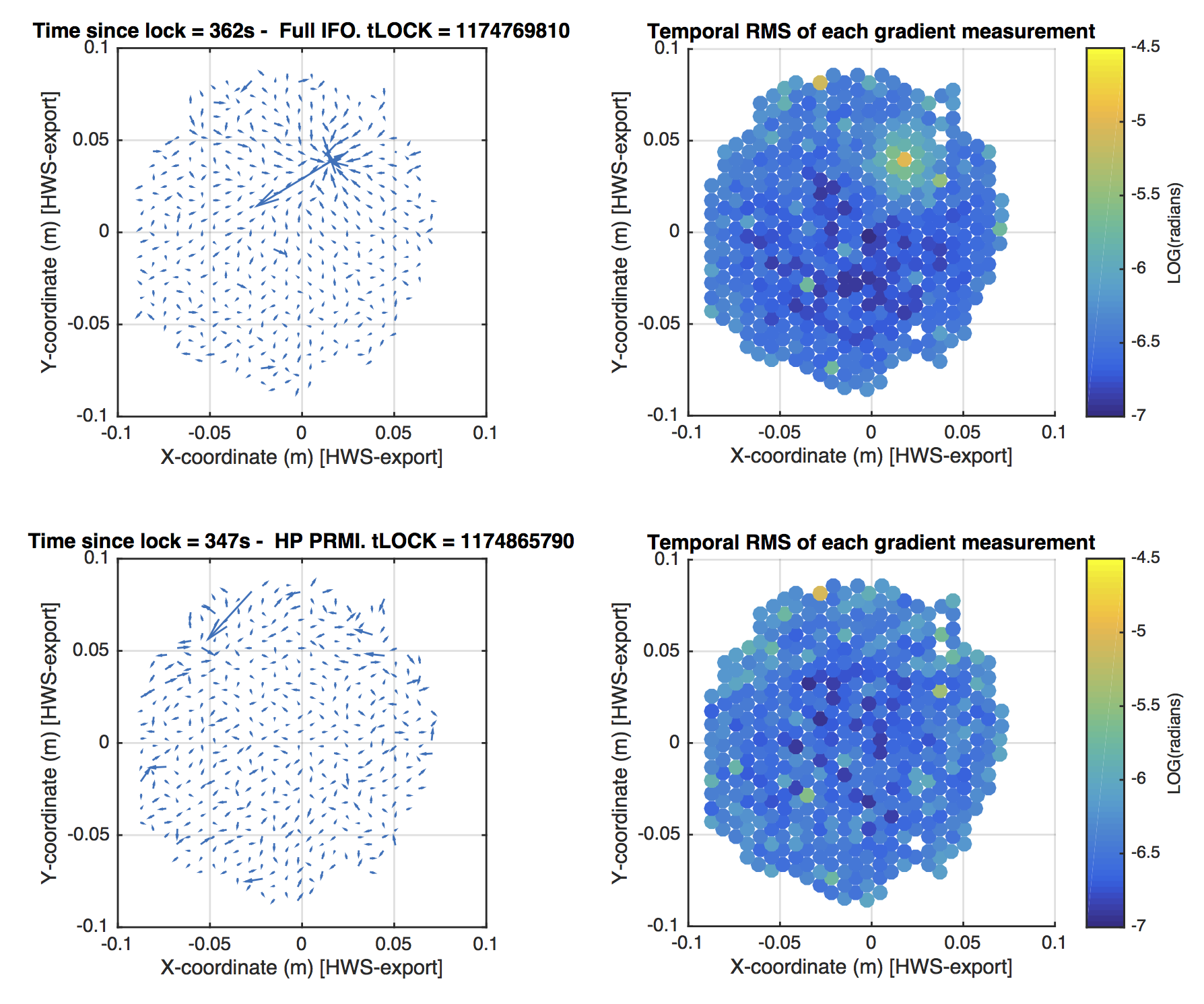

I've plotted the gradient field data for the PRMI test, 350s after locking (cleaning up errant spots). For reference, I've also plotted the gradient field data for the full IFO, 360s after locking. The arrows in both cases are scaled such that they represent almost exactly the same length gradients in the two plots.

The bottom line: the full IFO shows the thermal lens. The high power PRMI does not.

Here's a GIF animation from the time segment for your amusement. There are so few data points that the animation has to be slowed down a bit. The last 120s was some optic misalignment leading to the lockloss.

I'm adding the following plot to show the uncertainty in each of the individual measurements in the HWS gradient field. This should help guide your eye as to which data points are noisy relative to their neighbours. In other words, here is the spatial sensitivity of the Hartmann sensor. This is in the raw exported coordinate system of the Hartmann sensor (that is, scaled to the ITM size but not oriented and not centered).

For example, notice that the point around [-0.03, 0.08] is unusually noisy. So the large arrow associated with it is not signal but noise.

For each data point, the RMS in the gradient of that data point is determined for the 600-1000s of data that is available. That is, for each data point:

U_i = d(WF)/dx, V_i = d(WF)/dy, where i is the i-th measurement of that data point.

RMS = SQRT(VAR(U) + VAR(V))

Note that RMS of the gradient data near the thermal lens is larger because of the presence of signal, not noise.
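The per-point RMS defined above can be sketched with the standard library as follows. Population variance is assumed here (the entry does not say which estimator was used), and `gradient_rms` is a hypothetical helper that takes the repeated U and V gradient measurements of a single HWS data point.

```python
import math
from statistics import pvariance


def gradient_rms(u_samples, v_samples):
    """RMS = sqrt(VAR(U) + VAR(V)) for one data point's repeated gradient measurements."""
    return math.sqrt(pvariance(u_samples) + pvariance(v_samples))


# Made-up numbers purely to show the call; not HWS data.
print(gradient_rms([1.0, 2.0, 3.0, 4.0], [0.5, 0.5, 0.5, 0.5]))
```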

[Kiwamu, Jenne]

How to lock the PRMI-only

If it unlocks, do the following (quickly if possible, to reduce the amount of time we're saturating the optics):

Note to self for the future: We weren't ever able to switch to an RF signal for MICH, and so were not able to lock on the true dark fringe. Since we were able to increase the PSL injected power enough to have enough power in the PRC for this test, and we were running low on time, we did not pursue this.

Also, PR3 and BS needed to be hand-adjusted in pitch to keep their oplev signals constant as we reduced the MICH offset, and especially after we further increased the power. Rather than commissioning an ASC setup for this one-day test, we just had two human-in-the-loop "servos".