Jason, Betsy

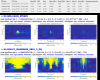

Yesterday during the maintenance period, Jason and I looked at the beams coming from ITMY and CPY in order to "map" where the beams are relative to their backscatter into other places in the chamber. This is similar to what LLO did when they mechanically repointed their CPY in-chamber in an attempt to mitigate scatter (alog 28417). Attached is our "map", which will eventually tie into a larger picture of scatter and the "correct" pointing of CPY (TBD). A few facts that I dug up for the record:

LHO CPY CP05 has the thin side to the +X side

LLO CPY CP03 has the thin side to the +X side

The original installation IAS pointing of CPY and ITMY (with 0,0 OSEM alignment offsets) roughly matches where we find them now (also with 0,0 OSEM alignment offsets); namely, the CPY pitch was offset from ITMY-AR by a couple mRad. There has been no major drift in alignment over 2 years, although the offset is now larger by about a factor of 2, and we are not sure why. ITMY alignment has changed, though, due to commissioning/locking work over those 2 years. Recall that the original large CP pointing spec of "within ~1.4mRad" was set by other factors such as ESD capability, and not necessarily by scatter. We may now be refining this spec for scatter mitigation.

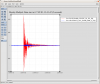

Our map as shown in the attached:

CPY-HR is 2.2mRad away from ITMY-AR in PITCH, while it is not far off in YAW (well under a mRad). The IAS spec was ~1.4mRad, and we installed it at ~0.9mRad.
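For quick reference, the pitch numbers above can be compared with a few lines of arithmetic. This is only a sketch: it assumes the ~1.4mRad spec is an upper bound on the magnitude of the CPY-to-ITMY-AR offset and that the ~0.9mRad install value and the 2.2mRad measurement describe the same quantity. The function and variable names are made up for illustration.

```python
# Pitch values quoted in this entry, in mrad (CPY-HR relative to ITMY-AR).
MEASURED_PITCH_MRAD = 2.2   # what we find now
IAS_SPEC_MRAD = 1.4         # original IAS pointing spec (assumed to be an upper bound)
INSTALLED_PITCH_MRAD = 0.9  # value at installation


def pitch_summary(measured, spec, installed):
    """Compare the measured pitch offset against the spec and the install value."""
    return {
        "excess_over_spec_mrad": round(measured - spec, 3),
        "ratio_to_installed": round(measured / installed, 2),
        "within_spec": measured <= spec,
    }


summary = pitch_summary(MEASURED_PITCH_MRAD, IAS_SPEC_MRAD, INSTALLED_PITCH_MRAD)
print(summary)
```

Under these assumptions the present pitch offset is about 0.8mRad outside the original spec and roughly 2.4 times the installed value.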

The CPY pitch could be repointed to better match ITMY-AR, which may reduce scatter, but we do not currently have enough electronic range, so this would need to be done mechanically during a vent. In the meantime, we could point the CPY as far as electronically possible and re-evaluate the scatter.