J. Kissel

After Keita began using DELTAL_EXTERNAL_DQ for his subtraction studies, I was reminded that we need to update the control room wall FOM template for the recent DARM loop model changes (see LHO aLOG 33585 and 33434). It also reminded me that here at LHO we have not switched over to the more precise method of adding the CAL-CS DELTAL_CTRL vs DELTAL_RESIDUAL developed at LLO (see LLO aLOGs 28268 and 28321), which allows us to correct for all of the high-frequency limitations of the front-end CAL-CS DARM loop model replica (see II/ET Ticket 4635) without being limited to integer clock cycles.

As such, I copied a script from Shivaraj,

/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/ER10/L1/Scripts/CALCS_FE/CALCSvsDARMModel_20161125.m

that computes what this relative delay should be as a function of frequency. Because it needs interferometer-specific information as he's written it, I've put H1's version for the latest reference model time (2017-01-24, after the 4.7 kHz notch was added to the DARM filter bank; again, see LHO aLOG 33585) in an appropriate place,

/ligo/svncommon/CalSVN/aligocalibration/trunk/Runs/O2/H1/Scripts/CALCS_FE/CALCSvsDARMModel_20170124.m

and updated it accordingly. The function also has the bonus of checking the CAL-CS model implementation against the supposedly perfect Matlab model.

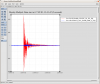

I attach the output.

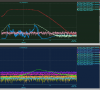

The first plot shows the ratio between the CAL-CS replica (re-exported back into Matlab) and the perfect Matlab DARM loop, for each actuation and sensing path. This ratio should expose everything the CAL-CS model is missing:

- computational delays,

- the light-travel time delay,

- the pre-warping of high-frequency roll-off filters,

- the response of uncompensated high-frequency electronics, and

- the digital and analog AA or AI filters.

What CAL-CS lacks is accounted for in the GDS pipeline to form the product consumed by the astrophysical searches, GDS (or DCS)-CALIB_STRAIN. However, since the front-end / CAL-CS is incapable of precisely handling these effects for the real-time control room product DELTAL_EXTERNAL, and they're predominantly high-frequency effects, we have in the past approximated them by adding a relative delay between the sensing and actuation paths, as explained in LHO aLOGs 32542, 21788 or 21746.
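To illustrate why a single delay can stand in for predominantly high-frequency effects, here is a minimal sketch in Python (not the Matlab script used for this entry). It assumes a single real pole as a stand-in for one unmodeled roll-off; the pole frequency `POLE_HZ` and all function names are hypothetical and chosen only for illustration. Well below the pole, its phase is nearly linear in frequency, so it looks like a constant delay of 1/(2 pi f_p); closer to the pole the equivalent delay starts to depend on frequency.

```python
import math


def pole_phase_rad(freq_hz, pole_hz):
    """Phase of a single real pole, 1 / (1 + 1j*f/f_p), in radians."""
    return -math.atan(freq_hz / pole_hz)


def pole_effective_delay_s(freq_hz, pole_hz):
    """Delay tau whose phase -2*pi*f*tau matches the pole's phase at freq_hz."""
    return -pole_phase_rad(freq_hz, pole_hz) / (2.0 * math.pi * freq_hz)


def low_frequency_delay_s(pole_hz):
    """Limit of the effective delay for f << f_p."""
    return 1.0 / (2.0 * math.pi * pole_hz)


POLE_HZ = 10e3  # hypothetical pole frequency, for illustration only

for f in (10.0, 100.0, 1000.0, 5000.0):
    tau = pole_effective_delay_s(f, POLE_HZ)
    print(f"{f:7.0f} Hz: equivalent delay {tau * 1e6:.3f} usec")
```

The same reasoning applies to any collection of high-frequency poles and true delays: their low-frequency phase adds up to something close to a single delay, and the approximation degrades as the frequency approaches the lowest of those features.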

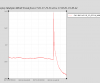

The second plot shows the same sensing function ratio, but now with the entire actuation function summed together.

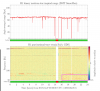

The phase difference between the two functions on page two, as a function of frequency, is recast as a delay and converted to 16 kHz clock cycles in the third attachment.
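The conversion from phase difference to delay to clock cycles can be sketched as follows. This is a Python illustration, not the Matlab script itself, and it rests on two assumptions: that "16 kHz" means the 16384 Hz front-end rate (consistent with the numbers quoted in this entry), and that a delay tau is represented as exp(-2 pi i f tau), so a positive delay gives a negative phase. The function names are hypothetical.

```python
import cmath
import math

CLOCK_RATE_HZ = 16384.0  # assumed: "16 kHz" front-end rate is 2**14 Hz


def phase_to_delay_s(phase_rad, freq_hz):
    """Delay tau such that exp(-2j*pi*f*tau) has the given phase (assumed sign convention)."""
    return -phase_rad / (2.0 * math.pi * freq_hz)


def delay_to_cycles(delay_s, clock_rate_hz=CLOCK_RATE_HZ):
    """Express a delay in seconds as a (possibly non-integer) number of clock cycles."""
    return delay_s * clock_rate_hz


# Round trip with a synthetic pure delay of 7 clock cycles, evaluated at 68 Hz.
freq_hz = 68.0
true_delay_s = 7.0 / CLOCK_RATE_HZ
ratio = cmath.exp(-2j * math.pi * freq_hz * true_delay_s)
recovered_cycles = delay_to_cycles(phase_to_delay_s(cmath.phase(ratio), freq_hz))
print(f"recovered delay: {recovered_cycles:.6f} cycles")
```

Note that `cmath.phase` returns values in [-pi, pi], so for a real frequency vector reaching into the kHz range the phase would need to be unwrapped before dividing by frequency.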

Ideally, this phase difference / delay would be flat as a function of frequency, so we'd feel good about applying a single delay number between the paths, even if it were a non-integer number of 16 kHz clock cycles. This is what LLO's looked like in LLO aLOG 28321, and what they installed according to the last paragraph of LLO aLOG 29899.

However, LHO's is *not* flat, so I'm not sure what to do.

Also, at the DARM loop Unity Gain Frequency (68 Hz) -- where you would expect the delay needs to be perfect, at the crossover between actuation and sensing functions -- the phase difference or delay is exactly 427 [usec], i.e. 7 16 kHz clock cycles, which is what the delay is currently set to.
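As a quick arithmetic check of the numbers above, the following Python sketch converts between clock cycles and microseconds. It assumes the "16 kHz" clock is 16384 Hz; the helper names are hypothetical.

```python
CLOCK_RATE_HZ = 16384.0  # assumed: "16 kHz" front-end rate is 2**14 Hz


def cycles_to_usec(n_cycles, clock_rate_hz=CLOCK_RATE_HZ):
    """Duration of n_cycles clock cycles, in microseconds."""
    return n_cycles / clock_rate_hz * 1e6


def nearest_integer_cycles(delay_usec, clock_rate_hz=CLOCK_RATE_HZ):
    """Integer number of clock cycles closest to the given delay."""
    return round(delay_usec * 1e-6 * clock_rate_hz)


print(f"7 cycles = {cycles_to_usec(7):.3f} usec")
print(f"427 usec is closest to {nearest_integer_cycles(427.0)} cycles")
```

Under that assumption, 7 cycles and 427 usec agree to within a fraction of a microsecond.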

So... do we need an update? I'll converse with Joe and Shivaraj and decide from there.

-------------

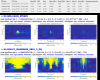

The DARM model and parameters used to generate the plots in this aLOG are:

trunk/Runs/O2/H1/params/2017-01-24/

modelparams_H1_2017-01-24.conf (r4241, lc: r4241)

trunk/Runs/O2/DARMmodel/src/

DARMmodel.m (r4241, lc: r4241)

computeDARM.m (r4241, lc: r4241)

computeActuation.m (r4241, lc: r4241)

computeSensing.m (r4093, lc: r4025)

trunk/Runs/O2/H1/Scripts/CALCS_FE/

CALCSvsDARMModel_20170124.m (r4263, lc: r4263)