Since we didn't have the interferometer today, I stitched together some previous measurements of frequency noise and transfer function. In particular:

alog 30610: Out-of-loop frequency noise sensors (POP9, REFL45, POP45). Caveat: The alog only accurately calibrated them at high frequency - I suspect the sensor disagreement at low frequency is a deviation from the simple scalar calibration.

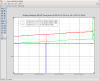

alog 29893: Transfer function in m/W (the template also has m/ct). Caveat 1: This was measured at 50W - we need it at 25W. Caveat 2: The transfer function is known to vary, see alog 30440.

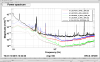

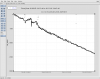

That said, I can ignore all the caveats for now (until we redo the measurements at 25W) and use the three out-of-loop frequency noise sensors to predict the DARM contribution. Specifically, I cast POP9, REFL45 and POP45 into REFL9 counts using alog 30610, and then apply the transfer function from alog 29893. I wouldn't trust the result to better than about a factor of 3 - mostly because we don't have an accurate frequency noise calibration of those sensors at low frequencies. But the result is in the attached plot.
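
For reference, the projection described above can be sketched roughly as follows. This is only an illustration and not the script used for the attached plot: the function name `project_to_darm`, the scalar `sensor_to_refl9`, and all numbers are hypothetical placeholders, and the real calibration factors and transfer function would have to come from alog 30610 and alog 29893. It assumes a single scalar calibration per sensor (the same simplification flagged in the caveat above) and uses the m/ct version of the transfer function magnitude.

```python
import numpy as np

def project_to_darm(freq, sensor_asd, sensor_to_refl9, tf_freq, tf_mag_m_per_ct):
    """Project an out-of-loop sensor spectrum into a DARM displacement ASD.

    freq            : frequency vector of the sensor spectrum [Hz]
    sensor_asd      : sensor amplitude spectral density [sensor ct/rtHz]
    sensor_to_refl9 : hypothetical scalar casting sensor counts into REFL9 counts
    tf_freq         : frequency vector of the measured transfer function [Hz]
    tf_mag_m_per_ct : transfer function magnitude, REFL9 counts to DARM [m/ct]
    """
    freq = np.asarray(freq, dtype=float)
    # Step 1: cast the sensor spectrum into equivalent REFL9 counts.
    refl9_equiv = np.asarray(sensor_asd, dtype=float) * sensor_to_refl9
    # Step 2: interpolate the transfer function magnitude onto the sensor
    # frequency vector (log-log interpolation, assumed adequate for a sketch).
    log_tf = np.interp(np.log(freq), np.log(tf_freq), np.log(tf_mag_m_per_ct))
    # Step 3: apply the transfer function to get the DARM contribution [m/rtHz].
    return refl9_equiv * np.exp(log_tf)

# Placeholder numbers only, not measured data.
freq = np.array([10.0, 100.0, 1000.0])
sensor_asd = np.array([2.0, 1.0, 0.5])
tf_freq = np.array([10.0, 1000.0])
tf_mag = np.array([1e-12, 1e-14])
darm = project_to_darm(freq, sensor_asd, 3.0, tf_freq, tf_mag)

# Factor-of-3 uncertainty band quoted above.
darm_lo, darm_hi = darm / 3.0, darm * 3.0
```

Running the same function once per sensor (POP9, REFL45, POP45) gives three independent predictions, and their spread at low frequency is a rough check on how far the scalar calibration can be trusted.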

Clearly, this needs to be redone with more precision. But if this theory holds up, then what we are seeing is REFL9 sensing noise (due to scatter or donut jitter or something else) being imprinted on frequency noise, which then couples to DARM.