WP12362 Upgrade h1susex to latest 5.3.0 RCG

To resolve a difference between the RCG 5.3.0 builds running on h1omc0 and h1susex, all models on h1susex were built against the latest 5.3.0. h1susex was rebooted as part of this upgrade. No DAQ restart was needed.

WP12358 Move cameras to new AMD servers

All the cameras from h1digivideo0 and h1digivideo1 were added to the four already running on h1digivideo4. This brings the number of cameras on this server to 15. All cameras are limited to 25 frames per second.

The cameras on h1digivideo3 (deb11) were moved to a new server, h1digivideo5.

The h1digivideo[0,1,3] machines were powered down. No changes were made to h1digivideo2.

A new camera MEDM was built showing the new layout.

See Jonathan's alog for details.

WP12356 Install independent 1PPS generator at EY

An independent 1PPS function generator was installed at EY and connected to the 5th port of the comparator, next to the CNS-II clock. This will be used to investigate when the CNS-II occasionally has a 500 ns offset applied.

WP12355 Picket Fence Upgrade

Erik upgraded the picket fence system.

WP12332 BSC Temperature Monitors

Fil worked on the BSC1 (ITMY) temperature monitor; it is now reading the correct temperature.

DAQ Restart

An EDC DAQ restart was needed for three changes:

H1EPICS_DIGVIDEO.ini: reflect the move of cameras from old to new software.

H1EPICS_CDSMON.ini: remove h1digivideo3's epics load mon channels, add those for h1digivideo5

H1EPICS_GRD.ini: add channels for new Guardian node PCALY_STAT

The DAQ restart followed our new EDC procedure, whereby the trend-writers were stopped before the EDC was restarted with its new configuration.

No major surprises, except that FW1 spontaneously restarted itself after running for about seven minutes.

Tue04Mar2025

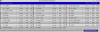

LOC TIME HOSTNAME MODEL/REBOOT

08:00:38 h1susex h1iopsusex <<< First we restarted h1susex models with new RCG

08:00:52 h1susex h1susetmx

08:01:06 h1susex h1sustmsx

08:01:20 h1susex h1susetmxpi

08:08:12 h1susex h1iopsusex <<< Then we rebooted h1susex

08:08:25 h1susex h1susetmx

08:08:38 h1susex h1sustmsx

08:08:51 h1susex h1susetmxpi

13:46:16 h1susauxb123 h1edc[DAQ] <<< New video, load_mon and grd ini loaded

13:47:04 h1daqdc0 [DAQ] <<< 0leg start

13:47:15 h1daqfw0 [DAQ]

13:47:15 h1daqtw0 [DAQ]

13:47:19 h1daqnds0 [DAQ]

13:47:24 h1daqgds0 [DAQ]

13:48:08 h1daqgds0 [DAQ] <<< gds0 needed a second restart

13:51:17 h1daqdc1 [DAQ] <<< 1leg start

13:51:26 h1daqfw1 [DAQ]

13:51:27 h1daqtw1 [DAQ]

13:51:28 h1daqnds1 [DAQ]

13:51:35 h1daqgds1 [DAQ]

14:00:36 h1daqfw1 [DAQ] <<< spontaneous restart of fw1
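
For anyone wanting to pull intervals out of a restart log like the one above, here is a minimal Python sketch. It assumes each row has the form `HH:MM:SS hostname rest-of-line` with all times on the same day. The helper names `parse_rows` and `gaps_between_restarts` are hypothetical, made up for this illustration, and are not part of any CDS tool. The rows embedded in the sketch are copied from the log.

```python
from datetime import datetime

# A few rows copied from the restart log above (local time, host, note).
LOG = """\
13:47:24 h1daqgds0 [DAQ]
13:48:08 h1daqgds0 [DAQ] <<< gds0 needed a second restart
13:51:26 h1daqfw1 [DAQ]
14:00:36 h1daqfw1 [DAQ] <<< spontaneous restart of fw1
"""

def parse_rows(text):
    """Return (time, hostname, rest) tuples for each non-empty log line."""
    rows = []
    for line in text.splitlines():
        if not line.strip():
            continue
        # Split into timestamp, hostname, and the remainder of the line.
        stamp, host, rest = line.split(maxsplit=2)
        rows.append((datetime.strptime(stamp, "%H:%M:%S"), host, rest))
    return rows

def gaps_between_restarts(rows, host):
    """Seconds between consecutive log entries for one host."""
    times = [t for t, h, _ in rows if h == host]
    return [(b - a).total_seconds() for a, b in zip(times, times[1:])]

rows = parse_rows(LOG)
fw1_gaps = gaps_between_restarts(rows, "h1daqfw1")
gds0_gaps = gaps_between_restarts(rows, "h1daqgds0")
print("fw1 gaps (s):", fw1_gaps)
print("gds0 gaps (s):", gds0_gaps)
```

For these rows the gap between the two h1daqfw1 entries is 550 s and between the two h1daqgds0 entries is 44 s. The log entries presumably mark when each process came up, so a gap is the time between two starts rather than a direct measurement of uptime.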

LVEA and CER CDS Wifi Problems

Erik reconfigured the LVEA and CER WAPs from DHCP to static IP addresses. This resolved the immediate connection problem between wap-control and these units. Details can be found in the FRS ticket.