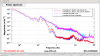

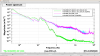

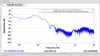

Yesterday, I switched the HAM3 ISI to use the "HAM5" STS (now actually in the BierGarten), did an initial measurement to make sure the ISI was performing okay, then left it overnight. This morning I compared its performance to HAM2, and I think we should switch all of the ISIs to use this seismometer. The three attached plots show the GS13 spectra for X, Y and Z over a ~1 hour period overnight. In all DOFs, over most frequencies, HAM3 now does better than HAM2, whereas previously they had performed similarly (except for HAM3's transient 0.6 Hz mystery line). Most importantly, HAM3 does much better in the band where sensor correction provides most of our isolation (~0.1-1 Hz). This is an easy change and should be totally transparent.

I can only speculate at the moment, but I wonder whether HAM3's better sensor-correction performance relative to HAM2 is due not to a better sensor, but to HAM3 being ~15 m closer to it than HAM2 is. Is HAM2 using the "HAM2" STS-A, and is that sensor still right under HAM2? I've lost track of Hugh's STS juggling...

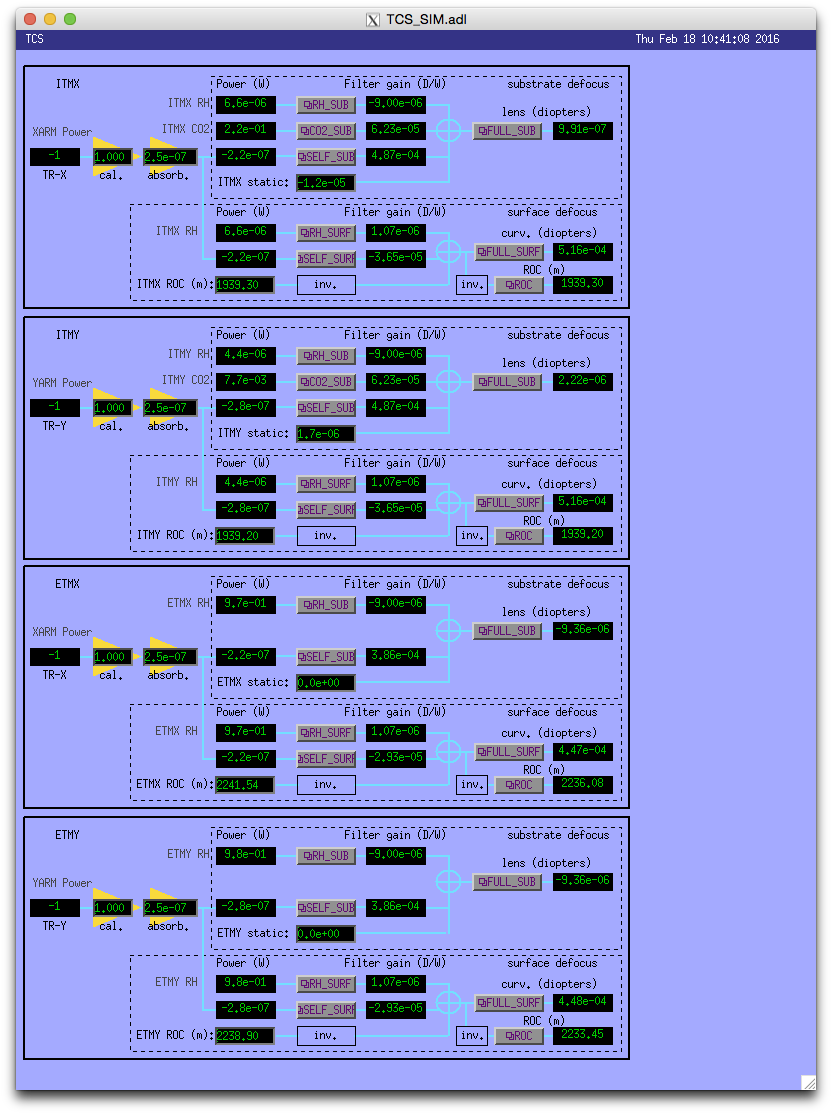

All corner station platforms were using the ITMY (STS2-B) sensor, but Jim has been switching the platforms over to the HAM5 (STS2-C) sensor, which has been moved to the BierGarten near STS2-B.