Robert, Sheila, Evan, Gabriele

While looking at one of Robert's injections from yesterday, we noticed a dangerous bug that had previously been reported by Annamaria and Robert (20410). This is also the subject of https://bugzilla.ligo-wa.caltech.edu/bugzilla3/show_bug.cgi?id=804

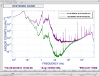

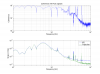

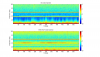

When we changed the Stop frequency on the template, without changing anything else, the noise in DARM changed.

This means we can't look at ISI, ASC, PEM, or SUS channels at the same time as DARM channels and get a proper representation of the DARM noise, which is what we need to be doing right now to improve our low frequency noise. Can we trust coherence measurements between channels that have different sampling rates?

This is not the same problem as the one reported by Robert and Keita in alog 22094.

People have looked at the DTT manual and speculate that this could be caused by the aggressive whitening on this channel, combined with the fact that DTT downsamples before taking the spectrum.

If there is no near-term prospect for fixing the problem in DTT, then we would want less aggressive whitening for CAL_DELTA_L_EXTERNAL.

I spent a little time looking into this and added some details to the bug report. As you said, it seems to be an issue of high frequency noise leaking through the downsampling filter in DTT.

Until this gets fixed, any reason you can't use DARM_IN1 instead of DELTAL_EXTERNAL as your DARM channel? It's better whitened, so it doesn't suffer from this problem.
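To make the leakage mechanism concrete, here is a minimal sketch in Python. It is not DTT's actual decimation filter, which is not described in this entry. It assumes a generic 65-tap Hamming-windowed sinc low-pass, a decimation factor of 8 from 16384 Hz, and two made-up test tones: a small one at 100 Hz and one 120 dB larger at 7000 Hz. All of these values are illustrative assumptions. Whatever fraction of the large tone survives the filter's finite stopband attenuation shows up, after decimation, at an alias frequency inside the band of interest.

```python
import numpy as np

def windowed_sinc_lowpass(numtaps, cutoff_hz, fs_hz):
    """Hamming-windowed sinc low-pass FIR, normalised to unity gain at DC."""
    n = np.arange(numtaps) - (numtaps - 1) / 2.0
    h = np.sinc(2.0 * cutoff_hz / fs_hz * n) * np.hamming(numtaps)
    return h / h.sum()

def fir_gain(h, f_hz, fs_hz):
    """Magnitude of the FIR frequency response at one frequency."""
    n = np.arange(len(h))
    return abs(np.sum(h * np.exp(-2j * np.pi * f_hz / fs_hz * n)))

def tone_amplitude(x, f_hz, fs_hz):
    """Amplitude of a bin-centred sinusoid in x, read off the FFT."""
    spectrum = np.fft.rfft(x)
    k = int(round(f_hz * len(x) / fs_hz))
    return 2.0 * abs(spectrum[k]) / len(x)

# Illustrative assumptions, not DTT settings.
FS_IN = 16384.0          # input sample rate [Hz]
Q = 8                    # decimation factor
FS_OUT = FS_IN / Q       # output sample rate [Hz]
F_LOW, A_LOW = 100.0, 1.0        # small in-band tone
F_HIGH, A_HIGH = 7000.0, 1.0e6   # large out-of-band tone (120 dB above)

# Frequency at which the out-of-band tone lands after decimation.
F_ALIAS = abs(F_HIGH - round(F_HIGH / FS_OUT) * FS_OUT)

t = np.arange(int(2 * FS_IN)) / FS_IN
x = A_LOW * np.sin(2 * np.pi * F_LOW * t) + A_HIGH * np.sin(2 * np.pi * F_HIGH * t)

h = windowed_sinc_lowpass(65, 800.0, FS_IN)

# Filter (steady-state part only), keep every Q-th sample, keep one second.
y = np.convolve(x, h, mode="valid")[::Q][: int(FS_OUT)]

low_amp = tone_amplitude(y, F_LOW, FS_OUT)
alias_amp = tone_amplitude(y, F_ALIAS, FS_OUT)
stopband_db = 20 * np.log10(fir_gain(h, F_HIGH, FS_IN))

print(f"filter gain at {F_HIGH:.0f} Hz: {stopband_db:.1f} dB")
print(f"in-band tone at {F_LOW:.0f} Hz: amplitude {low_amp:.3g}")
print(f"aliased tone at {F_ALIAS:.0f} Hz: amplitude {alias_amp:.3g}")
```

The aliased amplitude equals the original amplitude times the filter gain at 7000 Hz, so the leak is only negligible if the stopband attenuation exceeds the dynamic range of the channel. That is why a better-whitened channel is less exposed to this effect.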

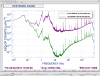

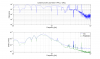

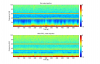

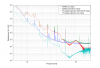

The dynamic range issue in the whitened channel can be improved by switching to five zeros at 0.3 Hz and five poles at 30 Hz.

The current whitening settings (five zeros at 1 Hz, five poles at 100 Hz) produce more than 70 dB of variation from 10 Hz to 8 kHz, and 130 dB of variation from 0.05 Hz to 10 Hz.

The new whitening settings can give less than 30 dB of variation from 10 Hz to 8 kHz, and 90 dB of variation from 0.05 Hz to 10 Hz.

We could also use 6 zeros at 0.3 Hz and 6 poles at 30 Hz, which would give 30 dB of variation from 10 Hz to 8 kHz, and 66 dB of variation from 0.05 Hz to 10 Hz.
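As a rough cross-check of the three options above, the sketch below computes how much gain each whitening filter adds across the two bands. It assumes ideal real zeros and poles with unity gain at DC, and the function names are made up for illustration. Note that these are the filters' own gain differences, not the variation of the whitened spectrum quoted above, which also depends on the shape of the DARM spectrum.

```python
import math

def whitening_gain_db(f_hz, zero_hz, pole_hz, order):
    """Gain in dB at f_hz for `order` real zeros at zero_hz and
    `order` real poles at pole_hz, normalised to 0 dB at DC."""
    zero_term = math.hypot(1.0, f_hz / zero_hz)
    pole_term = math.hypot(1.0, f_hz / pole_hz)
    return 20.0 * order * math.log10(zero_term / pole_term)

def gain_span_db(f_lo_hz, f_hi_hz, zero_hz, pole_hz, order):
    """Gain added by the filter between f_lo_hz and f_hi_hz, in dB."""
    return (whitening_gain_db(f_hi_hz, zero_hz, pole_hz, order)
            - whitening_gain_db(f_lo_hz, zero_hz, pole_hz, order))

# (zero frequency [Hz], pole frequency [Hz], number of zero/pole pairs)
SETTINGS = {
    "5 zeros at 1 Hz, 5 poles at 100 Hz": (1.0, 100.0, 5),
    "5 zeros at 0.3 Hz, 5 poles at 30 Hz": (0.3, 30.0, 5),
    "6 zeros at 0.3 Hz, 6 poles at 30 Hz": (0.3, 30.0, 6),
}

spans = {}
for label, (zero_hz, pole_hz, order) in SETTINGS.items():
    low_band = gain_span_db(0.05, 10.0, zero_hz, pole_hz, order)
    high_band = gain_span_db(10.0, 8000.0, zero_hz, pole_hz, order)
    spans[label] = (low_band, high_band)
    print(f"{label}: {low_band:6.1f} dB over 0.05-10 Hz, "
          f"{high_band:6.1f} dB over 10 Hz-8 kHz")
```

Under these assumptions the filter adds about 100 dB between 10 Hz and 8 kHz with the current settings, and about 50 dB or 60 dB with the five- or six-fold 0.3 Hz / 30 Hz options. The lower corner frequencies also put more gain between 0.05 Hz and 10 Hz, which works against the steep low-frequency part of the spectrum.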

The 6x p/z solution was implemented: LHO#25778