I've been working on the Guardian script for locking the CO2 lasers. It now has the following states programmed:

DOWN: Resets the laser PZT position to the center of its range, resets the chiller temperature to 20 °C and turns off all servos.

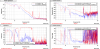

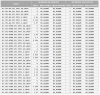

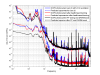
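The DOWN resets can be sketched in plain Python as below. The setting names (`PZT_OFFSET`, `CHILLER_SETPOINT`, the servo switch names) and the `write` callback are hypothetical stand-ins for whatever channels the real script writes. Turning the servos off before the resets is an assumption about a sensible order and not necessarily what TCS_CO2.py does.

```python
# Hypothetical sketch of the DOWN resets. Setting names, the write()
# callback and the ordering are assumptions, not the code in TCS_CO2.py.

PZT_CENTER_V = 37.5       # center of the PZT range (from RESET_PZT_VOLTAGE)
CHILLER_DEFAULT_C = 20.0  # chiller reset temperature in DOWN


def go_down(write, servo_names):
    """Apply the DOWN resets through a caller-supplied write(name, value)."""
    # Turn the servos off first so nothing fights the resets below.
    for name in servo_names:
        write(name, 0)
    write("PZT_OFFSET", PZT_CENTER_V)
    write("CHILLER_SETPOINT", CHILLER_DEFAULT_C)


if __name__ == "__main__":
    settings = {}
    go_down(settings.__setitem__, ["PZT_SERVO_ON", "CHILLER_SERVO_ON"])
    print(settings)
```

Passing the write function in keeps the logic testable without a live EPICS connection.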

FIND_LOCK_POINT: Scans the laser's internal PZT while measuring the output power with the thermopile. The thermopile is a little slow, so the scan takes a few seconds. The state then looks at the slope of the resulting power-versus-PZT-voltage curve to decide whether there is a suitable lock point; it can lock on either a positive or a negative slope. If there is no good slope, it steps the chiller temperature by 0.03 °C (we may want to increase this), waits 4 minutes to allow for the significant thermal lag (this may be too short for the temperature to reach equilibrium), and then repeats the search. Finally, it sets a setpoint for the desired laser power and leaves the PZT at the voltage corresponding to that power.
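A minimal sketch of the search logic, assuming the "suitable place to lock" is simply the steepest point of the scan whose slope magnitude exceeds a threshold. That criterion, the `min_slope` threshold and the function names are assumptions; the 0.03 °C step and 240 s wait come from the description above. The scan, chiller step and wait are passed in as callables so the logic runs without hardware.

```python
def find_lock_point(pzt_volts, powers, min_slope):
    """Pick the steepest point of a PZT scan as the lock point (assumed criterion).

    Returns (pzt_voltage, power_setpoint, slope_sign), or None if no point
    has |dP/dV| >= min_slope.
    """
    best = None
    for i in range(1, len(pzt_volts) - 1):
        # Central-difference estimate of the slope dP/dV at scan point i.
        slope = (powers[i + 1] - powers[i - 1]) / (pzt_volts[i + 1] - pzt_volts[i - 1])
        if abs(slope) >= min_slope and (best is None or abs(slope) > abs(best[2])):
            best = (pzt_volts[i], powers[i], slope)
    if best is None:
        return None
    volts, power, slope = best
    return volts, power, 1 if slope > 0 else -1


def search_lock_point(scan, step_chiller, wait, min_slope, max_tries=10,
                      temp_step_c=0.03, settle_s=240):
    """Repeat the scan, stepping the chiller temperature after each failure."""
    for _ in range(max_tries):
        pzt_volts, powers = scan()
        result = find_lock_point(pzt_volts, powers, min_slope)
        if result is not None:
            return result
        step_chiller(temp_step_c)  # nudge the laser temperature
        wait(settle_s)             # allow for the thermal lag before rescanning
    return None


if __name__ == "__main__":
    scans = iter([
        ([0.0, 25.0, 50.0, 75.0], [2.0, 2.0, 2.0, 2.0]),  # flat: no lock point
        ([0.0, 25.0, 50.0, 75.0], [1.0, 2.0, 3.0, 3.0]),  # usable positive slope
    ])
    steps = []
    print(search_lock_point(lambda: next(scans), steps.append, lambda s: None,
                            min_slope=0.01), steps)
```

The returned slope sign matters later: the integrator in LOCK_LASER has to flip its sign when locking on a negative slope. Capping the retries with `max_tries` is also an addition, so the state cannot loop forever if the chiller walks out of range.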

LOCK_LASER: Engages an integrator between the thermopile readout and the PZT.

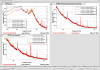
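As a rough illustration of the LOCK_LASER loop, here is a toy discrete-time integrator acting on a linear model of laser power versus PZT voltage. The linear plant, gain, time step and function name are all hypothetical; the point is that the integrator sign has to follow the slope sign found in FIND_LOCK_POINT so the feedback stays negative.

```python
def run_integrator_lock(plant, setpoint_w, offset_v, gain, dt, slope_sign, n_steps):
    """Toy integrator from thermopile power error to PZT voltage.

    plant(v) maps the total PZT voltage to laser power (W). slope_sign (+1/-1)
    is the sign of dP/dV at the lock point, so the loop always corrects
    toward the setpoint. Returns the final integrator output (V).
    """
    integ_v = 0.0
    for _ in range(n_steps):
        error_w = setpoint_w - plant(offset_v + integ_v)  # thermopile error
        integ_v += slope_sign * gain * dt * error_w       # integrate onto the PZT
    return integ_v


if __name__ == "__main__":
    # Hypothetical linear plants around 30 V: rising and falling slopes.
    rising = lambda v: 2.0 + 0.1 * (v - 30.0)
    falling = lambda v: 2.0 - 0.1 * (v - 30.0)
    for plant, sign in ((rising, 1), (falling, -1)):
        integ = run_integrator_lock(plant, 2.5, 30.0, 5.0, 0.1, sign, 500)
        print(sign, round(integ, 3), round(plant(30.0 + integ), 3))
```

With the wrong sign the same loop runs away from the setpoint instead of converging, which is why the slope sign is carried over from the scan.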

RESET_PZT_VOLTAGE: Slowly hands off the DC offset in the PZT voltage from the offset box to the integrator. The offset ends up at 37.5 V, the center of the PZT range, and the sum of this offset and the integrator output now gives the PZT voltage for the desired laser power.

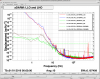
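The hand-off can be pictured as ramping the offset toward 37.5 V in small steps while the integrator, still closed around the thermopile, absorbs each step so the total PZT voltage (and hence the power) stays put. This is a sketch under assumptions: the linear plant, step size, gains and settle time are placeholders, and the real state may ramp differently.

```python
def ramp_toward(value, target, max_step):
    """Move value toward target by at most max_step; land exactly on target."""
    delta = target - value
    if abs(delta) <= max_step:
        return target
    return value + (max_step if delta > 0 else -max_step)


def hand_off_offset(plant, setpoint_w, offset_v, integ_v, gain, dt, slope_sign=1,
                    target_v=37.5, max_step_v=0.05, settle_steps=2000):
    """Ramp the offset to target_v while the integrator takes up the difference."""
    def integrate(integ, offset):
        # Same integrator law as the lock: correct the power error on the PZT.
        return integ + slope_sign * gain * dt * (setpoint_w - plant(offset + integ))

    while offset_v != target_v:
        offset_v = ramp_toward(offset_v, target_v, max_step_v)
        integ_v = integrate(integ_v, offset_v)
    for _ in range(settle_steps):  # let the integrator catch up after the ramp
        integ_v = integrate(integ_v, offset_v)
    return offset_v, integ_v


if __name__ == "__main__":
    plant = lambda v: 2.0 + 0.1 * (v - 30.0)  # hypothetical P(V), W
    # Start locked: offset 30 V plus integrator 5 V gives 35 V and 2.5 W.
    offset, integ = hand_off_offset(plant, 2.5, 30.0, 5.0, 5.0, 0.1)
    print(offset, round(integ, 3), round(offset + integ, 3))
```

Taking small steps keeps the integrator's tracking lag, and so the transient power error during the hand-off, small.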

ENGAGE_CHILLER_SERVO: The output voltage of the PZT integrator is used as the error signal for the chiller servo. The servo is engaged and its gains are set.
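One way to picture this offload is a very slow integrator that nudges the chiller setpoint in proportion to the PZT integrator voltage, so that over time the chiller takes over the correction and the PZT integrator relaxes back toward zero (PZT near the center of its range). The sign convention, gain, per-update step limit and temperature limits below are hypothetical placeholders, not values from the script.

```python
def chiller_servo_step(temp_setpoint_c, pzt_integ_v, gain_c_per_v_s, dt_s,
                       max_step_c=0.03, limits_c=(15.0, 25.0)):
    """One update of a slow integrator driving the chiller setpoint.

    The PZT integrator output is the error signal. Sign, gain, the per-update
    step limit and the temperature limits are placeholder assumptions.
    """
    step = gain_c_per_v_s * dt_s * pzt_integ_v
    step = max(-max_step_c, min(max_step_c, step))  # limit the change per update
    low, high = limits_c
    return max(low, min(high, temp_setpoint_c + step))  # keep within safe limits


if __name__ == "__main__":
    temp = 20.0
    for pzt_integ in (1.0, 1.0, 100.0, -100.0):  # example integrator readings, V
        temp = chiller_servo_step(temp, pzt_integ, 1e-3, 1.0)
        print(round(temp, 4))
```

Rate-limiting each update is a guard against a large PZT excursion (for example during a lock loss) slamming the chiller setpoint.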

ISS_ENGAGE: We don't need the intensity stabilization system (ISS) yet, so this state is simply bypassed.
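Since ISS_ENGAGE is bypassed, it can sit in the state sequence as a no-op that completes immediately. The sketch below writes the states described here as a hypothetical directed edge list (the real script's edges may differ) and finds the path from DOWN to LASER_UP with a breadth-first search; it is plain Python and does not use the Guardian API.

```python
from collections import deque

# Hypothetical edge list following the order the states are described in.
EDGES = [
    ("DOWN", "FIND_LOCK_POINT"),
    ("FIND_LOCK_POINT", "LOCK_LASER"),
    ("LOCK_LASER", "RESET_PZT_VOLTAGE"),
    ("RESET_PZT_VOLTAGE", "ENGAGE_CHILLER_SERVO"),
    ("ENGAGE_CHILLER_SERVO", "ISS_ENGAGE"),
    ("ISS_ENGAGE", "LASER_UP"),
]


def iss_engage():
    """ISS is not needed yet: do nothing and report the state as complete."""
    return True


def state_path(edges, start, goal):
    """Breadth-first search for the shortest path of states from start to goal."""
    graph = {}
    for a, b in edges:
        graph.setdefault(a, []).append(b)
    queue = deque([[start]])
    seen = {start}
    while queue:
        route = queue.popleft()
        if route[-1] == goal:
            return route
        for nxt in graph.get(route[-1], []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(route + [nxt])
    return None


if __name__ == "__main__":
    print(" -> ".join(state_path(EDGES, "DOWN", "LASER_UP")))
```

Keeping the bypassed state in the graph means the ISS can be filled in later without changing the rest of the sequence.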

LASER_UP: This state will monitor the system during operation, checking whether the laser output power goes outside set bounds or whether the laser PZT reaches the limits of its range; the latter would indicate that the servo has lost lock on the laser.
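A minimal sketch of the LASER_UP checks. The power bounds are whatever we choose; the 0 to 75 V PZT range is an assumption inferred from 37.5 V being quoted as the center, and the 2 V margin is a hypothetical choice so that the PZT is flagged slightly before it actually rails.

```python
def laser_up_faults(power_w, pzt_v, power_bounds_w,
                    pzt_range_v=(0.0, 75.0), margin_v=2.0):
    """Return a list of fault strings; an empty list means the laser looks healthy.

    pzt_range_v and margin_v are assumptions, not values from TCS_CO2.py.
    """
    faults = []
    low_w, high_w = power_bounds_w
    if not low_w <= power_w <= high_w:
        faults.append("laser power outside bounds")
    low_v, high_v = pzt_range_v
    if pzt_v <= low_v + margin_v or pzt_v >= high_v - margin_v:
        faults.append("PZT at end of range: servo has probably lost lock")
    return faults


if __name__ == "__main__":
    print(laser_up_faults(2.5, 37.5, (2.0, 3.0)))
    print(laser_up_faults(3.5, 74.0, (2.0, 3.0)))
```

Returning a list of faults rather than a single flag makes it easy to log which condition tripped before jumping back to DOWN.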

We still need to set up the gains on the integrator stages for the laser PZT and the chiller. The chiller loop is particularly awkward to monitor because its unity gain frequency is so low. The Guardian script is currently located in the TCS folder: /opt/rtcds/userapps/trunk/tcs/common/guardian/TCS_CO2.py
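To put a number on "so low": for an idealized loop whose open-loop gain is a pure integrator $K/s$, the unity gain frequency is $f_{\rm UGF} = K/2\pi$, and a step error decays as $e^{-Kt}$ with time constant $\tau = 1/(2\pi f_{\rm UGF})$. The 1 mHz figure below is purely illustrative, not a measured value, and the real chiller's thermal lag (the 4 minutes mentioned above) only makes things slower.

```python
import math


def integrator_ugf_hz(k_per_s):
    """Unity gain frequency of an open-loop gain K/s, in Hz."""
    return k_per_s / (2.0 * math.pi)


def closed_loop_time_constant_s(ugf_hz):
    """Time constant tau = 1/K of the first-order closed loop K/(s + K)."""
    return 1.0 / (2.0 * math.pi * ugf_hz)


def settling_time_s(ugf_hz, fraction=0.01):
    """Time for a step error to decay to `fraction` of its initial size."""
    return -math.log(fraction) * closed_loop_time_constant_s(ugf_hz)


if __name__ == "__main__":
    ugf = 1e-3  # illustrative 1 mHz unity gain frequency
    print(f"tau = {closed_loop_time_constant_s(ugf):.0f} s, "
          f"1% settling = {settling_time_s(ugf) / 60:.1f} min")
```

Even in this idealized case, each gain tweak needs on the order of ten minutes to see the loop settle, which is why tuning it interactively is slow.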

Tagging GRD