Today I performed several broadband noise injections to check how large the couplings of MICH, PRCL and SRCL noise to DARM are, and whether they are stationary.

In summary:

- MICH and PRCL couplings are stationary (spectrograms in fig. 1 and 2)

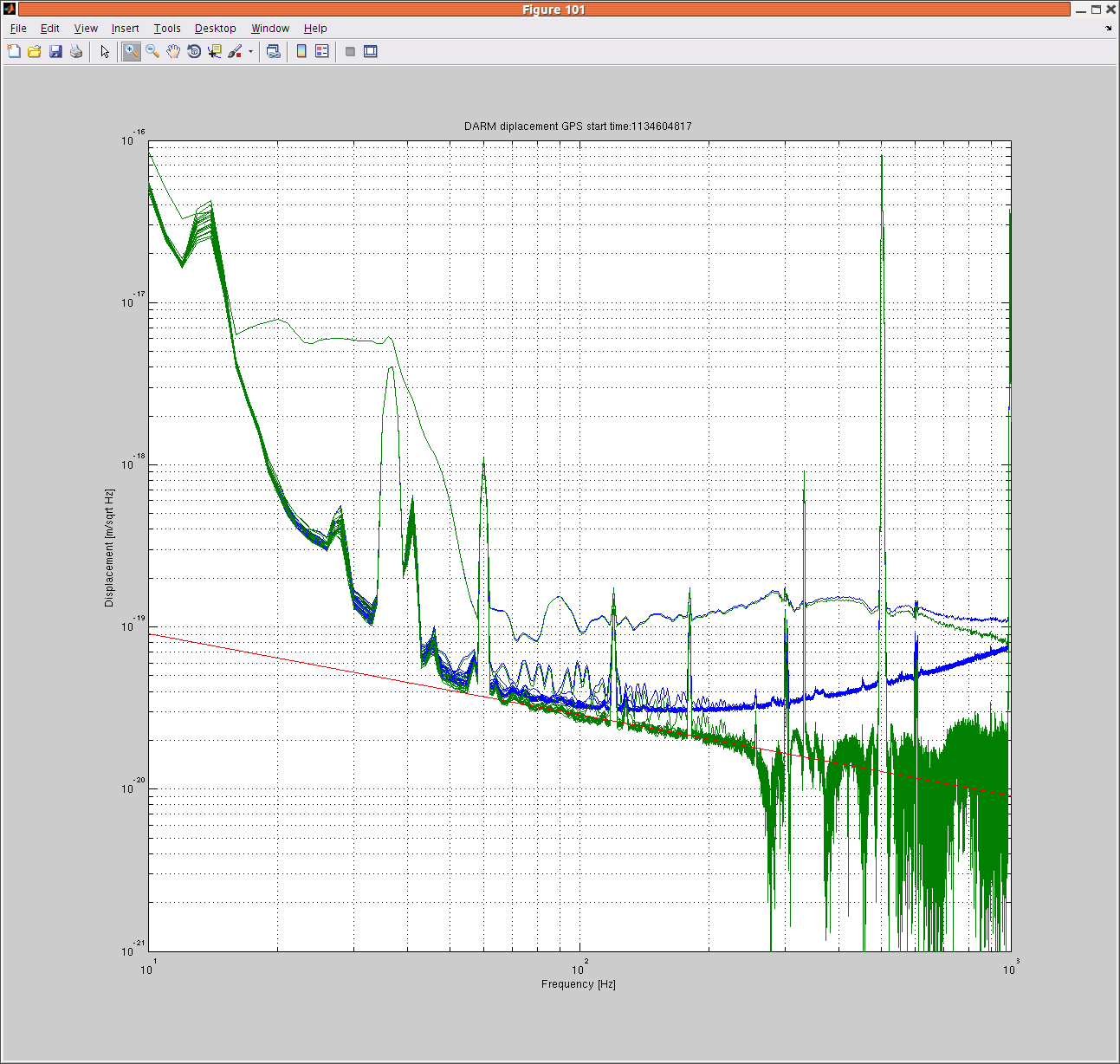

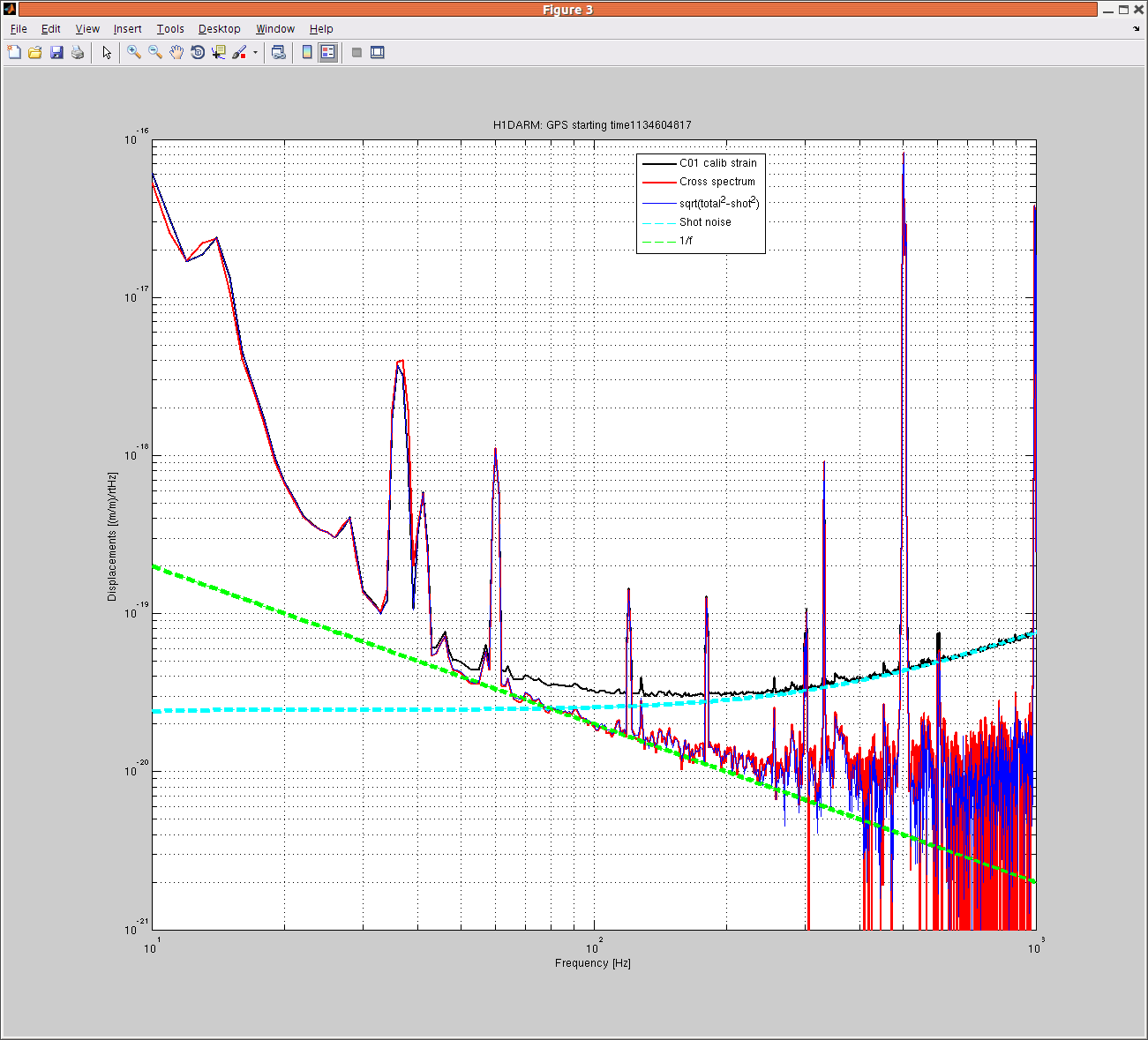

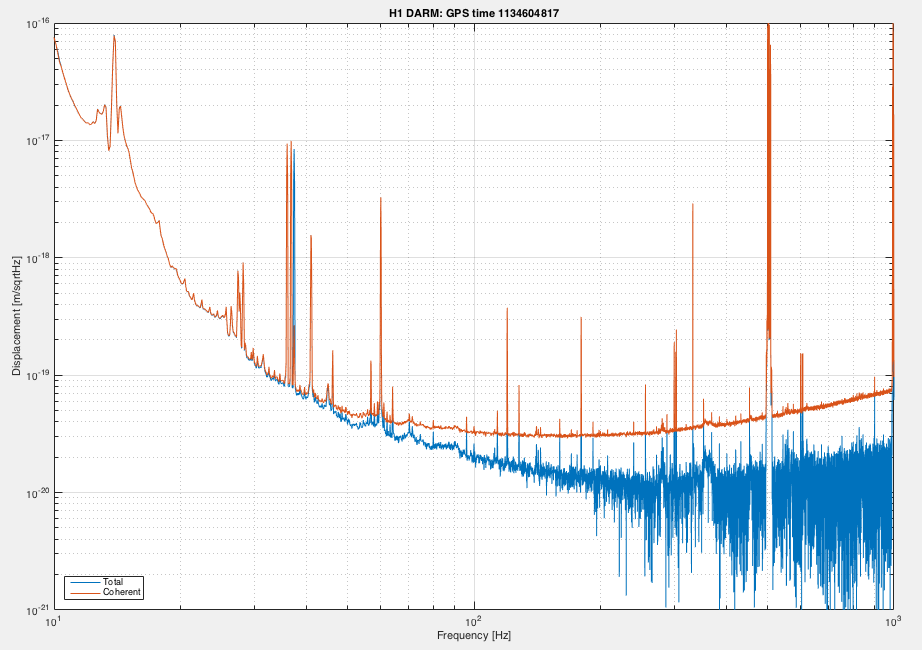

- MICH and PRCL both contribute significantly to DARM noise below ~20 Hz, probably through the same mechanism (the noise projections are shown in fig. 3 and 4)

- The SRCL coupling is non-stationary above ~70 Hz (fig. 5), but the SRCL noise projection is well below the DARM noise (fig. 6)

Some more details follow.

While injecting noise into an AUX channel, if the coherence with DARM is good, we can estimate the linear transfer function TF from AUX to DARM. We can then check whether the PSD of DARM during the noise injection matches the PSD of AUX times the squared magnitude of the transfer function: the two should be equal if the coupling is dominated by a linear term. If there is strong non-linearity or strong non-stationarity, the two can differ. This is the case for SRCL (fig. 6).
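The check described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the code used for the figures: the data are synthetic, the function names (`estimate_tf`, `project`) are hypothetical, and the transfer function is estimated on the first half of the injection and applied to the second half. That split is my assumption; if the estimate and the projection use the same stretch of data, the ratio of projected to measured PSD reduces to the coherence.

```python
import numpy as np
from scipy import signal

def estimate_tf(aux, darm, fs, nperseg=1024):
    """H1 estimate of the AUX -> DARM transfer function, plus coherence."""
    f, p_aux = signal.welch(aux, fs=fs, nperseg=nperseg)
    _, p_darm = signal.welch(darm, fs=fs, nperseg=nperseg)
    _, p_cross = signal.csd(aux, darm, fs=fs, nperseg=nperseg)
    tf = p_cross / p_aux
    coherence = np.abs(p_cross) ** 2 / (p_aux * p_darm)
    return f, tf, coherence

def project(aux, tf, fs, nperseg=1024):
    """Projected DARM PSD: |TF|^2 times the PSD of the AUX channel."""
    f, p_aux = signal.welch(aux, fs=fs, nperseg=nperseg)
    return f, np.abs(tf) ** 2 * p_aux

# Synthetic stand-in data (assumption): white-noise injection, a simple
# linear FIR coupling into "DARM", and a small uncorrelated background.
rng = np.random.default_rng(0)
fs = 256.0
n = int(fs) * 64
aux = rng.standard_normal(n)
darm = signal.lfilter([0.5, 0.3], [1.0], aux) + 0.01 * rng.standard_normal(n)

half = n // 2
f, tf, coherence = estimate_tf(aux[:half], darm[:half], fs)
_, projected = project(aux[half:], tf, fs)
_, p_darm_late = signal.welch(darm[half:], fs=fs, nperseg=1024)

# Close to 1 for a linear, stationary coupling; departures from 1 point
# to non-linearity or non-stationarity.
ratio = projected / p_darm_late
```

With real data, `aux` and `darm` would be the injected channel and DARM over the injection time, and the ratio would be inspected as a function of frequency rather than summarized by a single number.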

The plan for the next few days is to repeat the same kind of test with the ASC degrees of freedom.

As a side note, while injecting PRCL and SRCL I caused two lock losses. In both cases I'm quite confident that nothing was saturating. However, after the aborted SRCL injection, we relocked and found a mode at 41.02 Hz strongly excited (SNR ~ 1000). This mode is unidentified, but it seems to roughly match the roll mode of the triple suspensions.

For future reference, the logfile of the injections is attached.

Maybe the ring-up hints at the origin of one of the two lines near 41 Hz that we suspect is a roll mode of a triple suspension. This line is discussed in alog 21696 and its comments. We should check that the mode just below 41 Hz is not also rung up. I wasn't immediately able to find which times to compare - could someone post good times to look at?

The line at 40.X Hz was not excited by the injection. Only the line at 41.02 Hz was.

The line at 41.02 Hz was very large during the first lock right after GPS 1137193090 (Mon Jan 18 14:57:53 PST 2016).
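For anyone picking times to compare, a GPS time can be cross-checked against the wall-clock time with the standard library alone. The sketch below is a minimal example, assuming the GPS-UTC offset of 17 leap seconds that applied in January 2016; the function name `gps_to_utc` is hypothetical. It confirms that GPS 1137193090 corresponds to the local time quoted above.

```python
from datetime import datetime, timedelta, timezone

GPS_EPOCH = datetime(1980, 1, 6, tzinfo=timezone.utc)
LEAP_SECONDS = 17  # GPS-UTC offset in January 2016 (assumption for this date only)
PST = timezone(timedelta(hours=-8), "PST")

def gps_to_utc(gps_seconds, leap_seconds=LEAP_SECONDS):
    """Convert GPS seconds to a UTC datetime for a fixed leap-second offset."""
    return GPS_EPOCH + timedelta(seconds=gps_seconds - leap_seconds)

local = gps_to_utc(1137193090).astimezone(PST)
print(local.strftime("%a %b %d %H:%M:%S %Z %Y"))  # Mon Jan 18 14:57:53 PST 2016
```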