TITLE: 11/14 OWL Shift: 00:00-08:00UTC (16:00-00:00PDT), all times posted in UTC

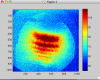

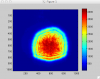

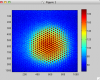

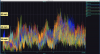

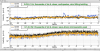

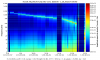

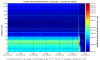

STATE of H1: High winds continue (now averaging about 30mph). LVEA useism is at 1.0um/s. For locking, we need winds below 20mph and useism down to 0.7um/s.
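As a rough illustration of the go/no-go criteria quoted above, here is a minimal sketch of the check. The function name and constants are hypothetical and are not part of any site tool. The thresholds are taken from the line above, reading "below 20mph" as strict and "down to 0.7um/s" as inclusive.

```python
# Hypothetical helper; the thresholds come from the STATE line above.
WIND_LIMIT_MPH = 20.0      # winds must be below this
USEISM_LIMIT_UM_S = 0.7    # useism must be at or below this


def can_attempt_lock(wind_mph: float, useism_um_s: float) -> bool:
    """Return True only when both wind and useism meet the stated limits."""
    return wind_mph < WIND_LIMIT_MPH and useism_um_s <= USEISM_LIMIT_UM_S


# Conditions reported at the time of this entry: ~30mph winds, 1.0um/s useism.
print(can_attempt_lock(30.0, 1.0))
```

With the values reported for this shift, the check fails on both counts.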

Incoming Operator: Cheryl (monitoring from home)

Support: Talked with Mike on the phone. Nutsinee was also here.

Quick Summary:

Shift was a wash, with no locking attempts at all due to high seismic activity, which has been going on for 24+ hrs! I have occupied an FOM screen (nuc0, and it can be viewed here) so Cheryl can monitor seismicity & ALS locking from home. If the winds calm down during her shift, she will come in.

Activities:

- Topped off Crystal Chiller

- 2:15 Nutsinee out of LVEA for HWS work

- Nutsinee noticed tripped TCS-X laser. Talking with Mike/Alastair about fix

- 2:20 Taking HAM2, HAM5, & HAM6 ISIs to ISOLATED now that Nutsinee is done. Mode Cleaner locked. Waiting for TCSX to get sorted before working on alignment.

- Went out on floor to bring back TCSX (see Nutsinee's alog).

- Guardian was giving low ISS diffracted power, so made an adjustment to REFSIGNAL

- Attempted Initial Alignment, but ALS locking was not possible due to seismic activity. ALS locks would last from seconds to a couple of minutes before dropping out.

Winds had died down, showing a max. of about 22mph on site, but are now climbing back up.

Current winds around the Hanford site mostly average around 14mph with max. winds of 20-26mph, except for one station reporting max. winds of 32mph, which is the highest I could find.

Y arm looks like it's been locked for an hour.

Since Y arm looks good and winds are still less than an hour ago, I'm heading to the site and will try to make progress on aligning and locking.