Summary: It looks like both the HAM2 STS and the ITMY STSs (STSA & B) have problems, in their Y and X dofs respectively. The HAM5 unit is the one solid sensor, but I wonder what it would look like if we moved it. Of course, Robert believes the ITMY location in the BierGarten is the ideal place, the one most free from tilt effects. The End sensors look okay, but this is not a great way to compare them; we need a good unit moved closer for comparison. We still have a unit at Quanterra. Further, I'll seek out Robert's review for better scrutiny.

More detail:

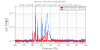

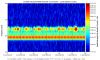

Looking at the upper left graph, the ASD of the Y dofs: HAM2 Y has elevated noise (WRT the other units) from 3 Hz down to 0.4 Hz. It is also noisier below 90 mHz. I don't think that is tilt; if it were, I'd expect the other units to suffer as well.
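To put a number on "elevated noise" in a band rather than reading it off the plot, something like the following could be used. This is only a sketch, not how the plots above were made: the helper name `band_asd_ratio`, the 16 Hz sample rate, and the synthetic white-noise data are all assumptions for illustration, and real STS time series would have to be fetched and passed in as arrays at a common sample rate.

```python
import numpy as np
from scipy import signal


def band_asd_ratio(x, ref, fs, f_lo, f_hi, nperseg):
    """Median ratio ASD(x) / ASD(ref) over the band [f_lo, f_hi] in Hz.

    Hypothetical helper: x and ref are equal-rate time series from the
    sensor under test and a reference sensor.
    """
    f, pxx = signal.welch(x, fs=fs, nperseg=nperseg)
    _, pref = signal.welch(ref, fs=fs, nperseg=nperseg)
    band = (f >= f_lo) & (f <= f_hi)
    # ASD is the square root of the PSD, so take the ratio of square roots.
    return float(np.median(np.sqrt(pxx[band] / pref[band])))


# Synthetic stand-in data only (assumed): white noise, one trace 3x louder.
rng = np.random.default_rng(0)
fs = 16.0          # assumed sample rate in Hz
n = 65536
ref = rng.standard_normal(n)
noisy = 3.0 * rng.standard_normal(n)

# 1024-sample segments at 16 Hz give 1/64 Hz resolution, enough for 0.4-3 Hz.
ratio = band_asd_ratio(noisy, ref, fs, f_lo=0.4, f_hi=3.0, nperseg=1024)
print(f"0.4-3 Hz ASD ratio: {ratio:.2f}")
```

For the sub-90 mHz region the segments would need to be much longer (several hundred seconds at least), which in turn needs a longer stretch of data to keep a reasonable number of averages.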

In the lower left graph, the Y dof coherences further suggest HAM2 is underperforming. The end station units' coherence gets above 0.8 below 100 mHz and thereafter follows the corresponding coherence between ITMY & HAM5. That seems reasonable.
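The coherence comparison can be reduced to a band-averaged number per sensor pair in the same spirit. Again a sketch under assumptions: `band_coherence` is a made-up helper, the data are synthetic, and `scipy.signal.coherence` returns the magnitude-squared coherence, which may not be the same convention as the plots above.

```python
import numpy as np
from scipy import signal


def band_coherence(x, y, fs, f_lo, f_hi, nperseg):
    """Mean magnitude-squared coherence of x and y over [f_lo, f_hi] in Hz.

    Hypothetical helper: x and y are equal-rate time series from two sensors.
    """
    f, cxy = signal.coherence(x, y, fs=fs, nperseg=nperseg)
    band = (f >= f_lo) & (f <= f_hi)
    return float(np.mean(cxy[band]))


# Synthetic stand-in data only (assumed): two healthy sensors see the same
# ground motion plus a little self-noise; a third sees only its own noise.
rng = np.random.default_rng(1)
fs = 16.0          # assumed sample rate in Hz
n = 65536
ground = rng.standard_normal(n)
sensor_a = ground + 0.1 * rng.standard_normal(n)
sensor_b = ground + 0.1 * rng.standard_normal(n)
sensor_bad = rng.standard_normal(n)

good = band_coherence(sensor_a, sensor_b, fs, 0.1, 1.0, 1024)
bad = band_coherence(sensor_a, sensor_bad, fs, 0.1, 1.0, 1024)
print(f"healthy pair: {good:.2f}, pair with a bad unit: {bad:.2f}")
```

A unit that drags down the coherence of every pair it appears in, while the remaining pair stays high, is the one to suspect.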

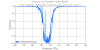

The middle column of plots, for the X dofs, suggests that the ITMY unit is not as healthy as the HAM2 or HAM5 units around 100 to 300 mHz. HAM2 looks much better for the X dof than for the Y dof. The Ends' X dof behaves much like the Y dof.

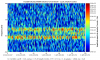

For the Z dof in the third column, nothing jumps out in the spectra, but the reduced coherence of the red & blue traces relative to the green trace suggests the ITMY Z is mildly ill. For the end stations in Z, it looks like tilt is plaguing things below 40 mHz, but this data is from 2 am this morning and was not subject to much wind, so I'm not sure what to suggest there.