Highly related: alog 21234

Conversion coefficients from IMC WFS signals to the equivalent beam rotation at the periscope were measured at around 20 Hz while the IFO was operating in low noise (see subentry for details):

| | PIT (nrad/ct) | YAW (nrad/ct) |
|---|---|---|
| IMCWFSA | 5.8 | 4.6 |
| IMCWFSB | 3.5 | 1.4 |

There could easily be 10 or 20% error but this cannot be off by an order of magnitude.
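
As a minimal sketch of how the table is applied, the snippet below scales raw WFS counts to nrad of equivalent beam rotation at the periscope. The function name and the dictionary layout are hypothetical, chosen only for illustration, and the scaling assumes a flat sensor response, so it is only as good as the 10 to 20% error quoted above.

```python
# Calibration from the table above: nrad of beam rotation at the
# periscope per WFS count, measured at around 20 Hz.
NRAD_PER_COUNT = {
    ("IMCWFSA", "PIT"): 5.8,
    ("IMCWFSA", "YAW"): 4.6,
    ("IMCWFSB", "PIT"): 3.5,
    ("IMCWFSB", "YAW"): 1.4,
}


def wfs_counts_to_nrad(counts, sensor, dof):
    """Scale a sequence of WFS counts to nrad (hypothetical helper).

    Assumes the WFS response is flat in frequency, so a single
    scalar coefficient per sensor and degree of freedom is enough.
    """
    coefficient = NRAD_PER_COUNT[(sensor, dof)]
    return [coefficient * c for c in counts]
```

For example, `wfs_counts_to_nrad(samples, "IMCWFSB", "PIT")` would give the WFSB PIT signal in nrad, where `samples` is whatever list of counts is at hand.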

Note that the PIT to YAW and YAW to PIT couplings in the sensing are not small at all. PIT to YAW sensing coupling is about 0.6 for WFSA and 0.9 for WFSB, while for YAW to PIT the numbers are 0.5 for WFSA and 0.4 for WFSB. (This is not surprising as the WFS quadrant gains are fishy: alog 20065.) If we need to evaluate PIT and YAW jitter separately, it's somewhat better to use WFSB for PIT and WFSA for YAW.

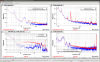

The attached shows WFSB PIT and WFSA YAW, already calibrated to radians using the above table (assuming that the WFS response as a sensor is flat), and the OMC DCPD in full lock. Two lines are injected into the Peri PZT, at 20 Hz for YAW and 22.1 Hz for PIT. The excitation voltage is the same for both, but because the peri mirror is tilted by 45 degrees in PIT, the beam deflection in YAW is a factor of sqrt(2) smaller than in PIT.

Taking this into account, PIT to DCPD coupling is a factor of 7.5 smaller than YAW coupling. Looking at the peaks, there are indeed peaks in WFSB PIT, most notably at 280 Hz, that are not present in DCPD even though their height is as large as that of the annoying twin peaks at 315 and 350 Hz; this is consistent with the smaller PIT coupling.
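
The sqrt(2) correction can be sketched as follows. This is only an illustration of the bookkeeping, assuming the coupling is taken as DCPD line height divided by beam deflection for equal drive voltage; it is not necessarily the exact procedure used for the number above. The function name and its arguments are hypothetical.

```python
import math


def yaw_over_pit_coupling(dcpd_yaw_peak, dcpd_pit_peak):
    """Ratio of YAW to PIT jitter coupling into DCPD (hypothetical helper).

    Assumes equal excitation voltage on both lines, so the YAW beam
    deflection is sqrt(2) smaller than the PIT deflection because the
    periscope mirror is tilted by 45 degrees in PIT.
    """
    pit_deflection = 1.0                    # arbitrary units
    yaw_deflection = 1.0 / math.sqrt(2.0)   # sqrt(2) smaller than PIT
    yaw_coupling = dcpd_yaw_peak / yaw_deflection
    pit_coupling = dcpd_pit_peak / pit_deflection
    return yaw_coupling / pit_coupling
```

Under this assumption, equal line heights in DCPD would already mean YAW couples sqrt(2) more strongly than PIT, and any measured height ratio is multiplied by the same factor.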

The attached also shows IMC trans SUM. The PIT peak (22.1 Hz) is much larger than the YAW peak (20 Hz) even if you take the factor of sqrt(2) into account. This is probably because the MC WFS offset for PIT is much larger than for YAW. Even then, the PIT coupling is smaller. This is one of several things showing that this cannot be a simple jitter-to-AM conversion by the MC.

Among many calculations that could be done, I'll probably convert the input beam jitter to the beam jitter on OMC.