[Sheila, Dan, Evan, Jenne, others]

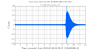

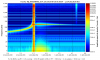

All afternoon (basically, since we started relocking after maintenance day) we have seen a 1 Hz comb (offset by 0.5 Hz) in the DARM spectrum, as well as in many, many other places. This seems like the same thing as the final bullet point that was reported in aLog 20790 a few days ago, but with much taller peaks.

Much of the day was spent looking into this, by several people. The lines are very clear in all of the length error signals, as well as the ASC error signals.

One thing that we tried, just to exonerate the new PRM coil driver, was to swap the PRC length actuation from the PRM to PR2, and then turn off the PRM M3 coil driver. As expected, PR2 affects the cavity length twice as much as the PRM, so we needed a gain of 0.5 in the M3 LOCK filter bank for PR2, rather than the 1 that PRM has. We were able to turn off the PRM M3 coil driver, but saw no change in the length signals' spectra. We put the actuation back on PRM, but when we tried to turn off the PR2 coil driver, PR2 tripped and we lost lock. So, the new PRM coil driver is certainly not to blame for this new noise. (PR2 was untripped, and its coil driver turned back on.) Also, we haven't seen the glitches due to the old PRM coil driver at all today, so hopefully they're gone for good, and not just hidden.

At Richard's suggestion, we tried restarting the models that were restarted earlier today in case it is some kind of synchronization problem. We restarted h1prm, h1pr2, h1lsc, h1asc. This didn't change anything - the lines are still there loud and clear after relocking.

The 1 Hz comb is still present, and we are running low on ideas for what it could be, and what to do about it. DetChar friends, and anyone with ideas, please let us know if you have a suggestion of where to look.
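For anyone checking other channels for the same comb, here is a minimal sketch of where the teeth should fall, assuming the comb is exactly 1 Hz spacing with a 0.5 Hz offset as described above. The function names and the `f_max_hz` cutoff are hypothetical helpers for illustration, not part of any existing tool.

```python
import math


def comb_frequencies(spacing_hz=1.0, offset_hz=0.5, f_max_hz=10.0):
    """List comb tooth frequencies offset + n * spacing, up to f_max_hz."""
    n_teeth = math.floor((f_max_hz - offset_hz) / spacing_hz) + 1
    return [offset_hz + spacing_hz * n for n in range(max(n_teeth, 0))]


def nearest_bins(freqs, teeth):
    """For each tooth, return the index of the closest bin in freqs."""
    return [
        min(range(len(freqs)), key=lambda i: abs(freqs[i] - f))
        for f in teeth
    ]


# Example: teeth below 5 Hz, matched to a spectrum with 0.25 Hz resolution.
teeth = comb_frequencies(f_max_hz=5.0)
spectrum_freqs = [0.25 * i for i in range(40)]
bins = nearest_bins(spectrum_freqs, teeth)
```

The bin indices can then be used to pull the peak heights out of a spectrum of any channel computed on the same frequency axis.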

--------------

I don't know if this has the same cause as the 1 Hz comb, but there are also some unusually large lines at ~838 Hz and ~855 Hz. These lines are a few Hz wide, and are coherent with magnetometer signals. But the magnetometers have seen these lines at the same (or larger) amplitude in the past, without the lines showing up in DARM. Why would the coupling from magnetic fields have changed so drastically?

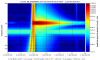

This is nice: it gives us a sense of how often the calculations for the handoff to DC readout fail. Answer: about once a month.

We should have the OMC guardian check that the calculated value for the OMC_READOUT_ERR gain is sensible before writing it to the EPICS channel.
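A rough sketch of what such a check could look like, not the actual guardian code. The nominal gain, the factor-of-two tolerance, and the `write` callable (standing in for whatever actually sets the EPICS channel) are all assumptions made for illustration.

```python
import math


def gain_is_sensible(gain, nominal, max_ratio=2.0):
    """True if gain is finite, nonzero, has the same sign as nominal,
    and lies within a factor of max_ratio of nominal (assumed tolerance)."""
    if not math.isfinite(gain) or gain == 0 or nominal == 0:
        return False
    ratio = gain / nominal  # negative if the signs differ
    return 1.0 / max_ratio <= ratio <= max_ratio


def write_gain_if_sensible(gain, nominal, write, max_ratio=2.0):
    """Call write(gain) only if the gain passes the check.
    Returns True if the value was written, False if it was rejected."""
    if gain_is_sensible(gain, nominal, max_ratio):
        write(gain)
        return True
    return False


# Example with a stand-in for the EPICS write.
written = []
ok = write_gain_if_sensible(1.2, 1.0, written.append)
rejected = write_gain_if_sensible(float("nan"), 1.0, written.append)
```

When the check fails, the guardian could keep the previous gain and flag the failure rather than proceeding with the handoff. What it should actually do in that case is a separate decision.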