J. Oberling, R. Short

Since we've convinced ourselves that the new glitching is coming from the recently-installed NPRO SN 1661, we are swapping it out for our final spare, SN 1639F. This is the NPRO originally installed with the aLIGO PSL back in the 2011/2012 timeframe; it was removed from operation in late 2017 (natural degradation of pump diodes) and sent back to Coherent for refurbishment, and has not been used outside of brief periods in the OSB Optics Lab since.

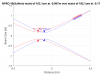

We first installed the power supply for this NPRO and tweaked the potentiometers on the remote board to make sure our readbacks in the PSL Beckhoff software were correct. We then swapped the NPRO laser head on the PSL table and got the new one in position. The injection current was set for ~1.8 W of output power; we need 1.945 A for ~1.805 W output from the NPRO. We tested the remote ON/OFF, which worked, and the remote noise eater ON/OFF, which also worked. We optimized the polarization cleanup optics (a QWP/HWP/PBSC triple combo for turning the NPRO's naturally slightly elliptically polarized beam into vertically polarized w.r.t. the PSL tabletop). The power was turned down and the beam was roughly aligned using our alignment irises (with the mode matching lenses removed). At this point we did a beam propagation measurement and Gaussian fit in prep for mode matching to Amp1. The results:

- Horizontal
  - Waist: 176.0 µm
  - Position: -124.3 mm
- Vertical
  - Waist: 182.0 µm
  - Position: -169.3 mm
- Average
  - Waist: 178.8 µm
  - Position: -147.2 mm
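As a quick sanity check on the fit results above, the sketch below computes the Rayleigh range for each axis and compares it with the separation between the horizontal and vertical waist positions. It assumes a wavelength of 1064 nm (not stated in this entry) and an ideal Gaussian beam (M² = 1); the function and variable names are illustrative only and are not part of any PSL tooling.

```python
import math

WAVELENGTH_M = 1064e-9  # assumption: Nd:YAG NPRO wavelength, not stated in the entry


def rayleigh_range_mm(waist_um, wavelength_m=WAVELENGTH_M):
    """Rayleigh range z_R = pi * w0^2 / lambda for an ideal Gaussian beam, in mm."""
    w0_m = waist_um * 1e-6
    return math.pi * w0_m ** 2 / wavelength_m * 1e3


# Fitted waists and positions from the propagation measurement above
fits = {
    "horizontal": {"waist_um": 176.0, "position_mm": -124.3},
    "vertical": {"waist_um": 182.0, "position_mm": -169.3},
}

for axis, fit in fits.items():
    z_r = rayleigh_range_mm(fit["waist_um"])
    print(f"{axis}: z_R = {z_r:.1f} mm")

# Distance between the two fitted waist positions (astigmatism)
waist_separation_mm = abs(
    fits["horizontal"]["position_mm"] - fits["vertical"]["position_mm"]
)
mean_z_r = sum(rayleigh_range_mm(f["waist_um"]) for f in fits.values()) / len(fits)
print(f"waist separation = {waist_separation_mm:.1f} mm "
      f"({waist_separation_mm / mean_z_r:.2f} Rayleigh ranges)")
```

Expressing the waist separation in units of the Rayleigh range gives a rough feel for how astigmatic the raw NPRO output is before the mode matching lenses.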

Using this we got a preliminary mode matching solution in JamMT, using the same lenses we used for NPRO SN 1661, and installed it. I managed to get a picture before JamMT crashed on us; see the first attachment. Before tweaking mode matching we checked our polarization into Faraday isolator FI01. We have ~1.602 W in transmission of FI01 with ~1.701 W input, a throughput of ~94.2%. We then proceeded with optimizing the mode matching solution. It took several iterations (7, to be exact), but we were finally able to get the beam waist and position correct for Amp1 (the target is a 165 µm waist 60 mm in front of Amp1, or 1794.2 mm from the NPRO):

- Horizontal
  - Waist: 166.0 µm
  - Position: 1789.0 mm
- Vertical
  - Waist: 163.0 µm
  - Position: 1800.0 mm
- Average
  - Waist: 164.6 µm
  - Position: 1794.5 mm
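To put a number on how close these results are to the target, the sketch below estimates the fundamental-mode power overlap between the measured beam and the 165 µm / 1794.2 mm target. It uses the standard coupling formula for two coaxial Gaussian beams, applied per axis, and assumes a 1064 nm wavelength, perfect alignment, and no higher-order mode content. This is an illustrative estimate, not the procedure we used on the table, and the function names are hypothetical.

```python
import math

WAVELENGTH_MM = 1064e-6  # assumption: 1064 nm expressed in mm


def axis_overlap(w_um, z_mm, w_target_um, z_target_mm, wavelength_mm=WAVELENGTH_MM):
    """Power overlap of two Gaussian beams along ONE transverse axis.

    The circular-beam coupling is
        4 / ((w1/w2 + w2/w1)^2 + (lambda * dz / (pi * w1 * w2))^2),
    and each transverse axis contributes the square root of that.
    """
    w1 = w_um * 1e-3          # measured waist, mm
    w2 = w_target_um * 1e-3   # target waist, mm
    dz = z_mm - z_target_mm   # waist position error, mm
    size_term = (w1 / w2 + w2 / w1) ** 2
    shift_term = (wavelength_mm * dz / (math.pi * w1 * w2)) ** 2
    return math.sqrt(4.0 / (size_term + shift_term))


TARGET_WAIST_UM = 165.0
TARGET_POSITION_MM = 1794.2

# Final fitted values from the list above
horizontal = axis_overlap(166.0, 1789.0, TARGET_WAIST_UM, TARGET_POSITION_MM)
vertical = axis_overlap(163.0, 1800.0, TARGET_WAIST_UM, TARGET_POSITION_MM)
total_overlap = horizontal * vertical

print(f"horizontal: {horizontal:.4f}")
print(f"vertical:   {vertical:.4f}")
print(f"total:      {total_overlap:.4f}")
```

Treating the two axes separately and multiplying the results accounts for the small residual astigmatism between the horizontal and vertical fits.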

To finish, we set up a temporary PBSC to check that the polarization going into Amp1 was vertical w.r.t. the PSL tabletop. We put a power meter in transmission of the temporary PBSC and adjusted WP02 to minimize the transmitted power; the lowest we could get was 0.41 mW (with a roughly 1.6 W beam) in the wrong polarization, which matches what we had during the most recent NPRO swap, so we were good to go here. We forgot to measure the final lens positions before leaving the enclosure; we will do that first thing tomorrow morning. The new NPRO is now set, aligned, and mode matched to Amp1, and we will continue with amplifier recovery tomorrow.
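For reference, the two power ratios quoted in this entry (FI01 throughput and the wrong-polarization fraction at the temporary PBSC) can be reproduced with the short sketch below. It uses only the approximate powers reported above, so the results carry the same "roughly" caveat; the helper name is illustrative.

```python
import math


def ratio_db(p_part_w, p_total_w):
    """Power ratio expressed in dB (negative when p_part_w < p_total_w)."""
    return 10.0 * math.log10(p_part_w / p_total_w)


# Faraday isolator FI01: ~1.602 W transmitted for ~1.701 W input
fi01_throughput = 1.602 / 1.701

# Temporary PBSC before Amp1: 0.41 mW in the wrong polarization, ~1.6 W beam
wrong_pol_fraction = 0.41e-3 / 1.6
wrong_pol_db = ratio_db(0.41e-3, 1.6)

print(f"FI01 throughput: {fi01_throughput * 100:.1f} %")
print(f"wrong-polarization fraction: {wrong_pol_fraction:.2e} ({wrong_pol_db:.1f} dB)")
```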

We left the NPRO running overnight with the enclosure in Science mode; the first shutter (between the NPRO and Amp1) is closed.