I installed the changes to the HWSY optical layout per T1400686. To do this, I:

- removed the original AirLens from the HWSY layout

- installed the PLCX-50.8-772.6-UV lens on the HWS periscope approximately 330 mm from the upper periscope mirror (UPM)

- realigned the 830 nm probe beam through the irises on the HWS table and onto the lower periscope mirror (LPM). I adjusted the position of the main HWSY BS to move the probe beam away from the edge of this optic and closer to its center.

- checked that the beam wasn't clipping on any of the optics on the table.

- adjusted the UPM and LPM to align the leakage ALS-Y (green) beam through the irises

- adjusted the final mirror before the HWS camera to center the green beam onto the camera.

- set the probe beam to 12,000 counts (via H1:TCS-ITMY_HWS_SLEDSETCURRENT). The measured SLED PD voltage was 2.7 V (roughly 40% of maximum).

- set the HWS camera frame rate to 1 frame per second. I used the `wus` command on the HWS camera to make this the default frame rate whenever the camera powers on.

- on H1HWSMSR, I ran the `stream_image_Y` command in `/opt/Hartmann_Sensor_SVN/release/bin/stream_image_Y/distrib/` to view the camera image in real time. I observed a return beam from the ITMY region that appeared and disappeared as I turned the HWS SLED on and off.
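The SLED on/off check above was done by eye; it could also be made quantitative by differencing frames. A minimal sketch, assuming two camera frames (one with the SLED on, one with it off) have already been read into 2D lists of pixel counts; how frames are exported from the HWS software is not covered here, and the function names are hypothetical. Subtracting the SLED-off frame removes light that does not depend on the SLED, and the centroid of what remains locates the return beam on the sensor.

```python
def difference_frame(on, off):
    """Pixel-wise (SLED on) minus (SLED off), clipped at zero so that
    background noise cannot contribute negative weights."""
    return [[max(a - b, 0) for a, b in zip(row_on, row_off)]
            for row_on, row_off in zip(on, off)]


def centroid(frame):
    """Intensity-weighted (row, column) centroid, or None if the frame is empty."""
    total = sum(sum(row) for row in frame)
    if total == 0:
        return None
    r = sum(i * v for i, row in enumerate(frame) for v in row) / total
    c = sum(j * v for row in frame for j, v in enumerate(row)) / total
    return r, c


# Hypothetical 3x3 frames: a uniform background plus a spot in the middle.
on = [[10, 10, 10], [10, 50, 10], [10, 10, 10]]
off = [[10, 10, 10], [10, 10, 10], [10, 10, 10]]
diff = difference_frame(on, off)
print(diff, centroid(diff))
```

Clipping at zero is a design choice for noisy real frames; without it, pixels that happen to be brighter in the SLED-off frame would pull the centroid away from the return beam.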

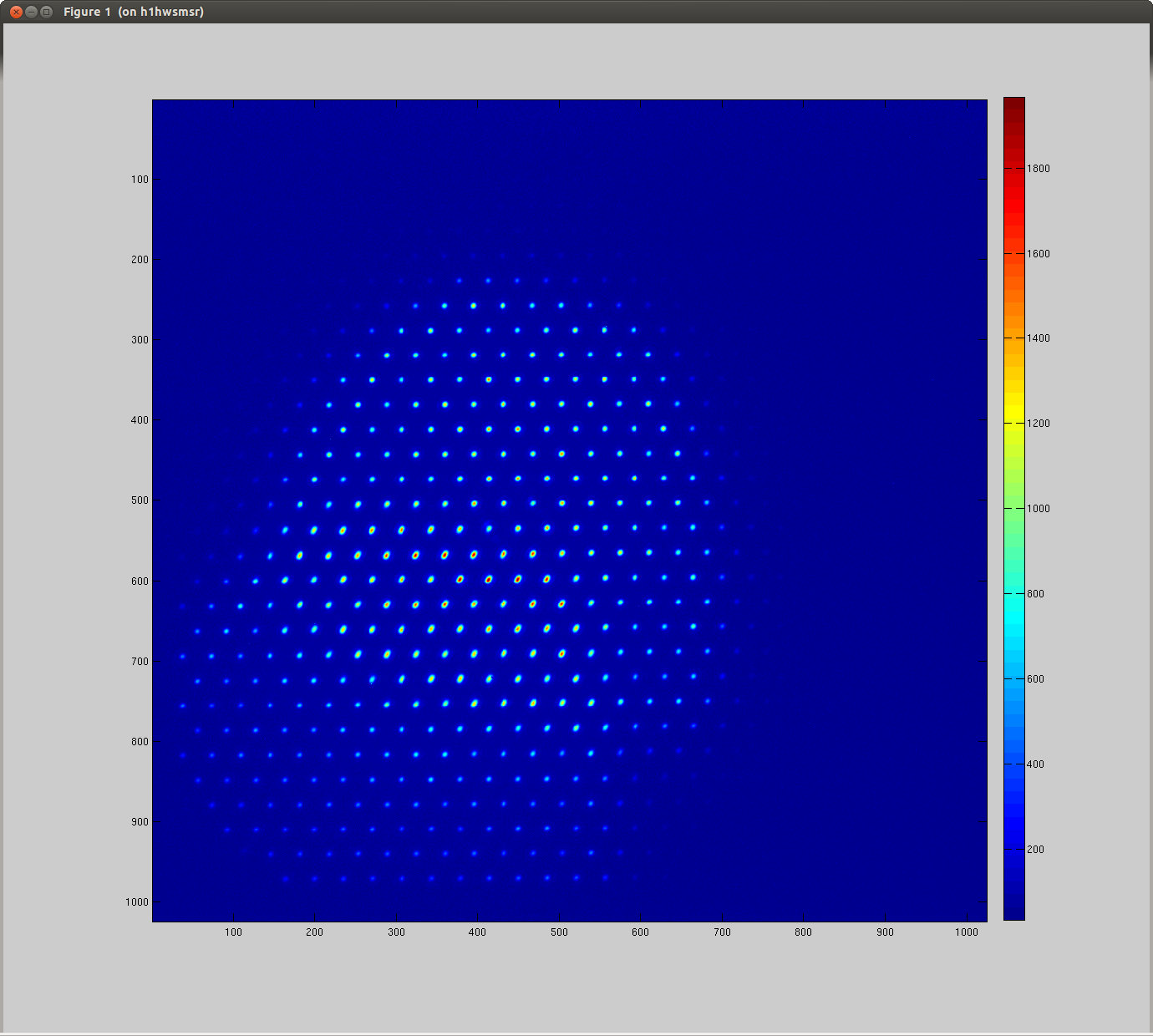

The return beam is shown below. I still need to move the HWS to the conjugate plane of ITMY and determine the magnification between those planes.
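Locating a conjugate plane and its magnification can be framed with ray-transfer (ABCD) matrices: a plane is conjugate to the object plane when the B element of the system matrix is zero, and the A element is then the magnification. The sketch below assumes a single thin lens; the focal length and distances are hypothetical placeholders, not values measured on the HWSY path, which contains more optics (these could be added to the element list).

```python
def matmul(m, n):
    """Product m @ n of two 2x2 ABCD matrices stored as nested tuples."""
    (a, b), (c, d) = m
    (p, q), (r, s) = n
    return ((a * p + b * r, a * q + b * s),
            (c * p + d * r, c * q + d * s))


def free_space(d):
    """Propagation over a distance d (one length unit used throughout)."""
    return ((1.0, d), (0.0, 1.0))


def thin_lens(f):
    """Thin lens of focal length f."""
    return ((1.0, 0.0), (-1.0 / f, 1.0))


def system(elements):
    """Compose elements listed in the order the beam meets them."""
    total = ((1.0, 0.0), (0.0, 1.0))
    for m in elements:
        total = matmul(m, total)
    return total


def image_distance(u, f):
    """Single thin lens: distance after the lens at which B == 0,
    i.e. the thin-lens equation 1/u + 1/v = 1/f solved for v."""
    return u * f / (u - f)


# Hypothetical example: object plane 3000 mm before a 1000 mm focal-length lens.
u, f = 3000.0, 1000.0
v = image_distance(u, f)
(A, B), (C, D) = system([free_space(u), thin_lens(f), free_space(v)])
print(f"conjugate plane {v:.1f} mm after the lens, magnification {A:.3f}")
```

For a multi-element path, one would instead scan the final free-space distance (or solve B = 0 for it) with all elements in the list; the single-lens closed form here is only a check on the matrix approach.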