Reran all O4b locklosses through the locklost tool with the most up-to-date code, which includes the "IMC" tag.

The lockloss tool tags a lockloss "IMC" if the IMC loses lock within 50 ms of the AS_A channel seeing the lockloss (example plots in 80561).
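The tag criterion can be sketched as a simple time-window check. This is a minimal illustration only, not the locklost tool's code. It assumes the lockloss times seen by AS_A and by the IMC have already been extracted (in seconds). It also assumes "within 50 ms" means either side of the AS_A time. The function name `is_imc_tagged` is hypothetical.

```python
# Hypothetical sketch of the "IMC" tag criterion; not the locklost tool's actual API.
# Assumes lockloss times (seconds) were already extracted for AS_A and the IMC,
# and that "within 50 ms" is a symmetric window around the AS_A lockloss time.

IMC_WINDOW_S = 0.050  # 50 ms window from the tag definition


def is_imc_tagged(t_as_a: float, t_imc: float, window: float = IMC_WINDOW_S) -> bool:
    """Return True if the IMC lost lock within `window` seconds of AS_A."""
    return abs(t_imc - t_as_a) <= window


# Typical lockloss: IMC drops ~250 ms after the IFO, so it is not tagged.
print(is_imc_tagged(0.0, 0.250))
# IMC drops ~20 ms after AS_A, so it would be tagged "IMC".
print(is_imc_tagged(0.0, 0.020))
```

Under these assumptions, the typical lockloss (IMC ~250 ms late) falls well outside the window. The locklosses discussed here fall inside it.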

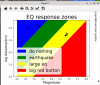

Note that we don't know whether the IMC is causing these locklosses, but we are sure they are different from the typical lockloss, where the IMC loses lock ~250 ms after the IFO (as seen at the AS port).

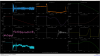

Vicky, Sheila and I did some trending this morning but reached no conclusion about what is causing these locklosses. We checked that the fast shutter itself isn't causing the lockloss (using the HAM6 SEI GS13s). It seems that in the milliseconds around the lockloss, the AS power decreases and the excess power goes to the REFL port.

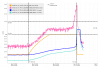

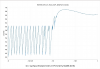

I used the lockloss tool data from the emergency OFI vent (back online ~23rd August) until today and did some Excel wizardry (attached) to make the two attached plots. They show the number of locklosses per day with and without the IMC tag. I made these plots both for locklosses from Observing only and for all NLN locklosses (which can include commissioning/maintenance times). Notable events during this period:

- No IMC-tagged locklosses from August to September 13th (Iain checked the period before August, G2401576)

- September 12th: IMC gain redistribution 79998

- October 9th: IMC gain redistribution reverted 80566

- October 15th: NPRO current increased 80687

- October 18th: NPRO current reverted 80746
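The per-day counts in the plots were made in Excel. The sketch below shows one way the same tally could be done in Python. It is illustrative only and assumes a hypothetical record format of (UTC time string, has IMC tag, lost lock from Observing). The example records are made-up placeholders, not real lockloss data. The `observing_only` flag mirrors the two versions of the plot (Observing only vs. all NLN locklosses).

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical records: (UTC time string, has IMC tag, lost lock from Observing).
# These values are made up for illustration; they are not real lockloss data.
locklosses = [
    ("2024-10-10 03:12:45", True, True),
    ("2024-10-10 17:40:02", False, True),
    ("2024-10-11 08:05:19", True, False),
]


def daily_counts(records, observing_only=False):
    """Count locklosses per UTC day, split into IMC-tagged and untagged."""
    counts = defaultdict(lambda: {"IMC": 0, "no IMC": 0})
    for time_str, imc_tagged, from_observing in records:
        # Skip commissioning/maintenance locklosses when only Observing is wanted.
        if observing_only and not from_observing:
            continue
        day = datetime.strptime(time_str, "%Y-%m-%d %H:%M:%S").date()
        counts[day]["IMC" if imc_tagged else "no IMC"] += 1
    # Return days in chronological order, ready for plotting.
    return dict(sorted(counts.items()))


for day, c in daily_counts(locklosses).items():
    print(day, c)
```

Days with no locklosses simply don't appear in the result. A real plot would probably want to fill those in with zeros.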

Lockloss at 10:07 UTC from LOWNOISE_ESD_ETMX; it doesn't look like an IMC lockloss.

11:03 observing