Summary:

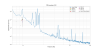

Attached shows a lockloss at around 11:31 PST today (tGPS~1415043099). It seems that the fast shutter (FS), after closing, bounced back down and momentarily unblocked the AS beam at around the time the power peaked.

For this specific lock loss, the energy deposited into HAM6 was about 17J, and the energy that got past the fast shutter is estimated to be ~12J because of the bouncing.

The bouncing motion has been known to exist for some time (e.g. alogs 79104 and 79397; the latter has an in-air slow-motion video of the bouncing), and it seems as if the self-damping is not working. Could this be an electronics issue, a mechanical adjustment issue, or something else?

Also, if we ever open HAM6 again (before this fast shutter is decommissioned), it might be a good idea to raise the shutter unit (shim?) so that the beam is still blocked when the mirror reaches its lowest position while bouncing up and down.

Details:

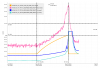

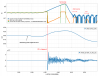

The top panel shows the newly installed lockloss power monitor (blue) and ASC-AS_A_DC_NSUM (orange), which monitors the power downstream of the fast shutter.

The shutter was triggered at around t~0.36, when the power was ~3W, and the ASC-AS_C level immediately dropped by a factor of ~1E4 (the FS mirror spec is T<1000ppm; in reality it seems to be ~100ppm).
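A back-of-the-envelope version of the transmission estimate, assuming the power incident on the FS stayed roughly constant across the closing transient and that AS_C responds linearly over this range (neither is checked here):

$$
T \approx \frac{P_{\mathrm{AS\_C,\,closed}}}{P_{\mathrm{AS\_C,\,open}}} \approx \frac{1}{10^{4}} = 10^{-4} = 100\,\mathrm{ppm} \quad (\text{spec: } T < 1000\,\mathrm{ppm})
$$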

However, ~50ms later, at t~0.41 or so, the shutter bounced back down and stayed open for about 15ms. Unfortunately, this roughly coincided with the time when the power coming into HAM6 reached its maximum of ~760W.
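As a rough sanity check (a rectangular approximation rather than an integral of the trace), taking the full ~760W peak for the whole ~15ms open window bounds the energy that got through during the first bounce:

$$
E_{\mathrm{bounce}} \lesssim P_{\mathrm{peak}}\,\Delta t_{\mathrm{open}} \approx 760\,\mathrm{W} \times 15\,\mathrm{ms} \approx 11.4\,\mathrm{J}
$$

This is comparable to the ~12J quoted in the summary, keeping in mind that both the peak power and the open time are only read off the plot approximately.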

Green is a rough projection of the power that went to the OMC (i.e. what AS_A_DC_NSUM would have looked like if it hadn't railed). It was made by simply multiplying the power monitor by AS_A_DC_NSUM>0.1 (1 if true, 0 if false), ignoring the 2nd, 3rd and 4th bounces.
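The gating described above can be sketched as follows. This is a hypothetical illustration, not the script used to make the plot: the function name and sample values are made up, the 0.1 threshold is taken from the text, and both channels are assumed to be plain lists already resampled onto the same time grid. To mimic ignoring the later bounces, one would pass in only the time window containing the first bounce.

```python
# Hypothetical sketch of the "green trace": gate the HAM6 lockloss power
# monitor by AS_A_DC_NSUM > threshold (1 if true, 0 if false).
# Inputs are plain lists on a shared time grid; sample data are made up.


def project_power_past_shutter(ham6_power_w, as_a_nsum, threshold=0.1):
    """Return the HAM6 power where AS_A_DC_NSUM > threshold, else 0.

    A sample above threshold is treated as "shutter open" and passes the
    full HAM6 power; otherwise it is set to 0, so the ~100 ppm leakage
    through the closed shutter is ignored.
    """
    if len(ham6_power_w) != len(as_a_nsum):
        raise ValueError("both channels must share the same time grid")
    return [p if a > threshold else 0.0 for p, a in zip(ham6_power_w, as_a_nsum)]


if __name__ == "__main__":
    # Made-up samples: open, closed, bounced open at the peak, closed again.
    ham6 = [3.0, 50.0, 760.0, 400.0]
    nsum = [5.0, 0.001, 5.0, 0.001]
    print(project_power_past_shutter(ham6, nsum))  # [3.0, 0.0, 760.0, 0.0]
```

Because AS_A_DC_NSUM rails whenever the beam gets through, its value carries no power information here; it is used only as an on/off indicator of the shutter state.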

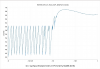

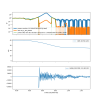

All in all, for this specific lock loss, the energy coming into HAM6 was 16~17J, and the energy that got past the FS was about 11~12J because of the timing of the bounce relative to the power peak. The OMC seems to be protected by the PZT though; see the 2nd attachment, which covers a wider time range.
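The energy figures come from integrating power over time. A minimal sketch of that bookkeeping, assuming uniformly sampled traces and the trapezoid rule (the integration method actually used here isn't stated, and the function names, sample values and sample interval are hypothetical):

```python
# Hypothetical sketch of the energy bookkeeping: integrate the HAM6 power
# monitor and the gated ("past the FS") projection over the same window
# with the trapezoid rule. All numbers below are illustrative only.


def trapezoid_energy_j(power_w, dt_s):
    """Integrate a uniformly sampled power trace (W) into energy (J)."""
    if len(power_w) < 2:
        return 0.0
    # dt * (p0/2 + p1 + ... + p_{n-2} + p_{n-1}/2)
    return dt_s * (sum(power_w) - 0.5 * (power_w[0] + power_w[-1]))


def energy_budget(ham6_power_w, projected_power_w, dt_s):
    """Return (energy into HAM6 [J], energy past the FS [J], fraction past)."""
    e_in = trapezoid_energy_j(ham6_power_w, dt_s)
    e_past = trapezoid_energy_j(projected_power_w, dt_s)
    fraction = e_past / e_in if e_in > 0 else 0.0
    return e_in, e_past, fraction


if __name__ == "__main__":
    dt = 1e-3  # 1 ms per sample (illustrative only)
    ham6 = [0.0, 100.0, 200.0, 100.0, 0.0]
    projected = [0.0, 0.0, 200.0, 100.0, 0.0]
    e_in, e_past, frac = energy_budget(ham6, projected, dt)
    print(f"in: {e_in:.2f} J, past FS: {e_past:.2f} J, fraction: {frac:.2f}")
    # prints "in: 0.40 J, past FS: 0.30 J, fraction: 0.75"
```

With the real traces, the fraction would come out around 11~12J out of 16~17J, i.e. roughly 70%, which is why the timing of the bounce matters so much.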

The time scale of the lock loss spike itself doesn't seem that different from the L1 lock loss in LLO alog 73514, where the power coming into HAM6 peaked tens of ms after the AS_A/B/C power had appreciably increased.

The OMC DCPDs might be OK, since they didn't record an abnormally high current (though I have to say IN1 should have been railing constantly once we started losing lock, which makes the assessment difficult), and since we've been running with the bouncy FS and the DCPDs have been fine so far. Nevertheless, we need to study this more.