Because we moved the OPO crystal position yesterday (8045), the crystal absorption will be changing for a few weeks, and we will need to adjust the temperature setting for the OPO until it settles, as Tony and I did last night (80455). Here are two sets of instructions: one that can be used while in observing, and one that can be done when we lose lock or are out of observing for some other reason.

For these first few days, please follow the out of observing instructions when relocking, if this hasn't been done in the last few hours, and please try to do it in the last few hours of the evening shift so that the temperature is close to well tuned at the start of the owl shift.

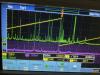

Out of observing instructions (to be done while recovering from a lockloss, can be done while ISC_LOCK is locking the IFO) (screenshot) :

- Make sure that ISC_LOCK will not set the SQZ_MANAGER request to INJECT_SQZ, by setting the ISC_LOCK request to ADS_TO_CAMERAS or any lower state.

- From sitemap, SQZ_OVERVIEW, set the SQZ_MANAGER request to LOCKED_SEED_NLG.

- From the purple !SQZ scopes button, open the NLG scope.

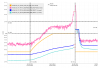

- Once SQZ_MANAGER is in LOCKED_SEED_NLG, you should see that the red OPO_IR_TRANS trace on the scope is oscillating. Adjust the OPO temperature using the slider right under the purple SQZ scopes button to maximize the peak of the oscillations.

- Set the SQZ_MANAGER request back to FDS_READY_IFO, and once that's ready you can set the ISC_LOCK request to nominal low noise.
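The temperature step above is a one-dimensional peak search, and the logic can be sketched in Python as below. This is only an illustration of the idea, not a script that exists on site: `tune_to_maximum`, `FakeOPO`, the Gaussian response, and all temperature values are made up for the example, and on the real system the adjustment is done by hand with the slider while watching the scope.

```python
import math


def tune_to_maximum(read_signal, set_value, start, step, min_step=0.001, max_moves=200):
    """Step a setpoint uphill until neither direction improves the readback.

    read_signal and set_value are hypothetical callables standing in for
    "read the peak height off the scope" and "move the temperature slider".
    """
    best_x = start
    set_value(best_x)
    best_y = read_signal()
    moves = 0
    while step >= min_step and moves < max_moves:
        improved = False
        for direction in (+1, -1):
            trial = best_x + direction * step
            set_value(trial)
            moves += 1
            y = read_signal()
            if y > best_y:
                # Keep the better setting and try again from there.
                best_x, best_y = trial, y
                improved = True
                break
        if not improved:
            # Neither neighbour is better: refine with a smaller step.
            step /= 2.0
    set_value(best_x)  # leave the setpoint at the best value found
    return best_x, best_y


class FakeOPO:
    """Stand-in for the real system: peak height vs temperature is a Gaussian.

    The optimum, width and starting temperature are invented numbers.
    """

    def __init__(self, optimum=31.50, width=0.05):
        self.optimum = optimum
        self.width = width
        self.temperature = 31.40

    def set_temperature(self, value):
        self.temperature = value

    def peak_height(self):
        d = (self.temperature - self.optimum) / self.width
        return math.exp(-0.5 * d * d)


opo = FakeOPO()
best_t, best_peak = tune_to_maximum(
    opo.peak_height, opo.set_temperature, start=opo.temperature, step=0.02
)
```

Halving the step when neither direction helps mirrors what an operator does by eye: coarse moves first, then finer ones near the top of the peak.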

Before going to observing, you can adjust the squeezing angle by requesting SQZ_MANAGER to SCAN_SQZANG. It is best practice to do this after the IFO has thermalized, but it can be done early in the lock. If lock stretches are long and this has been done only early in the lock, it may need adjusting for a thermalized IFO. Right now the squeezing angle has a strong frequency dependence early in the lock stretch, so let's not adjust it until the IFO has been locked for at least an hour.

In observing instructions:

Both the OPO temperature and the SQZ phase are ignored in SDF and can be adjusted while we are in observing, but it's important to log the times of any adjustments made.

- To adjust the temperature in full lock, go to the purple SQZ_SCOPE button and choose the OPO temp scope, then adjust the OPO temperature to maximize CLF_REFL_RF6_ABS (the green trace).

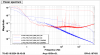

- After doing this it might be necessary to adjust the SQZ PHASE, which can be adjusted in steps of 5 degrees or so. Set this to maximize the squeezing level, which you can do by watching the DARM FDS dtt template. It is available from the SQZ DTT purple button, or on NUC33. It is easiest to adjust this for high frequency squeezing (around 1 kHz); this should get you close to good squeezing at 100 Hz.
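Since the times of any in-observing adjustments need to be logged, here is a minimal sketch of a helper that records each change with a UTC timestamp so it can be pasted into a log entry afterwards. The class name `AdjustmentLog`, the setting labels, the numbers, and the date are all hypothetical; nothing here talks to the real channels.

```python
from datetime import datetime, timezone


class AdjustmentLog:
    """Collect (time, setting, old value, new value) records for a log entry."""

    def __init__(self):
        self.entries = []

    def record(self, setting, old, new, when=None):
        # Default to the current UTC time if no time is supplied.
        if when is None:
            when = datetime.now(timezone.utc)
        self.entries.append((when, setting, old, new))

    def as_text(self):
        lines = []
        for when, setting, old, new in self.entries:
            lines.append(f"{when:%Y-%m-%d %H:%M:%S} UTC  {setting}: {old} -> {new}")
        return "\n".join(lines)


# Example with invented values and an invented date.
log = AdjustmentLog()
log.record("OPO temperature", 31.48, 31.49,
           when=datetime(2024, 1, 1, 3, 15, 0, tzinfo=timezone.utc))
log.record("SQZ phase (deg)", 150.0, 155.0,
           when=datetime(2024, 1, 1, 3, 22, 30, tzinfo=timezone.utc))
report = log.as_text()
print(report)
```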

Changes made to make this easier (Thanks Corey for beta testing the instructions):

- I've commented out the request to SQZ_MANAGER that ISC_LOCK makes in low noise length control, because that took control of the squeezer from Corey when he was giving these instructions a trial.

- The OPO temp is now not monitored in SDF.

- I removed the goto=True from the LOCK_FSS state. This will force SQZ_MANAGER to go through DOWN after the NLG measurement (and turn off the seed PZT ramp).
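To illustrate why removing goto=True changes the path, here is a toy model in Python. It assumes that a goto state can be jumped to directly from any other state, and it uses a plain breadth-first search on an invented graph. The edges below are hypothetical, not the real SQZ_MANAGER graph, and the actual path selection may differ.

```python
from collections import deque


def shortest_path(edges, goto_states, start, target):
    """Breadth-first search where goto states are reachable from every state."""

    def neighbours(state):
        out = list(edges.get(state, []))
        # Jump edges: any goto state can be entered from anywhere.
        out += [g for g in sorted(goto_states) if g != state and g not in out]
        return out

    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == target:
            return path
        for nxt in neighbours(path[-1]):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None


# Hypothetical edges, for illustration only.
EDGES = {
    "DOWN": ["LOCK_FSS"],
    "LOCK_FSS": ["LOCKED_SEED_NLG", "FDS_READY_IFO"],
}

# With LOCK_FSS as a goto state, the manager can jump straight to it.
before = shortest_path(EDGES, {"DOWN", "LOCK_FSS"}, "LOCKED_SEED_NLG", "FDS_READY_IFO")
# Without it, the only jump available is to DOWN.
after = shortest_path(EDGES, {"DOWN"}, "LOCKED_SEED_NLG", "FDS_READY_IFO")
print(before)
print(after)
```

In this toy graph the second path passes through DOWN, which is the behavior the change is meant to force.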

Locking woes continue. A couple of locks have made it all the way past MAX POWER only to lose lock, but most of our recent locklosses are in early ISC_LOCK stages. This is some weather. Winds are now in the 30 mph range and microseism is above the 50th percentile.