TITLE: 12/03 Day Shift: 1530-0030 UTC (0730-1630 PST), all times posted in UTC

STATE of H1: Planned Engineering

OUTGOING OPERATOR: None

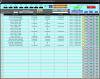

CURRENT ENVIRONMENT:

SEI_ENV state: CALM

Wind: 5mph Gusts, 3mph 3min avg

Primary useism: 0.02 μm/s

Secondary useism: 0.27 μm/s

QUICK SUMMARY:

H1 was IDLE overnight (there were troubles getting through DRMI and a few steps beyond yesterday afternoon/evening; see Oli's log). Currently in the middle of an Initial Alignment. Then we'll see about locking...

Day #2 of Crane Inspection work continues: this morning's work is on the South Bay crane, which will also need pendant work; this should go until about noon. (The remaining cranes after that are: Filter Cavity End Station, Staging Bldg, and some gantry cranes.) Yesterday, the rest of the LVEA cranes were inspected, as well as the End & Mid Stations.

(It's been almost 15 min, and the Green Arms are almost aligned in Initial Alignment.)

Got word there are issues with PCal at EX (following investigations by Tony & Dave last night).

After the alignment is done, Dave will restart the models on h1iscex, and after that they may swap the 18-bit DAC back in (the 20-bit DAC was installed yesterday).