TITLE: 10/08 Day Shift: 1430-2330 UTC (0730-1630 PDT), all times posted in UTC

STATE of H1: Observing at 152Mpc

OUTGOING OPERATOR: TJ

CURRENT ENVIRONMENT:

SEI_ENV state: CALM

Wind: 4mph Gusts, 3mph 3min avg

Primary useism: 0.01 μm/s

Secondary useism: 0.16 μm/s

QUICK SUMMARY:

H1's been locked for 1.25hrs. Quiet environmentally. 2nd day in a row with the LAB1 500nm dust alarm (ringing but not active) in the Optics Lab.

Overnight:

1056-1319utc DOWN due to a lockloss from an M5.0 Guatemala earthquake. H1 spent 49min trying to get to DRMI w/ no luck before running an alignment, and the alignment was then plagued by a glitching SRM with (2) SRM watchdog trips (TJ was awakened by this and alogged it this morning). After the SRM hubbub (glitches from 1215-1228utc), TJ was able to have H1 automatically make it back up to Observing.
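For reference, the overnight durations can be tallied straight from the UTC stamps above. This is only a minimal sketch: the helper name `utc_span_minutes` is hypothetical (not part of any site tool), and it assumes plain HHMM stamps less than 24 hours apart.

```python
from datetime import datetime, timedelta


def utc_span_minutes(start_hhmm: str, end_hhmm: str) -> int:
    """Minutes between two HHMM UTC stamps; rolls past midnight if end < start."""
    fmt = "%H%M"
    start = datetime.strptime(start_hhmm, fmt)
    end = datetime.strptime(end_hhmm, fmt)
    if end < start:
        # Assumed: the span crosses 0000 UTC exactly once.
        end += timedelta(days=1)
    return int((end - start).total_seconds() // 60)


# Stamps taken from the overnight summary above.
down_minutes = utc_span_minutes("1056", "1319")      # total time out of Observing
glitch_minutes = utc_span_minutes("1215", "1228")    # window of SRM glitches
print(f"Down: {down_minutes} min, SRM glitch window: {glitch_minutes} min")
```

With the stamps above, this gives 143 min down in total, of which the SRM glitches spanned 13 min.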

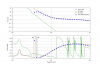

Looks like SRY locked but then immediately lost it, perhaps right on the cusp of having enough signal. ALIGN_IFO goes to DOWN and starts to turn things off, but the L drive from SRCL is still on for ~2.5 seconds. Even after it gets turned off, SRM is still swinging (attachment 1).

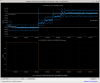

I'm a bit confused about how Y is seen to be moving so much at 1Hz when we are only driving L. When I trend the SRM WD trips, it looks like this issue has only been happening for a few weeks. We have tripped not only during SRY locking but a few times during DRMI acquisition as well. The timing is very suspicious given the SRM sat amp swap on Sept 23 - alog87103 alog87105.