Based on the PRCL injections I performed yesterday (alog 76805), I decided to try adjusting the PRM L2A gains to see whether I could change the PRCL/CHARD Y coupling. For the test, I adjusted the gain in the PRM M3 L2Y drivealign bank. I used a ramp time of 30 seconds and started small to avoid locklosses.
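As a rough illustration of the stepping procedure, the sketch below lists the gain values tried in this test and the intermediate values a 30 second ramp would pass through. The linear ramp shape, the 1 second step, and the helper name `ramp_values` are assumptions made for illustration only; the actual ramp is handled by the filter bank itself, and its shape may differ.

```python
def ramp_values(start, target, ramp_time_s, step_s=1.0):
    """Return linearly interpolated gain values from start to target.

    Assumption: a linear ramp sampled every step_s seconds. This is only
    a stand-in for whatever ramp shape the real filter bank applies.
    """
    n_steps = max(1, round(ramp_time_s / step_s))
    return [start + (target - start) * (i / n_steps) for i in range(n_steps + 1)]


# Gains tried in this test: small positive steps, back to zero, then negative.
sequence = [0.0, 0.01, 0.03, 0.1, 0.0, -0.03, -0.1]

for old, new in zip(sequence, sequence[1:]):
    ramp = ramp_values(old, new, ramp_time_s=30.0)
    largest_step = max(abs(b - a) for a, b in zip(ramp, ramp[1:]))
    print(f"{old:+.2f} -> {new:+.2f}: {len(ramp)} points, "
          f"largest step {largest_step:.5f}")
```

Starting with the smallest gain keeps each individual step small, which is the point of "starting small" above.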

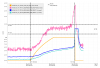

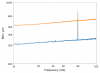

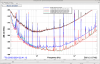

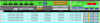

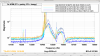

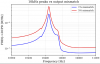

Generally, a positive L2Y gain made the PRCL/DARM coupling worse above 10 Hz. I tried gains of 0.01, 0.03, and 0.1. I saw the PRCL/DARM transfer function increase above 15 Hz at gains of 0.03 and 0.1 (no visible change at 0.01). There was no appreciable change in the PRCL/CHARD Y transfer function, but the coherence decreased as the gain increased. To make sure I wasn't being fooled by thermalization (we were locked for 4.5 hours during these tests), I went back to zero gain and confirmed that the transfer functions were still the same.
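For reference, here is a minimal sketch of how a transfer function and a coherence estimate can be formed from an excitation channel and a response channel. It uses synthetic data only: the single-frequency sine excitation, the sample rate, the coupling value `COUPLING`, and the noise levels are all made up for illustration, and this is not the measurement tool used for the plots above. It shows that the two estimates are separate quantities: adding uncorrelated noise to the response lowers the coherence while leaving the transfer function estimate roughly the same. It says nothing about why the coherence changed in this test.

```python
import cmath
import math
import random

FS = 256          # sample rate in Hz (assumed)
F0 = 20.0         # excitation frequency in Hz (assumed)
N_SEG = 16        # number of averaging segments
SEG_LEN = 256     # samples per segment (1 s, integer number of cycles)
COUPLING = 0.1    # hypothetical excitation-to-response coupling


def segment_bin(x, f0, fs):
    """Complex amplitude of x at frequency f0 (single-bin DFT)."""
    n = len(x)
    acc = sum(v * cmath.exp(-2j * math.pi * f0 * k / fs) for k, v in enumerate(x))
    return 2 * acc / n


def tf_and_coherence(exc, resp, f0, fs, nseg):
    """Segment-averaged transfer function and coherence at one frequency."""
    seg_len = len(exc) // nseg
    sxx = syy = 0.0
    sxy = 0j
    for i in range(nseg):
        a = segment_bin(exc[i * seg_len:(i + 1) * seg_len], f0, fs)
        b = segment_bin(resp[i * seg_len:(i + 1) * seg_len], f0, fs)
        sxx += abs(a) ** 2
        syy += abs(b) ** 2
        sxy += a.conjugate() * b
    return sxy / sxx, abs(sxy) ** 2 / (sxx * syy)


def simulate(noise_sigma, seed=0):
    """Synthetic excitation and response with uncorrelated Gaussian noise."""
    rng = random.Random(seed)
    n = N_SEG * SEG_LEN
    exc = [math.sin(2 * math.pi * F0 * k / FS) for k in range(n)]
    resp = [COUPLING * e + noise_sigma * rng.gauss(0.0, 1.0) for e in exc]
    return tf_and_coherence(exc, resp, F0, FS, N_SEG)


for sigma in (0.05, 0.5):
    tf, coh = simulate(sigma)
    print(f"noise sigma {sigma}: |TF| = {abs(tf):.3f}, coherence = {coh:.3f}")
```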

I then tried the negative direction. Gains of -0.03 and -0.1 reduced the PRCL/DARM transfer function above 15 Hz, but with the -0.1 gain I noticed an increase in the coupling below 10 Hz. Again, there was little change in the PRCL/CHARD transfer function, but the coherence dropped as the gain became more negative. While I was thinking about what this means, there was a lockloss from ETM saturations. It appears that changing the L2A gain caused the lockloss. There was a ring-up in the DARM control signal at about 1 Hz. The increased coupling at low frequency is probably to blame.

A comparison of CHARD/PRCL and DARM/PRCL at 0, 0.1 and -0.1 PRM L2Y gain is shown here.

Also to note, during these tests I saw no change in the PRCL/MICH and PRCL/SRCL coupling.

The fact that the PRCL/CHARD coupling is not changing appreciably while the PRCL/DARM coupling is changing is probably a sign that we are incorrectly compensating for some other problem.