WP11766 Shorten SWWD SUS-EY trip time

Dave:

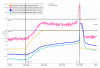

The h1iopsusey model was changed to reduce the SWWD SUS timer from 900 s to 600 s. This shortens the SUS DACKILL time to trip from 20 minutes to 15 minutes, so it is no longer coincident with the HWWD tripping.
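The two figures are consistent if the DACKILL trip time is the SUS timer plus a fixed 300 s contributed by earlier watchdog stages. That additive model is an assumption inferred from the numbers above, not something read from the model file; the function name and the `PRIOR_STAGES_S` constant are hypothetical.

```python
# Hypothetical additive model: trip time = earlier stages + SUS timer.
# PRIOR_STAGES_S is inferred from (20 min - 900 s); it is not taken from the model file.
PRIOR_STAGES_S = 20 * 60 - 900  # 300 s

def dackill_trip_minutes(sus_timer_s: int) -> float:
    """Return the time to a SUS DACKILL trip, in minutes, under the assumed model."""
    return (PRIOR_STAGES_S + sus_timer_s) / 60

old_trip = dackill_trip_minutes(900)  # timer before the change
new_trip = dackill_trip_minutes(600)  # timer after the change
print(old_trip, new_trip)
```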

This IOP restart required the restart of all the models on h1susey, and was done in conjunction with the restart of h1susetmy.

After all the models had been running again for a few minutes, h1susey locked up. It had dropped off the network (no response to ping or ssh). We fenced it from Dolphin and power cycled it via its IPMI management port.

WP11743 SUS DACKILL Removal

Jeff, Oli, Jim, Dave:

Today we removed the SUS DACKILL from the end station models h1susetmx and h1susetmy. The Dolphin IPC receiving models h1isietmx and h1isietmy were modified to replicate a good IPC receiver.

In addition, the h1susetmxpi and h1susetmypi models also needed replicated receivers.

Unfortunately, I initially set the ERR replication value incorrectly: it should be 0, not 1. The receiver models were restarted with the correct values.

The first round of restarts required a DAQ restart; the subsequent changes only needed model restarts.

The TMS models were modified later, requiring a second DAQ restart.

WP11761 sw-fces-cds0 Firmware Upgrade

Jonathan:

The firmware on the FCES switch sw-fces-cds0 was upgraded.

WP11765 DMT Upgrade and Reboots

Dan:

This action was canceled for today and will be scheduled for a later maintenance period.

DAQ Restart

Jonathan, Erik, Dave:

The DAQ was restarted three times for the above model changes: once partially and twice completely.

The first restart (partial 0-leg only) at 12:30 highlighted that the PI models were showing IPC Rx errors due to a removed SUS SHMEM channel.

The second full restart at 13:06/13:12 was in support of the SUS-ETM, SUS-ETM-PI and ISI-ETM model changes.

The third restart (full) at 14:47/14:52 was in support of the SUS-TMS model changes.

There were no major issues with the DAQ restarts, just the usual GDS second restarts for channel list synchronization.

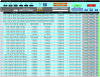

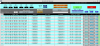

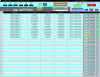

Restart/Reboot Log

Tue12Mar2024

LOC TIME HOSTNAME MODEL/REBOOT

12:14:27 h1seiex h1isietmx <<< SUS-ETM and ISI-ETM model restarts

12:14:58 h1susex h1susetmx

12:15:32 h1seiey h1isietmy

12:16:47 h1susey h1iopsusey <<< EY IOP and all Models restarts

12:17:04 h1susey h1susetmy

12:17:18 h1susey h1sustmsy

12:17:32 h1susey h1susetmypi

12:24:21 h1susey ***REBOOT*** <<< h1susey locked up, needed a reboot

12:26:25 h1susey h1iopsusey

12:26:38 h1susey h1susetmy

12:26:51 h1susey h1sustmsy

12:27:04 h1susey h1susetmypi

12:30:40 h1daqdc0 [DAQ] <<< Partial 0-leg restart

12:30:49 h1daqfw0 [DAQ]

12:30:49 h1daqtw0 [DAQ]

12:30:50 h1daqnds0 [DAQ]

12:30:58 h1daqgds0 [DAQ]

12:34:33 h1daqgds0 [DAQ]

12:50:53 h1susex h1susetmxpi <<< PI model change, remove IPC Rx

12:55:45 h1susey h1susetmypi

13:06:50 h1daqdc0 [DAQ] <<< Full DAQ restart for susetm, susetmpi, isietm models

13:07:01 h1daqfw0 [DAQ]

13:07:01 h1daqtw0 [DAQ]

13:07:02 h1daqnds0 [DAQ]

13:07:10 h1daqgds0 [DAQ]

13:08:42 h1susey h1susetmypi <<< Problem is etmypi build (install too quick), rebuild and install.

13:12:08 h1daqdc1 [DAQ]

13:12:20 h1daqfw1 [DAQ]

13:12:20 h1daqtw1 [DAQ]

13:12:22 h1daqnds1 [DAQ]

13:12:30 h1daqgds1 [DAQ]

13:12:56 h1daqgds1 [DAQ]

13:49:38 h1seiex h1isietmx <<< Fix the replicated IPC ERR=0

13:55:49 h1seiey h1isietmy

13:58:35 h1susex h1susetmxpi

14:01:46 h1susey h1susetmypi

14:43:40 h1susex h1sustmsx <<< New TMS models

14:44:06 h1susey h1sustmsy

14:47:04 h1daqdc0 [DAQ] <<< DAQ restart for TMS model changes

14:47:15 h1daqfw0 [DAQ]

14:47:16 h1daqnds0 [DAQ]

14:47:16 h1daqtw0 [DAQ]

14:47:24 h1daqgds0 [DAQ]

14:47:50 h1daqgds0 [DAQ]

14:52:21 h1daqdc1 [DAQ]

14:52:33 h1daqfw1 [DAQ]

14:52:33 h1daqtw1 [DAQ]

14:52:34 h1daqnds1 [DAQ]

14:52:42 h1daqgds1 [DAQ]
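The log above follows a regular `TIME HOSTNAME MODEL <<< note` layout, so it can be summarized with a short script. This is a minimal sketch, assuming that layout holds for every entry; the function name `parse_restart_line` is hypothetical, and the sample lines are copied from the log purely for illustration.

```python
import re
from collections import Counter

# Assumed line layout: HH:MM:SS, hostname, model or marker, optional "<<< note".
LINE_RE = re.compile(r"^(\d{2}:\d{2}:\d{2})\s+(\S+)\s+(\S+)(?:\s+<{2,3}\s*(.*))?$")

def parse_restart_line(line):
    """Split one log line into (time, host, model, note); return None if it does not match."""
    m = LINE_RE.match(line.strip())
    if m is None:
        return None
    time, host, model, note = m.groups()
    return time, host, model, note or ""

sample = [
    "12:16:47 h1susey h1iopsusey <<< EY IOP and all Models restarts",
    "12:24:21 h1susey ***REBOOT*** <<< h1susey locked up, needed a reboot",
    "12:26:25 h1susey h1iopsusey",
    "12:30:40 h1daqdc0 [DAQ] <<< Partial 0-leg restart",
]

# Keep only lines that parse, then count entries per host.
entries = [e for e in map(parse_restart_line, sample) if e is not None]
per_host = Counter(host for _, host, _, _ in entries)
print(per_host)
```

Header lines such as `LOC TIME HOSTNAME MODEL/REBOOT` do not start with a timestamp, so they are skipped rather than misparsed.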