Elenna, Jenne, Gabriele, Matt, Trent, Louis, Georgia

- Jenne and I checked the fast shutter and ran the fast_shutter test (SYS -> AS port protection -> run)

- After the initial alignment (alog here) we got through green arms locking at up to CHECK_MICH_FRINGES. When we tried to do PRMI there was a timer that kept taking it back to MICH fringes since the POP90 buildups were so poor. On the AS_AIR camera the alignment looks bad in yaw.

- We edited ISC_LOCK line 1353 to lower the threshold for PRMI flashes on POPAIR_B_RF90 from 20 to 4.

- We ran the dark offsets script with the IMC offline and the ALS shuttered (sdf screenshots here), and started locking again.

- The Y arm was finicky, we struggled to get the flashes above 0.6... We lowered the PD threshold in the ALS Y PDH (H1:ALS-Y_REFL_LOCK_TRANSPDNORMTHRESH) from 0.7 to 0.6.

- We also lowered the threshold in ALS_ARM gen_INCREASE_FLASHES from 0.9 to 0.6.... feels like cheating, maybe the alignment onto ISCT1 is off? The camera alignment for ALS_Y also looks bad (see screenshot)

- Even though we did an initial alignment earlier today, the PRMI flashes are bad and the MICH fringes don't look great. PRMI_ASC caused a couple of locklosses, so we took the guardian to PRMI_LOCKED and tried aligning it by hand. Elenna aligned PRM and BS, but the buildups and camera indicated bad pitch alignment.

- We re-ran initial alignment, and then the guardian took us straight up to PRMI_ASC, DRMI also locked easily! POPAIR_RF18 was a little noisy.

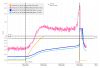

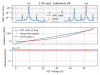

- We started the CARM offset reduction sequence but lost lock at DHARD_WFS, the two arms were very different (higher build up for Y arm), when DHARD came on the arms were pulled closer together but we lost RF9, and we lost lock.

- We decided to stop at DARM_TO_RF, step the X arm (X hard) closer using the move_arm_dev script and fix PRM and SRM alignment to increase the buildups.

- While we stepped the arm and adjusted PRM, the green arms lost lock. We think we might be aligning the whole IFO to some bad ITM alignments (how accurately aligned are they after the baffle PD alignment script?)

We decided to call it a night at this point.

HAM8 scans collected at T240018

RGA tree was baked at 150C for 5 days following the replacement of leaking calibrated leak with a blank.