day 1 (alog 75548), day 2 (alog 75557), day 3 (alog 75575)

HAM7 irises were good

Sheila/Camilla checked the iris position on HAM7 and it was good.

ASC-AS_C whitening gain was increased by 18dB, dark offset was reset

I didn't like that the ASC-AS_C input was so small. Increased the whitening gain by 18dB (from nominal 18dB to 36dB) and reset the dark offset.

Recentered the beam on ASC-AS_C. One strange thing was that ASC-AS_C_NSUM would become MUCH bigger (like a factor of 10) when SRM is misaligned, so I was worried that I was looking at a ghost beam. Camilla measured the beam power to be ~1mW out of HAM7 and ~0.7mW into HAM6. When ASC-AS_C was centered, ASC-AS_C_NSUM_OUT became ~0.008 give or take some. Taking the 18dB extra whitening (i.e. a factor of 8) into account, ASC-AS_C_NSUM~0.008 means about 1mW into HAM6, which is in the right ballpark, so I convinced myself that the beam on AS_C was good.
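The ballpark check above can be written out as a minimal sketch. It assumes that the whitening gain in dB is an amplitude gain (20*log10) and that ASC-AS_C_NSUM at nominal whitening reads in watts; the second assumption is only inferred from the "0.008 means about 1mW" statement, and the variable names are made up for illustration.

```python
def db_to_amplitude_factor(db: float) -> float:
    """Convert a gain in dB to a linear amplitude factor (20*log10 convention)."""
    return 10.0 ** (db / 20.0)

EXTRA_WHITENING_DB = 18.0  # from the log: whitening raised from 18 dB to 36 dB
NSUM_CENTERED = 0.008      # ASC-AS_C_NSUM_OUT with the beam centered (from the log)

# 18 dB is a factor of about 7.9, i.e. "a factor of 8"
factor = db_to_amplitude_factor(EXTRA_WHITENING_DB)

# Undo the extra whitening to get the value NSUM would show at nominal gain
nsum_at_nominal_gain = NSUM_CENTERED / factor

# ASSUMPTION: NSUM at nominal gain is calibrated in watts
power_mw = nsum_at_nominal_gain * 1e3

print(f"whitening factor: {factor:.2f}")
print(f"implied power:    {power_mw:.2f} mW")
```

Under those assumptions the implied power comes out close to 1mW, to be compared with the ~0.7mW Camilla measured going into HAM6.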

HAM6 irises and beam height on OM, the beam was still very low on OM2

At this point OMC QPDs are reasonably centered, so Sheila and Camilla checked the beam position on irises in HAM6.

The beam was OK on the first iris but was a bit low (~2mm) on the iris closer to OM1.

The beam position on the OMs at this point, as well as the slider values and max DAC output, are listed below (see Camilla's pictures, too). Note that the YAW position in the table is the position of the incoming beam measured at some (unknown) distance from the mirror, not on the mirror itself.

| | OM1 | OM2 | OM3 |
|---|---|---|---|
| Beam height (nominal 4") | 1/8" too high | 1/4" too low | 1/16" too low |
| YAW position | 1/8" to +X | 1/32" to -X | Cannot measure |
| PIT slider | 430 | 20 | 610 |
| YAW slider | 600 | 1300 | -231 |
| Max DAC output | 11k | 7k | 9k |

The beam was clearing the input and output hole on the shroud, was cleanly hitting the small OMCR steering mirror by the cage, and was already going to the OMCR diode.

They confirmed that the OMC trans video beam was visible on the viewer card when OMC flashes and it was hitting the steering mirror (but we need a viewport simulator to see if the beam will clear the viewport aperture).

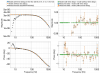

Bringing the beam up higher on OM2

Unfortunately the beam was still very low on OM2 (~1/4"). However, I was able to use the OM1 alignment slider to bring the beam up on OM2 and then use the OM2/3 alignment sliders to keep the OMC QPDs centered. After this was done, the OM2 PIT offset became large but the OM1/OM3 offsets became low-ish. This was a very good sign as it's infinitely easier to mechanically tilt OM2 than OM1/OM3 due to superior mechanical design.

Anyway, by doing this, the beam height on OM2 went up by about 1/8" (see Rahul's pictures). It's still too low by 1/8", but bringing the beam up more would mean that OM3 DAC output will become large w/o mechanically relieving, which I didn't want to do, so I decided to stay here.

| | OM1 | OM2 | OM3 |
|---|---|---|---|
| Beam height | 1/8" too high (didn't measure, no reason to suspect that it changed) | 1/8" too low | 1/16" too low |
| PIT slider | 20 | 2710 | -590 |
| YAW slider | 650 | 660 | 60 |
| Max DAC output | 7.2k | 21k | 7.1k |
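The OM2 height bookkeeping above (1/4" low, raised by about 1/8", so still 1/8" low) can be checked with exact fractions, and converted to mm for comparison with the ~2mm iris offset quoted earlier. This is just a sketch of the arithmetic; the variable names are made up for illustration.

```python
from fractions import Fraction

INCH_TO_MM = Fraction(254, 10)  # 1 inch = 25.4 mm exactly

low_before = Fraction(1, 4)  # OM2 beam was 1/4" too low (first table)
raised_by = Fraction(1, 8)   # beam went up by about 1/8" using the OM1 slider
low_after = low_before - raised_by

def inches_to_mm(x: Fraction) -> float:
    """Convert a length in inches to millimetres."""
    return float(x * INCH_TO_MM)

print(f'OM2 still low by {low_after}" = {inches_to_mm(low_after):.3f} mm')
```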

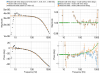

Mechanically relieving the OM2 PIT offset

Julian set the OM2 PIT slider gain to 0.75 (from 1), and Rahul turned the balance mass screw on the upper mass of OM2 to compensate. We did this in four steps in total (slider gain 1->0.75->0.5->0.25->0, each step followed by Rahul's mechanical adjustment). We had to adjust the OM2 YAW slider in the process to bring the beam back to the center of the OMC QPDs, but overall, this was a really easy process (did I mention that tip-tilt adjustment is not an easy thing to do?).
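The stepwise offload described above can be sketched as follows. It assumes the effective electronic PIT offset is simply slider value times slider gain, so that each gain step hands a quarter of the original offset over to the mechanical adjustment; `offload_steps` is a hypothetical helper, not an existing script.

```python
def offload_steps(slider_counts, gains=(0.75, 0.5, 0.25, 0.0)):
    """Return (gain, remaining electronic offset) for each offload step.

    ASSUMPTION: effective offset = slider value * slider gain.
    """
    return [(g, slider_counts * g) for g in gains]

OM2_PIT_SLIDER = 2710  # OM2 PIT slider value before offloading (from the table)

steps = offload_steps(OM2_PIT_SLIDER)
for gain, remaining in steps:
    # After each gain change, the balance mass screw is turned until the
    # beam is back on the OMC QPDs, then the next step is taken.
    print(f"slider gain {gain:4.2f} -> electronic offset {remaining:7.1f}")
```

Taking it in quarters keeps each mechanical correction small enough that the beam stays on the OMC QPDs between steps.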

We ended up with this (we haven't measured the beam height again as OM2 was the only thing that moved, so the height numbers are from the previous table just for convenience).

| | OM1 | OM2 | OM3 |
|---|---|---|---|
| Beam height (didn't measure, no reason to suspect that they changed) | 1/8" high | 1/8" low | 1/16" low |
| PIT slider | 20 | 0 | -590 |
| YAW slider | 650 | 760 | 60 |
| Max DAC output | 7.2k | 5k | 7.1k |

I declared that this is a good place to stay. Rahul fixed the balance mass on OM2 upper mass.

Rahul also fixed the balance mass on OMCS.

Fast shutter path, WFS path, ASAIR path, OMCR path

We closed the fast shutter and the reflected beam goes to the high power beam dump.

We opened the fast shutter and checked the WFS path. The beam was already hitting one quadrant of WFSB but was entirely missing WFSA. The beam was a bit low on the lens on the WFS sled, so I used two fixed 1" steering mirrors upstream of the WFS sled to move the beam up on the lens and keep the path reasonably level. See Rahul's pictures for the beam height. After this, both WFSs saw the light, and at this point we used pico to center both. We weren't able to see the beam reflected by the WFS but assume that it still hits the black glass.

We tried to see the ASAIR beam but couldn't. Since the beam is hitting the center of ASC-AS_C, we assume that the ASAIR beam will still hit the black glass.

OMCR beam was already hitting the OMCR photodiode, but the beam was REALLY close to the beam dump that's supposed to catch ghost beam. We temporarily moved the dump so the beam is about 5mm from the edge of the glass, but this might be too far. I'll find how close it's supposed to be from the past alog.

Couldn't check if PZT1 is working.

We tried to see if the OMC length error signal makes sense when scanning the OMC length, but whenever the OMC was close to resonance there was a huge transient in the DCPD SUM as well as in the length signal. Probably the intensity noise (due to acoustics or jitter or something) is too much. We'll measure the capacitance of the PZT from outside.

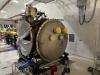

Current status of LVEA

Laser hazard, HAM5 GV is closed, HAM6 and HAM7 curtains are closed.

Remaining tasks

- Laser is required: Move the irises on HAM6 to the current beam position.

- Laser is required: OMCR path (beam dump position).

- Laser is required: OMC trans video path. Use viewport simulator.

- Check OSEMs of OMCS and OMs, recenter if needed.

- Restore the shroud panels.

- Restore the OMC trans beamdump on the -Y side.

- PZT1 capacitance check, grounding check etc.