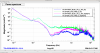

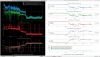

I stole the filter that Huyen used for her HAM1 feedforward test at LLO and tried it on the LHO HAM1, with a 17% gain tweak (i.e., I multiplied the LLO filter by a gain of 1.17) to match my measurements, and it seems to work well. My own hand fits had been giving me either excess low-frequency noise or gain peaking where I tried to roll the filters off. The first plot shows the on/off ASDs for the HAM2 STS and the HAM1 Z HEPI L4Cs. I'm not sure I believe the improvement below 1 Hz is due to the feedforward, but Huyen said she got improvement from roughly 1 to 70 Hz, and that seems to be the case here as well. I'm leaving this filter on overnight to get good low-frequency data.
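
For reference, here is a minimal sketch of what the 1.17 gain tweak does to a filter's response: it scales the magnitude by the same factor (about 1.4 dB) at every frequency and leaves the phase alone. The zeros, poles, and base gain below are made-up placeholders, not the actual LLO feedforward filter, and `zpk_response` and `compare` are just illustrative helpers.

```python
import cmath
import math


def zpk_response(zeros, poles, gain, freq_hz):
    """Evaluate H(s) = gain * prod(s - z) / prod(s - p) at s = 2*pi*i*f."""
    s = 2j * math.pi * freq_hz
    num = complex(1.0)
    for z in zeros:
        num *= (s - z)
    den = complex(1.0)
    for p in poles:
        den *= (s - p)
    return gain * num / den


# Hypothetical placeholder filter (rad/s), NOT the real LLO filter.
zeros = [-2 * math.pi * 0.5]
poles = [-2 * math.pi * 5.0, -2 * math.pi * 50.0]
llo_gain = 1.0

GAIN_TWEAK = 1.17  # the 17% tweak quoted in this entry


def compare(freq_hz):
    """Return (magnitude ratio, phase difference in rad) of tweaked vs original."""
    h_llo = zpk_response(zeros, poles, llo_gain, freq_hz)
    h_lho = zpk_response(zeros, poles, llo_gain * GAIN_TWEAK, freq_hz)
    return abs(h_lho) / abs(h_llo), cmath.phase(h_lho / h_llo)


for f in (1.0, 15.0, 70.0):
    ratio, dphi = compare(f)
    print(f"{f:5.1f} Hz: magnitude x{ratio:.2f} "
          f"({20 * math.log10(ratio):.2f} dB), phase change {dphi:.1e} rad")
```

Since the tweak is a pure scalar, it can only fix an overall calibration mismatch between the two sites; any difference in the shape of the response would need a refit.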

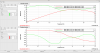

Second image compares one of my filters (red) with the LLO filter (green). One thing I still don't understand is that my filters have often caused broad low-frequency noise, but the LLO filter doesn't seem to. We don't currently have a noise model for HEPI that would let us model the feedforward performance; having one would help a lot.

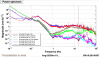

Attaching long spectra comparing HAM1 with and without the LLO feedforward. Still looks pretty good; we should run with this. Live traces are with the feedforward on and refs are with it off, so the improvement in the HAM1 HEPI L4Cs is the change from brown to bright green: something like a factor of 20 at 15 Hz, which is the frequency where, with this feedforward running, HAM1 briefly catches up to the performance of HAM2 and HAM4.
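
The "factor of 20" is just the off/on ASD ratio read off the plot at 15 Hz. A minimal sketch of that arithmetic, assuming the two ASDs share a frequency vector; the numbers below are invented for illustration and are not exported from the attached spectra, and `improvement_factor` and `to_db` are hypothetical helper names.

```python
import math


def improvement_factor(freqs_hz, asd_off, asd_on, target_hz):
    """Return (bin frequency, off/on ASD ratio) at the bin nearest target_hz."""
    if not (len(freqs_hz) == len(asd_off) == len(asd_on)):
        raise ValueError("frequency and ASD arrays must have the same length")
    idx = min(range(len(freqs_hz)), key=lambda i: abs(freqs_hz[i] - target_hz))
    return freqs_hz[idx], asd_off[idx] / asd_on[idx]


def to_db(amplitude_ratio):
    """Convert an amplitude (ASD) ratio to decibels."""
    return 20.0 * math.log10(amplitude_ratio)


# Invented example values, NOT data from the attached plots.
freqs = [5.0, 10.0, 15.0, 20.0]            # Hz
off = [4.0e-9, 2.0e-9, 1.0e-9, 5.0e-10]    # feedforward off (reference traces)
on = [2.0e-9, 4.0e-10, 5.0e-11, 1.0e-10]   # feedforward on (live traces)

f_bin, factor = improvement_factor(freqs, off, on, 15.0)
print(f"{f_bin} Hz: off/on = {factor:.1f} ({to_db(factor):.1f} dB)")
```

A factor of 20 in amplitude is about 26 dB; the factor of almost 2 in CHARD pitch would be about 6 dB on the same scale.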

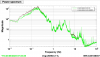

Second plot compares CHARD pitch ASDs during these same times. The improvement here is less dramatic, but there is still a factor of almost 2 around 8-9 Hz. I suspect the low-frequency (0.1 Hz and below) differences are due to wind. I also looked at CAL DELTAL, but it seems that squeezing was off or something during my reference time; there's a lot of extra signal above 10 Hz.