Summary:

This is my attempt to constrain the mode matching (MM) parameters of the IFO beam on the OMC, to see if we'd do any better as far as MM is concerned with an even "colder" OM2. This is somewhat of a moot point because, empirically, the noise is lower with hot OM2 than with cold, even though the mode matching is better with cold. But anyway, if we solve the noise problem/mystery we could think about improving the MM loss.

The answer is: it depends. We might gain some if we're lucky, but not much, 1% or 2% at most.

Details:

From Louis' calibration comparison with hot vs. cold OM2 (alog 70907), we know that the optical gain increases by about a factor of 1.02 for cold OM2 relative to hot. Given the same DC power coming through the OMC (because of the DARM loop), the optical gain is proportional to sqrt(1-MMLoss).
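As a quick worked example of this relation (a sketch only; the function name and the sample 5% hot-OM2 loss are illustrative assumptions, not measured values):

```python
def cold_loss_from_hot(hot_loss, gain_ratio=1.02):
    """MM loss with cold OM2 implied by a given hot-OM2 loss.

    Assumes optical gain is proportional to sqrt(1 - MMLoss) at fixed DC
    power, so (1 - cold_loss) = gain_ratio**2 * (1 - hot_loss).
    """
    return 1.0 - gain_ratio**2 * (1.0 - hot_loss)


# Illustrative only: a hypothetical 5% MM loss with hot OM2
print(f"{cold_loss_from_hot(0.05):.4f}")
```

Under this model a 5% hot loss maps to roughly 1.2% cold loss, and any hot loss below about 3.9% would imply a negative cold loss, i.e. it cannot produce a gain ratio of 1.02.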

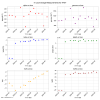

Imagine that the light falling on the OMC is characterized by the waist position offset from the OMC's waist (normalized by 2*Rayleigh range of the OMC) and the waist size (normalized by the waist size of the OMC). Pick an arbitrary point in this MM parameter space (posHot, sizeHot) and calculate the MM loss, assuming that OM2 is hot. Calculate the beam parameter upstream of OM2. Change the OM2 ROC to the cold value and propagate the upstream beam down to the OMC again. This light is represented by a different point (posCold, sizeCold). Calculate the MM loss again.
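For reference, the MM loss at a point in this normalized parameter space can be written in closed form for ideal Gaussian beams. The sketch below assumes pure TEM00 beams of the same wavelength with no astigmatism; the function name is mine, and the calculation behind the plots may differ in detail.

```python
def mm_loss(pos, size):
    """Mode-mismatch power loss between an input Gaussian beam and the OMC mode.

    pos  : waist position offset / (2 * OMC Rayleigh range)
    size : input waist radius / OMC waist radius
    Assumes ideal TEM00 beams, same wavelength, no astigmatism.
    """
    # In units of the OMC Rayleigh range, the waist offset is 2*pos and the
    # input beam's Rayleigh range is size**2 (Rayleigh range ~ waist**2).
    overlap = 4.0 * size**2 / ((1.0 + size**2) ** 2 + (2.0 * pos) ** 2)
    return 1.0 - overlap
```

With this normalization a perfectly matched beam (pos=0, size=1) has zero loss, and pos=0.5 with size=1 gives 20% loss.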

If sqrt((1-coldLoss)/(1-hotLoss)) is close enough to the measured optical gain ratio of 1.02, the pair of points (posHot, sizeHot) and (posCold, sizeCold) is compatible with the measurement. If you do this for all possible (posHot, sizeHot), you'll obtain two arcs, one corresponding to hot and the other corresponding to cold OM2, that are compatible with the measurement. (If it's hard to visualize this, you might want to read my alog 71145, which does a different calculation but is based on a similar mode matching model.)
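A minimal sketch of this compatibility test is below. The tolerance of 0.002 and the candidate loss pairs are placeholders I made up for illustration; they are not the values used for the plots.

```python
import math


def is_compatible(hot_loss, cold_loss, measured_ratio=1.02, tol=0.002):
    """True if a (hot, cold) loss pair reproduces the measured gain ratio."""
    ratio = math.sqrt((1.0 - cold_loss) / (1.0 - hot_loss))
    return abs(ratio - measured_ratio) <= tol


# Hypothetical (hot_loss, cold_loss) pairs, for illustration only
candidates = [(0.05, 0.012), (0.05, 0.05), (0.20, 0.168)]
compatible = [pair for pair in candidates if is_compatible(*pair)]
```

In the real scan each candidate pair would come from propagating one (posHot, sizeHot) point through hot and cold OM2, and the surviving points trace out the two arcs.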

In the first attached plot, the lowest and the middle arcs correspond to hot and cold OM2, respectively. Green arrows show the change from hot to cold for selected pairs of pixels. Note that this doesn't constrain the loss itself. As an arbitrary constraint to save calculation time I assumed that the MM loss is 20%.

Now, given that reality is somewhere on the middle arc, could we gain anything by making OM2 colder? I quickly added more cooling (OM2 ROC=2.25m), and that's represented by the top arc and red arrows.

In the second attached plot, which is the same as the first one but with my hand-scribble, if we're on the right half(-ish) encircled with the cyan line, making OM2 colder won't do us any good. If OTOH we're on the left half(-ish) encircled with the orange line, colder OM2 will give us some (but not a huge) gain.

The third plot shows the MM loss on the cold OM2 arc on the X axis and the MM loss for colder OM2 on the Y axis. For example, if the reality is ~1% MM loss for cold OM2 and we're lucky (i.e. inside the orange-encircled part of the arc), the loss will go down to ~0.5±0.2% or so if OM2 is even colder, but if we're unlucky (i.e. in the cyan-encircled part of the arc) the loss will go up to ~1.3±0.3%. If the reality is 10% MM loss for cold OM2, by going colder you'll get either 8.5% or 11.7%.

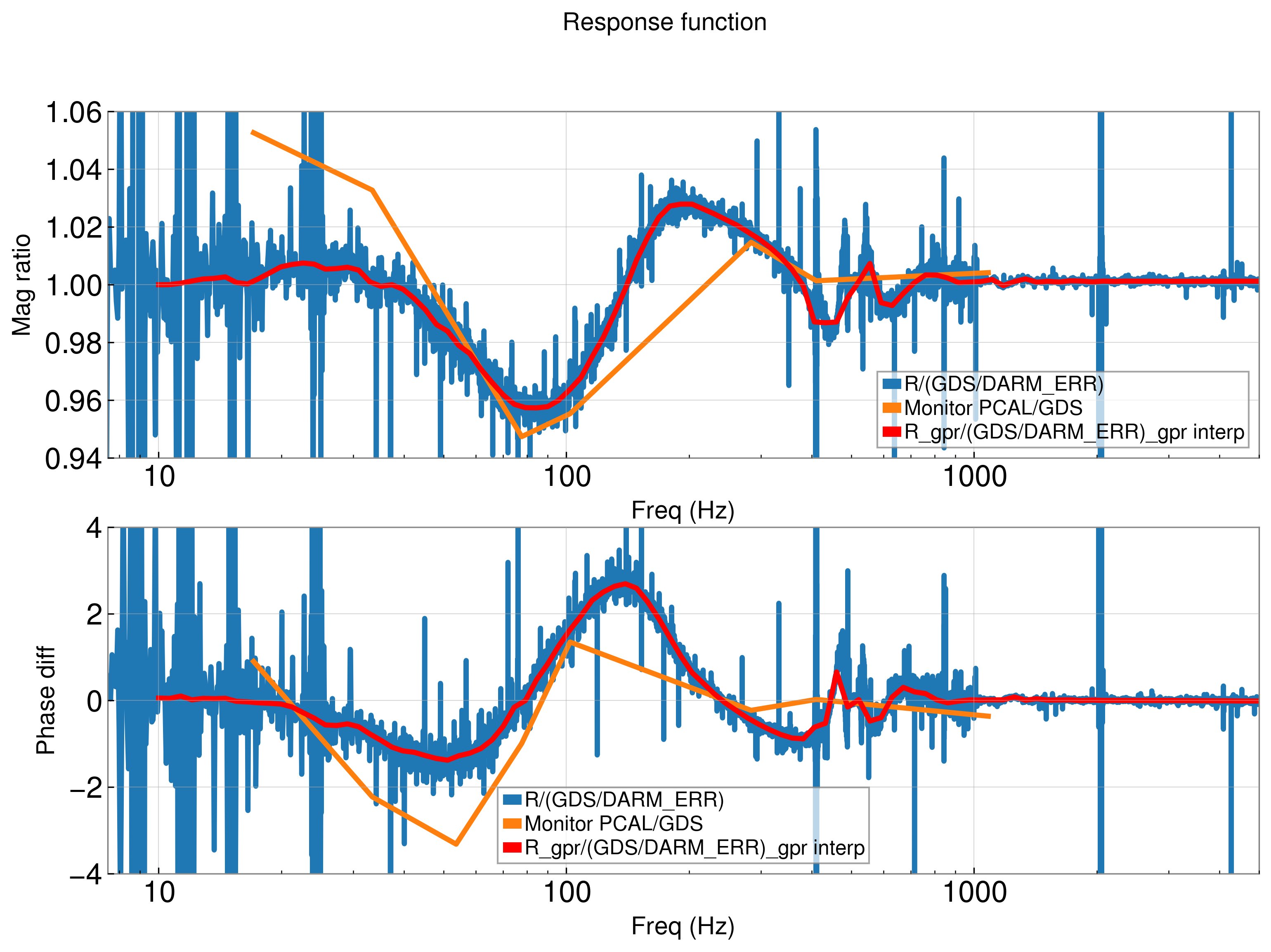

(I was hoping that the SR3 heater data (Daniel's alog 68884) would further constrain the parameters, but it turns out that we should have waited longer before turning off the heater. If you look at the calibration factor corresponding to the optical gain, there seems to be no change, certainly nothing larger than 0.2% (last plot). However, this was expected, as 2W for 10 minutes only gives us 1.22uD (using 4.75uD/W in LLO alog 27262 and the 70 min time constant in Aidan's comment in Daniel's alog), which makes basically zero change in MM loss if you start from cold OM2. If we had waited long enough, we'd have reached 9.5uD. By looking at how much the MM loss got worse with 9.5uD, we should be able to exclude some area from the arcs.)
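The size of the SR3 heater effect can be roughly cross-checked with a single-pole (exponential) thermal response. This response model and the function name are my assumptions; with the numbers quoted above it gives about 1.26uD after 10 minutes, in the same ballpark as the 1.22uD figure, and 9.5uD in the steady state.

```python
import math


def sr3_defocus_uD(power_W, minutes, uD_per_W=4.75, tau_min=70.0):
    """Defocus (in uD) after heating for `minutes`.

    Assumed model: single exponential with time constant tau_min,
    approaching a steady state of uD_per_W * power_W.
    """
    steady_state = uD_per_W * power_W
    return steady_state * (1.0 - math.exp(-minutes / tau_min))


after_10_min = sr3_defocus_uD(2.0, 10.0)  # about 1.26 uD under this model
```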

The drops out of observing at 3:29 UTC and 6:42 UTC seem to be due to an SDF diff of syscssqz, according to the attached DIAG_SDF log. The SQZ TTFSS COMGAIN and FASTGAIN changed at that time, but they are not monitored now (alog72915), so it should be another SDF diff related to the TTFSS change.