Follow-up to Ryan C.'s alog. Previously, when we ran into issues engaging DRMI ASC, we would wait in ENGAGE DRMI ASC for a few minutes to let the signals converge. This time we kept losing lock after a few seconds: a few ASC signals would start to converge, then suddenly swing wildly and cause the lockloss. This was most apparent in the MICH, SRC1, and PRC2 signals, in both P and Y. Keita and I decided to try walking the suspensions that feed into these signals, since their signal inputs were well into the thousands (into the tens of thousands for PRC2 in particular). After walking the BS (MICH), SRM (SRC1), and PR2 (PRC2), we were able to bring the ASC signals closer to 0; however, this didn't appear to help once we went back to ENGAGE DRMI ASC.

Here are the original values for the three suspensions I moved, in case they need to be reverted later:

PR2 P: 1582.4 Y: 3236.0

BS P: 98.61 Y: -395.81

SRM P: 2228.3 Y: -3144.0

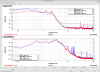

This image shows what the ASC signals looked like before moving the three suspensions, and this is what they looked like afterwards (sorry, these scopes have poor scaling). Keita suggested we start looking into the ASC loops themselves, particularly SRC2. Before the DRMI ASC loops turned on, we turned OFF the SRC2 servo, then went back to ENGAGE DRMI ASC. This seemed to let us hold in ENGAGE ASC. At this point, we tried turning the SRC2 loops back on with the gain halved for both P and Y (original gains: P 60, Y 100). With half the gain we were still seeing a good amount of 1 Hz motion in the signal, so we tried a quarter of the original gain, with the same result.

Next, we tried halving the SRC1 gains from 4 down to 2. We then added back the quartered SRC2 gains, which still gave the same result. At this point, we decided to leave the SRC2 loops off entirely while keeping the halved SRC1 P/Y gains and continue locking; this worked, and we were able to continue. Eventually guardian took over the ASC loops once we reached ENGAGE ASC, and we had no issues relocking afterwards. This should be looked at tomorrow during the day, but at least we now have a temporary workaround if we lose lock again.

Steps taken to bypass this issue - Tagging OpsInfo:

1) Wait in DRMI LOCKED PREP ASC

2) Turn OFF the SRC2 P/Y loops

3) Set the SRC1 P/Y gains to 2 (originally 4). Note: this step was extra caution on our part, since the SRC2 oscillation was coupling into SRC1.

4) Continue the locking process - guardian will eventually take over and set the control loops to their nominal state
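For reference, the temporary gain settings from the steps above can be written down as a small table derived from the nominal gains quoted in this entry (SRC1 P/Y 4, SRC2 P 60, SRC2 Y 100). This is only a hypothetical Python sketch for bookkeeping: the loop labels such as "SRC1_P" are illustrative and are not real guardian state names or EPICS channel names, and it does not touch any hardware. In practice guardian restores the nominal settings once it takes over.

```python
# Hypothetical sketch: loop labels like "SRC1_P" are illustrative only,
# not real guardian or EPICS channel names. Nominal gains are the ones
# quoted in this alog entry.
NOMINAL_GAINS = {
    "SRC1_P": 4.0,
    "SRC1_Y": 4.0,
    "SRC2_P": 60.0,
    "SRC2_Y": 100.0,
}


def workaround_gains(nominal):
    """Return the temporary DRMI ASC settings used as the workaround.

    SRC2 P/Y loops are switched OFF (represented here as None) and
    SRC1 P/Y run at half their nominal gain; anything else is unchanged.
    """
    settings = {}
    for loop, gain in nominal.items():
        if loop.startswith("SRC2"):
            settings[loop] = None  # loop turned OFF
        elif loop.startswith("SRC1"):
            settings[loop] = gain / 2  # halved, e.g. 4 -> 2
        else:
            settings[loop] = gain
    return settings


if __name__ == "__main__":
    for loop, gain in workaround_gains(NOMINAL_GAINS).items():
        state = "OFF" if gain is None else f"gain {gain:g}"
        print(f"{loop}: {state}")
```

Keeping the nominal values in one place makes it easy to check what guardian should restore after it takes over the loops.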

Back to NLN @ 10:12 UTC.